How to Design Agentic Software People Will Trust [Guest]

Looking beyond chat-based interfaces for designing next-generation AI software

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and supports my crippling chocolate milk addiction. We run on a “pay what you can” model—so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

Demetrios Brinkmann started the MLOps Community in 2020. Since its inception, the community has been a space for folks to discuss the ~pains~ art of bringing ML/AI to production. Through it, he regularly works with leading companies deploying Artificial Intelligence in production. His insights when it comes to deploying AI in high-performance systems that actually make money are second to none.

In the following guest post, DB lays out a clear argument: progress in agents will depend less on larger models and more on the interaction patterns we build around them. The piece examines where chat falls short, why shared visual context matters, how structured affordances reduce cognitive load, and what a modern “trust stack” needs to look like for agents operating in the real world. It’s an honest look at how richer interfaces — plans, partial outputs, controls, boundaries — change not just usability but user confidence.

With the rise of CLI, ADE (Agentic Development Interfaces), and other paradigms, this shift is playing out in real time. My one ask is that you think deeply about what this means and about how we can build interfaces that move us away from chat and towards interaction. What does the bridge between these two paradigms look like?

Looking forward to hearing your thoughts. If you like this post, sign up for DB’s upcoming virtual conference, Agents in Production, hosted in collaboration with Prosus.

It will show builders experimenting with visual context, live steerability, and permission-aware architectures. Across more than 30 sessions, you’ll hear from the engineers and researchers taking agents from prototype to production: Ben Hindman’s talking about MCP and what it means for building agents that hold context and state. Sanjana Sharma’s focusing on how to test and trust agents once they’re deployed. And Mefta Sadat’s showing what real orchestration looks like at scale.

(As always, I have received no compensation for this; I’m just a super fan of MLOps Community and their excellent work).

TL;DR

Like that new Vibe-Coder HR hired, chat-first UX is showing its limits. Text interfaces add cognitive load, blur accountability, and make control fuzzy. To move agents from novelty to necessity, we need richer ways to express intent, steer during execution, and a trust stack that handles data, permissions, and actions. The next leap won’t come from bigger models - it’ll come from better design.

The problem with chat-first

Chat UI felt right at first. It was approachable, familiar, and made these systems feel more like collaborators than command lines. It gave us a way to talk to software in the medium we already use with each other.

But chat is a brittle format when a task has structure, dependencies, or takes shape over time. It’s like trying to explain a drawing to someone over the phone: you know what you mean, they think they know what you mean, and the gap only shows up at the end. There’s no shared reference frame to correct midstream.

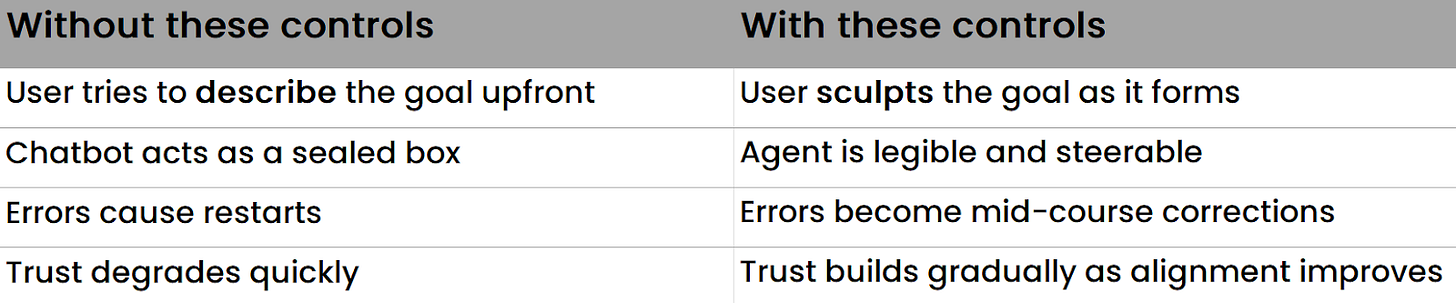

It quietly assumes the user already knows the final goal and how to phrase it precisely. Most of us don’t. We find the goal by circling it, adjusting, reacting. Chat doesn’t support that kind of unfolding. It asks for precision upfront and makes it hard to repair misunderstandings when they happen.

And when the system sounds confident in moments where it’s actually guessing, trust erodes fast. The first hallucination is funny. The second is mildly irritating. By the fifth, the user categorises the system as unreliable for anything that matters.

Chat can show intelligence. It struggles to support shared understanding.

We align with diagrams, not dialogue

Most of collaboration isn’t talking - it’s showing. Engineers sketch on whiteboards, circle dependencies, drag arrows, adjust diagrams mid-sentence. Visual structure reduces ambiguity and helps people form a shared mental model fast.

Chat strips away that grounding. It forces the user to compress a spatial idea into text and hope the system infers the same shape.

Better interfaces don’t mean ornamental UI. They mean interfaces that expose the structure the agent is already using internally: plans, steps, dependencies, intermediate drafts, tool calls. When users can see and shape those structures directly, alignment becomes something you do together rather than something you hope happened.

What better interfaces look like

We’re not starting from zero here. There are already clear signals of where interaction is heading.

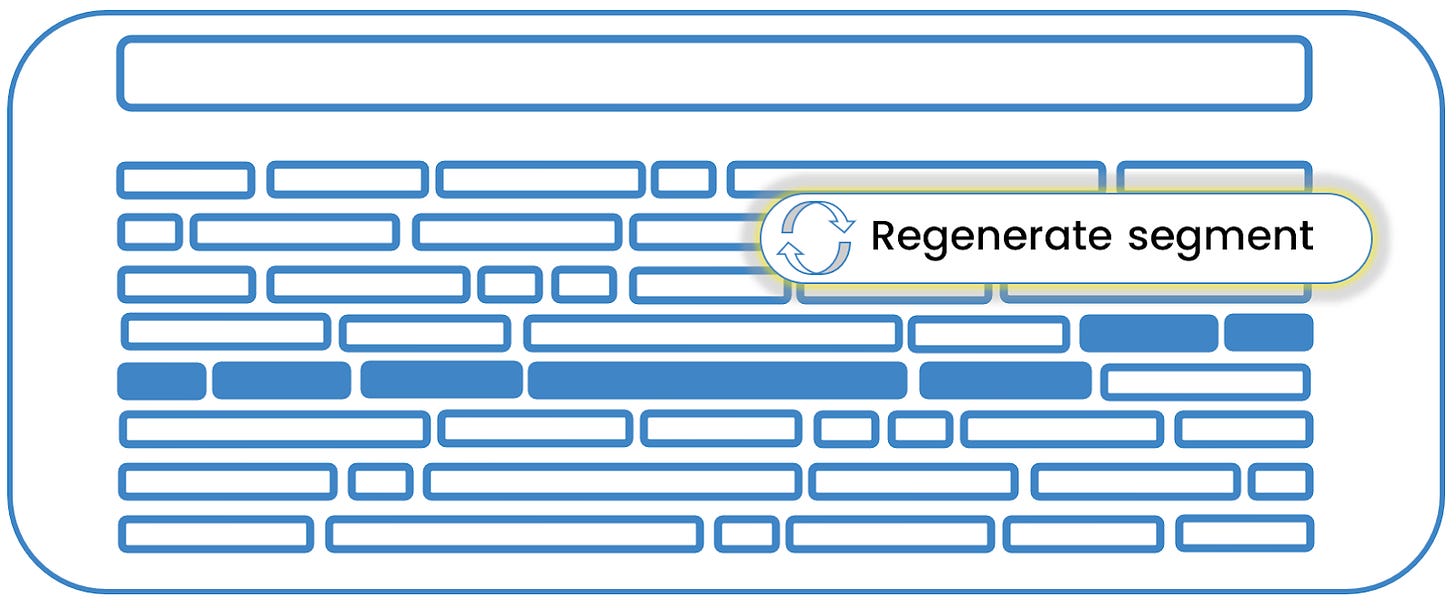

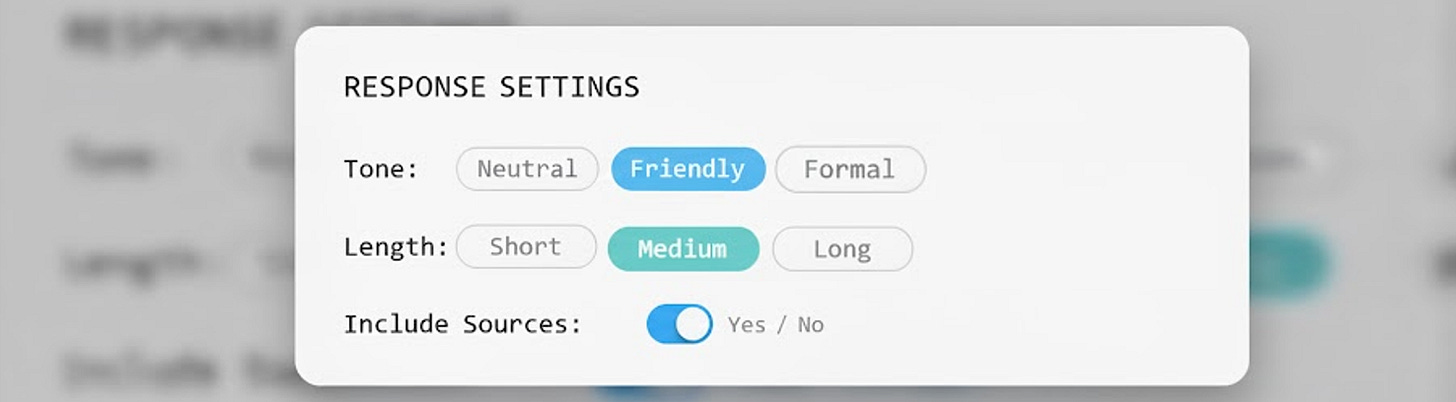

AI UX Patterns has been collecting early interface experiments in the wild. Some tools let you adjust or regenerate just one section of an output instead of throwing everything away. Others add small configuration panels for tone, length, structure, or emphasis. These seem minor, but they change the feel of working with the system. They introduce control points instead of making the user keep prompting and hoping.

We’re also seeing structured affordances make a quiet return. Toggles. Filters. Selectable blocks. The stuff interface designers have trusted for decades. It matters because it reduces the burden on language. Not everything needs to be phrased. Sometimes you just click the version you meant.

Instead of typing “make it shorter and more formal, and add sources,” you nudge three controls and the agent already knows what you mean. The interface carries part of the intent, so the language doesn’t have to.
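As a rough illustration of the interface carrying part of the intent, here is a minimal sketch in which three controls are serialized into a structured request instead of being phrased in a prompt. The `OutputControls` fields, their allowed values, and the example task string are all assumptions made up for this sketch, not any particular product's API.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class OutputControls:
    """Hypothetical UI controls; field names and values are assumptions."""
    length: str = "medium"        # "short" | "medium" | "long"
    tone: str = "neutral"         # "casual" | "neutral" | "formal"
    cite_sources: bool = False    # a simple toggle


def build_request(task: str, controls: OutputControls) -> str:
    """Combine the task with the current control state into one payload."""
    return json.dumps({"task": task, "constraints": asdict(controls)}, sort_keys=True)


# The user nudges three controls; nothing about style has to be typed.
request = build_request(
    "Summarize the attached report",  # placeholder task text
    OutputControls(length="short", tone="formal", cite_sources=True),
)
print(request)
```

The agent receives the constraints as data it can validate, rather than as adjectives it has to interpret.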

And then there’s compositional output. Instead of one long monologue, agents are returning modular pieces: text blocks, tables, embedded charts, small code snippets, sketches. Pieces you can rearrange, revise, replace. The result is something you can shape, rather than something you react to.

It echoes what Panos Stravopodis is exploring at Agents in Production - designing voice agents that make their reasoning and intent clear enough for humans to trust and guide.

How richer interfaces change workflows

People don’t specify tasks once and walk away. We refine. We change direction. We notice problems halfway through. Real work unfolds. Strong interfaces acknowledge that.

When an agent shows its intermediate state – the plan, the steps it thinks are required, the tools it intends to call – the interaction changes. The user can step in early, long before anything irreversible happens. Instead of finding out at the end that step three went completely sideways, the user can say, “No, that part’s off, fix that before continuing.”

Being able to regenerate just one part of an output matters for the same reason. If the entire result explodes every time you tweak one detail, the system feels fragile. When only a section is replaced and everything else holds, the agent feels stable. You can sculpt it.
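One way to picture section-level regeneration is to treat the output as a list of addressable blocks and replace only the one the user points at. The sketch below assumes a hypothetical `Block` type, and `fake_generate` stands in for whatever model call a real system would make.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Block:
    """One modular piece of agent output (names are assumptions)."""
    block_id: str
    kind: str        # e.g. "text", "table", "code"
    content: str
    version: int = 1


def regenerate_block(
    blocks: list[Block], block_id: str, generate: Callable[[Block], str]
) -> list[Block]:
    """Return a new list in which only the targeted block is regenerated."""
    if all(b.block_id != block_id for b in blocks):
        raise KeyError(block_id)
    return [
        Block(b.block_id, b.kind, generate(b), b.version + 1)
        if b.block_id == block_id
        else b  # untouched blocks are carried over as-is
        for b in blocks
    ]


def fake_generate(block: Block) -> str:
    """Stand-in for a model call; it just upper-cases the old content."""
    return block.content.upper()


draft = [
    Block("intro", "text", "hello"),
    Block("data", "table", "a|b"),
    Block("outro", "text", "bye"),
]
revised = regenerate_block(draft, "intro", fake_generate)
print([(b.block_id, b.content, b.version) for b in revised])
```

Because the untouched blocks are the very same objects, everything the user already approved holds still while one piece changes.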

Even showing uncertainty helps. If the agent flags the places where it’s inferring or guessing, the user instantly knows where to guide, review, or tighten constraints. Uncertainty isn’t the problem. Hidden uncertainty is.
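A minimal sketch of surfacing uncertainty, assuming the agent can tag each statement with where it came from and a rough confidence score. The `Claim` type, the source labels, the 0.7 threshold, and the sample claims are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    source: str        # "retrieved", "user", or "inferred" (assumed labels)
    confidence: float  # 0.0 to 1.0, however the system estimates it


def needs_review(claims: list[Claim], threshold: float = 0.7) -> list[Claim]:
    """Return guesses and low-confidence statements, in original order."""
    return [c for c in claims if c.source == "inferred" or c.confidence < threshold]


# Made-up sample data for the sketch.
claims = [
    Claim("Invoice total is 1,200 EUR", "retrieved", 0.95),
    Claim("Payment is due in 30 days", "inferred", 0.80),
    Claim("Vendor is based in Berlin", "retrieved", 0.55),
]
for claim in needs_review(claims):
    print(f"[review] {claim.text} ({claim.source}, {claim.confidence:.2f})")
```

The interface can then highlight just those two statements instead of asking the user to re-check everything.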

And when the agent lets you set execution boundaries – how aggressive it should be, what tools it’s allowed to use, how much latency vs thoroughness to trade – the system stops feeling mysterious and starts feeling directed. It feels like something you pilot, not something you hope behaves.

The shift is subtle but powerful: the user moves from hoping the model understood to shaping its understanding while the work is happening.

It becomes collaboration, not gambling.

From autopilot to co-pilot

A healthy agent workflow isn’t “give it a task and come back later.” That only works when the task is perfectly stated and the environment is stable. That’s not most real work. Most real work looks like co-piloting: steering and adjusting while the system runs.

We already have mature tools built on this pattern.

A music producer doesn’t type a sentence describing the sound they want. They listen, tweak filters, isolate a track, bring other parts in, and shape the output in motion.

A photographer doesn’t tell Lightroom “make this more warm and dramatic.” They slide contrast, exposure, black levels, saturation, and shadows until it feels right.

Even SQL work is rarely one-and-done. You run, inspect, adjust the join, tighten the filter, run again.

These tools assume that clarity arrives through interaction, not before it.

Agents should work the same way.

That means:

The plan is visible while the work is in progress

The user can adjust course at any step

The system pauses before doing anything irreversible

There are clear “knobs” for pace, cost, thoroughness, and risk
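The list above can be sketched as a small plan runner: every step is visible with a status, and anything marked irreversible waits for a confirmation callback before it runs. `Step`, `execute`, and the `confirm` hook are hypothetical names for this sketch. In a real interface, `confirm` would block on a user decision rather than return immediately.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    run: Callable[[], str]
    irreversible: bool = False
    status: str = "pending"   # "pending" | "done" | "skipped"


def execute(plan: list[Step], confirm: Callable[[Step], bool]) -> list[str]:
    """Run steps in order, asking for confirmation before irreversible ones."""
    log = []
    for step in plan:
        if step.irreversible and not confirm(step):
            step.status = "skipped"
            log.append(f"skipped: {step.name}")
            continue
        result = step.run()
        step.status = "done"
        log.append(f"done: {step.name} -> {result}")
    return log


plan = [
    Step("draft email", lambda: "draft ready"),
    Step("send email", lambda: "sent", irreversible=True),
]
# Here the user declines the irreversible step.
log = execute(plan, confirm=lambda step: False)
print(log)
print([(s.name, s.status) for s in plan])
```

The knobs for pace, cost, thoroughness, and risk could be passed in the same way, as explicit parameters the runner checks before each step.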

When the user stays in the loop, trust grows naturally.

Not because the agent is perfect, but because the collaboration stays recoverable.

This moves the experience from “I hope it understood me” to “I can guide it as we go.”

Which is the difference between delegation and partnership.

Make chat the fallback, not the foundation

Chat should stay, but as the escape hatch. The primary interface should guide structure:

Task templates with adjustable constraints

Inline refinement for partial outputs

Visual dashboards showing agent reasoning, progress, and tool use

You get clearer intent, faster iteration, and fewer “try again” loops.

The strategy tension: agents vs tools

There’s a growing tension between agents and tools. Everyone wants to be the agent that users talk to, not the API that another agent calls. But that’ll lead to silos that make TV streaming look joined up - dozens of half-capable systems that don’t talk to each other.

The tech will eventually solve interoperability, but the business incentives won’t vanish. Whoever controls the gateway to the user controls the relationship. The outcome: fractured experiences.

Designers and engineers should assume multi-agent ecosystems by default. Build for composition, not monopoly.

Building the trust stack

For agents to act for us, we need layers of confidence. Three stand out:

Start with data.

The user should be able to see what information the agent is using at any moment: what comes from the current screen, what was retrieved, and what the system is holding in memory. Sensitive context needs labels that carry through the workflow so the agent knows which information can be used where. This isn’t just privacy; it creates more reliable reasoning because the agent can distinguish stable facts from temporary notes from private context.

Then permissions.

Just because a model knows how to call a tool doesn’t mean it should. Tool access needs to be scoped, reversible, and adjusted as the task evolves. Not a one-time permission popup. A live control surface. For example: allow this file once, allow this API for the next ten minutes, revoke calendar access entirely. The user should feel like they can tighten or loosen trust without ceremony.

And finally actions.

Before the agent affects the outside world, it should show its intended steps. Not a philosophical explanation. A simple, inspectable action outline: here is what will happen. After execution, it should produce a clear report of what actually occurred. Ideally, with the ability to undo.
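A minimal sketch of that outline, report, and undo loop, assuming every action can be paired with an inverse. `Action`, `ActionRunner`, and the in-memory `calendar` list are stand-ins invented for this example. Real side effects are often harder to reverse than this.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    do: Callable[[], None]
    undo: Callable[[], None]


class ActionRunner:
    def __init__(self, actions: list[Action]):
        self.actions = list(actions)
        self.completed: list[Action] = []

    def outline(self) -> list[str]:
        """What will happen, shown before anything runs."""
        return [f"{i}. {a.description}" for i, a in enumerate(self.actions, 1)]

    def run(self) -> list[str]:
        """Execute every action and report what actually occurred."""
        report = []
        for action in self.actions:
            action.do()
            self.completed.append(action)
            report.append(f"done: {action.description}")
        return report

    def undo_all(self) -> None:
        """Reverse completed actions, most recent first."""
        while self.completed:
            self.completed.pop().undo()


calendar: list[str] = []  # stand-in for an external system
runner = ActionRunner([
    Action("add 'Design review'", lambda: calendar.append("Design review"),
           lambda: calendar.remove("Design review")),
    Action("add 'Retro'", lambda: calendar.append("Retro"),
           lambda: calendar.remove("Retro")),
])
outline = runner.outline()
report = runner.run()
after_run = list(calendar)
runner.undo_all()
print(outline, report, after_run, calendar)
```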

Without that triad, automation looks magical until it breaks - then it looks terrifying.
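To make the permissions layer of that triad a little more concrete, here is a sketch of a live control surface supporting the three examples mentioned: allow once, allow for ten minutes, and revoke. `PermissionBoard` and the resource strings are hypothetical. The clock is injectable so the expiry can be shown without waiting.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Grant:
    uses_left: Optional[int] = None      # None means unlimited uses
    expires_at: Optional[float] = None   # None means no expiry


class PermissionBoard:
    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._grants: dict[str, Grant] = {}

    def allow_once(self, resource: str) -> None:
        self._grants[resource] = Grant(uses_left=1)

    def allow_for(self, resource: str, seconds: float) -> None:
        self._grants[resource] = Grant(expires_at=self._clock() + seconds)

    def revoke(self, resource: str) -> None:
        self._grants.pop(resource, None)

    def check(self, resource: str) -> bool:
        """Return True if access is allowed now, consuming one use if limited."""
        grant = self._grants.get(resource)
        if grant is None:
            return False
        if grant.expires_at is not None and self._clock() >= grant.expires_at:
            del self._grants[resource]
            return False
        if grant.uses_left is not None:
            grant.uses_left -= 1
            if grant.uses_left == 0:
                del self._grants[resource]
        return True


now = [0.0]  # fake clock, in seconds
board = PermissionBoard(clock=lambda: now[0])
board.allow_once("file:report.pdf")
board.allow_for("api:search", seconds=600)

first_read = board.check("file:report.pdf")
second_read = board.check("file:report.pdf")
api_early = board.check("api:search")
now[0] = 601.0
api_late = board.check("api:search")
board.revoke("calendar")
calendar_ok = board.check("calendar")
print(first_read, second_read, api_early, api_late, calendar_ok)
```

Anything not explicitly granted is denied by default, which keeps tightening trust as cheap as loosening it.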

The near future: sensory, proactive, steerable

We’re still in the T9-texting phase of agent interaction. The touchscreen moment will come when agents can:

See what we see. Context from our screens, not just our words.

Guide next steps. Proactively surface options without spamming us.

Personalize responsibly. Adapt to user style without overfitting to user noise.

Make chat secondary. Keep it, but behind richer, multimodal controls.

When that happens, we’ll stop talking to agents and start working with them.

Why this matters right now

If we don’t rethink how humans interact with agents, we’ll keep scaling models that frustrate users faster. The future isn’t “better chat.” It’s “better interaction.”

That shift is already happening in the field. Builders are experimenting with visual context, live steerability, and permission-aware architectures. And that’s the spirit behind Agents in Production — our upcoming virtual conference hosted in collaboration with Prosus.

It’s still virtual, but not static - expect live demos, offbeat moments, and a format built to keep you awake and learning.

This article is adapted from a keynote delivered at PyData Amsterdam.

Thank you for being here, and I hope you have a wonderful day.

Dev <3

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

https://machine-learning-made-simple.medium.com/

My YouTube: https://www.youtube.com/@ChocolateMilkCultLeader/

Reach out to me on LinkedIn. Let’s connect: https://www.linkedin.com/in/devansh-devansh-516004168/

My Instagram: https://www.instagram.com/iseethings404/

My Twitter: https://twitter.com/Machine01776819
