The "AI is a Bubble" Narrative is Stupid, Wrong, and Dangerous

How the memes and surface-level analysis are distracting from Nvidia’s play to control the ecosystem.

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, and stay free from ads/hidden agendas, and it supports my crippling chocolate milk addiction. We run on a “pay what you can” model, so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

EDIT 10/28/2025: To clarify my stance on the bubble question, the difference between a bubble and a non-bubble isn’t utility (tulips still have utility) but the basis of expected returns. Essentially: do you buy an asset because you think you can sell it to someone else at a higher price (without it generating more value per se), or because you think the technology itself, and what it does, will become more useful over time?

With dot-com and real estate, the assets were useful, but a lot of the specific companies didn’t have a plan to capture value. Investment was made on the assumption that it was good for its own sake. That’s a bubble. I think there are companies doing this even in AI, but the biggest players with the deals have clear business cases (possibly faulty if you disagree with the assumptions, but still a clear set of cases).

Hope that clarifies why I think AI isn’t a bubble while dot-com was.

Enjoy the article

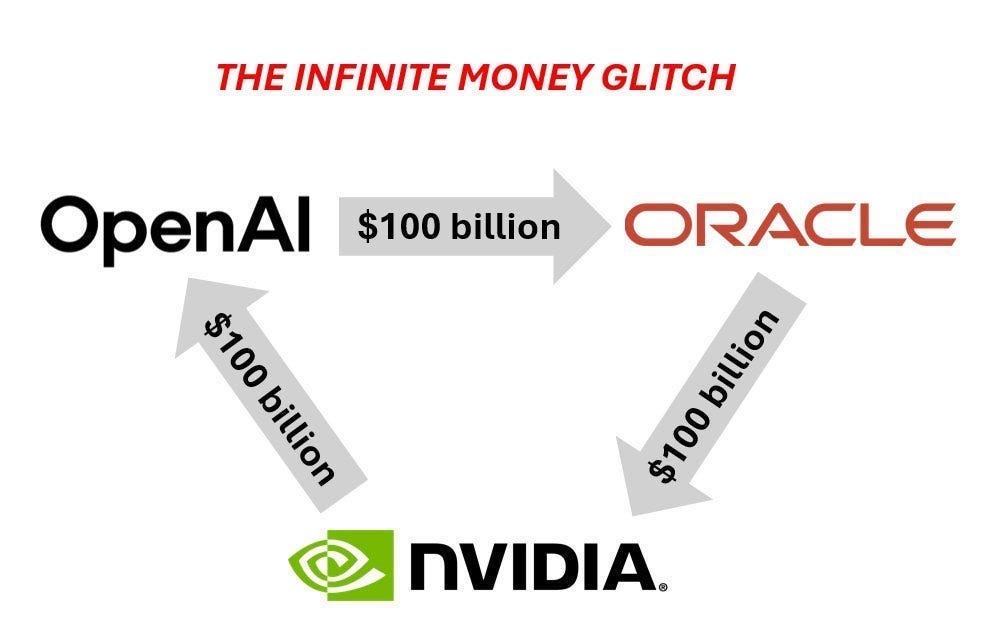

Recently, variants of this meme have been making the rounds on the internet, with people screaming about Ponzi schemes and bubbles.

Even Bloomberg got in on this, sharing an extended version:

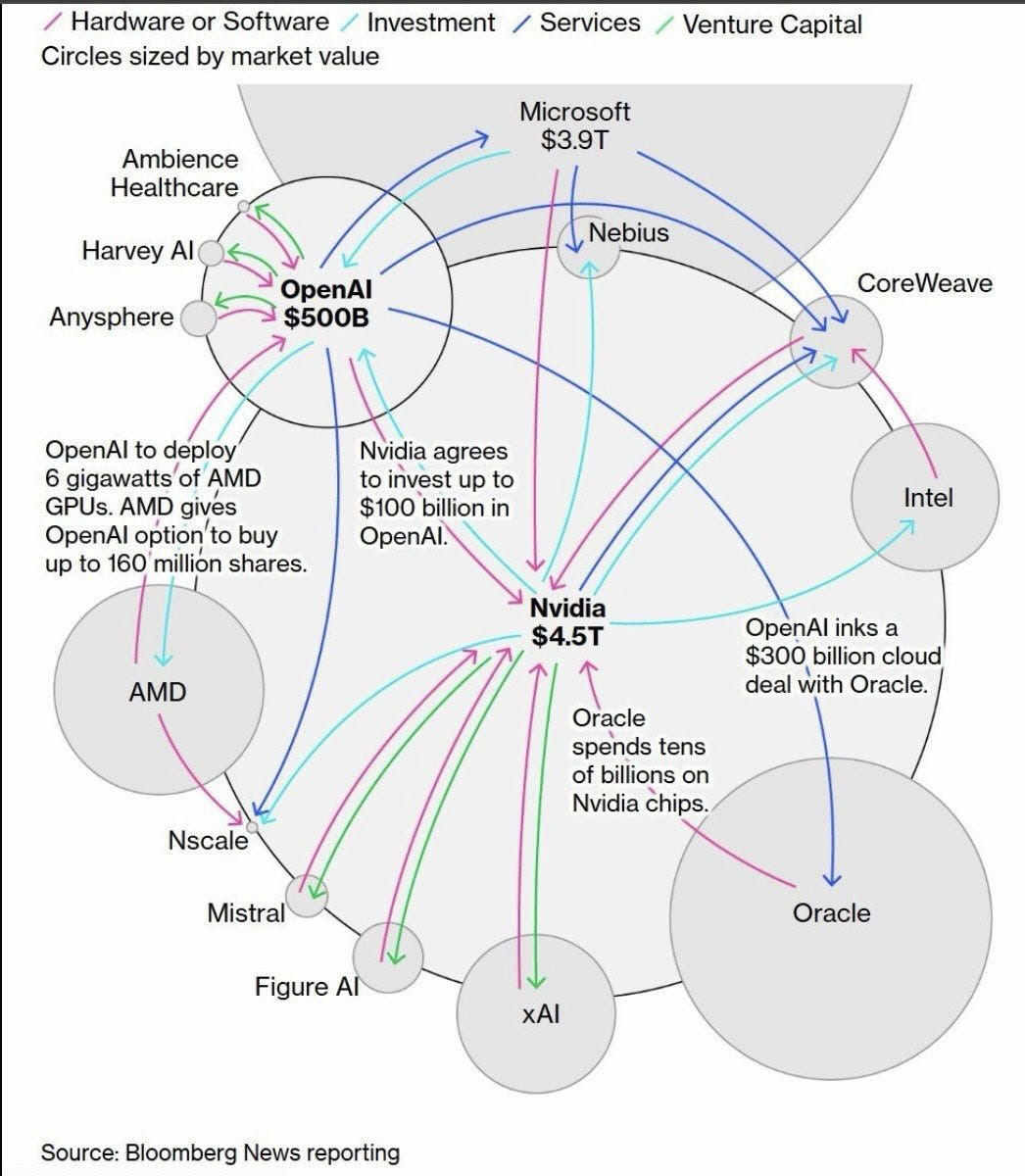

The situation is a bit… odd. OpenAI signs a $300B deal with Oracle. Oracle buys tens of billions in GPUs from Nvidia. Nvidia invests $100B back into OpenAI and GPU clouds like CoreWeave — who then buy more Nvidia chips. Microsoft intermediates the whole loop through Azure while being OpenAI’s largest backer. The money goes in a circle, the headlines amplify each deal, and the stock prices climb in lockstep.

On the surface, calling this a Ponzi makes perfect sense: circular investments creating synthetic demand, revenue recognized before end-customer ROI is proven, valuations lifted by financial engineering and vibes rather than fundamentals, vendor financing that rhymes uncomfortably with telecom’s late-90s implosion. The pattern looks like fraud — or at least like a bubble primed to pop.

But that only holds if you lack critical thinking skills, have a raging hate-boner for AI, and your understanding of economics is stuck in the 1800s. Yes, tech valuations are inflated, and yes, some of these credit structures will blow up. But the real story isn’t about a bubble fueled by greed; it’s about an attempt to dye the entire ecosystem in the colors of one tech philosophy.

In this article, we will:

Map the actual capital flows and show why “circular” doesn’t mean “fraudulent” — every functioning economy is circular; this is just industrial-scale vendor financing.

Dismantle the Ponzi comparison by showing what bubbles and Ponzis actually look like and why this pattern doesn’t match.

Reveal the mechanism everyone’s missing: how these investments serve as vertical integration via balance sheet, using capital to control standards, lock in ecosystems, and foreclose alternatives.

and more.

So let’s leave the Mercantilism back in the industrial era with Opiumed Babies, stop listening to people whose biggest claim to fame is bitching about other people’s work, and actually understand the deeper games Silicon Valley is playing.

Because the Ponzi critics think they’re being realists. They’re actually feeding narratives that will help one company force the ecosystem into a monopoly that will preemptively kill competition and force innovation through their (logic) gates.

And you are falling for it; all so you and your faux intellectual friends can circle jerk each other about having “common sense”.

Executive Highlights (TL;DR of the Article)

The $400B+ capital loop between Nvidia, OpenAI, Oracle, Microsoft, and CoreWeave looks circular but functions as vendor financing at industrial scale — capital deployed to lock in architectural commitment before alternatives mature. Nvidia invests in customers who buy Nvidia chips, creating revenue that funds more investment. This isn’t synthetic demand; it’s pre-buying the future.

Why Ponzi/bubble labels fail: Ponzis collapse to zero when belief breaks (no underlying business). Bubbles pop when prices detach from fundamentals (tulips, NFTs). Here, workloads are real, demand is measurable, and the infrastructure survives even if valuations correct. The worst case is a painful contraction, not implosion. This is vertical integration via balance sheet — standard industrial playbook (Amazon/AWS, railroads, electricity grids).

The actual mechanism: Every major AI deployment defaults to CUDA because (1) all frameworks optimize for it, (2) porting costs 6–18 months of engineering work, (3) researchers learn to think in CUDA primitives, (4) tooling/documentation/community knowledge compounds around one stack. The gap between Nvidia and alternatives widens daily. Alternative chips exist but face bootstrapping problems — software ecosystem is years behind, hyperparameter tuning doesn’t transfer, organizational inertia favors “if it works, don’t break it.”

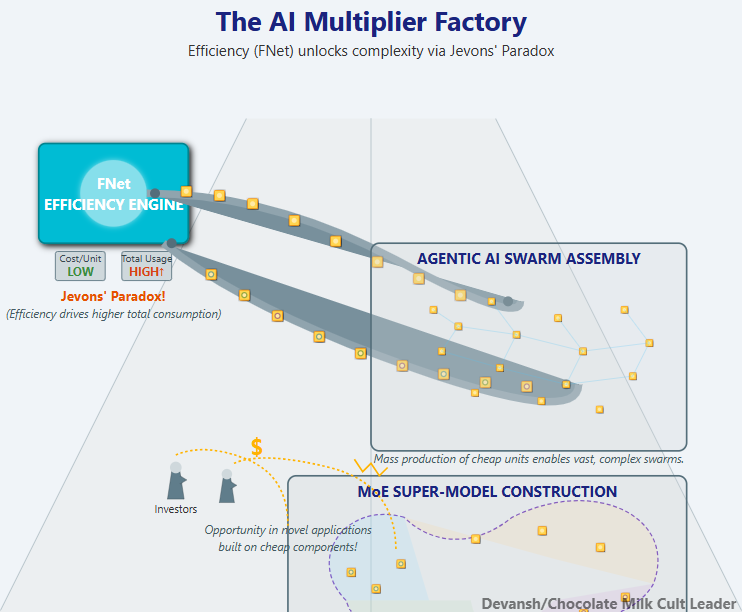

Why misframing matters: Critics watching for fraud/collapse miss the real threat — standards becoming power. Every hour debating stock valuations is an hour not discussing why one company’s architectural choices have become the cognitive primitives of an entire field. The bubble narrative serves Nvidia perfectly by keeping attention on the wrong metric.

What breaks consolidation: Not regulation (legislators won’t understand CUDA until the next layer consolidates; even if they did, lobbying/revolving doors corrupt enforcement). The gap is where Nvidia’s assumptions fail — architectures that don’t need dense matmul, computing paradigms where von Neumann bottlenecks matter more than GPU strengths, vertical specialization where general-purpose becomes the wrong frame. Nvidia’s aggressive ecosystem building signals their power isn’t solidified yet. Smart capital recognizes the opening.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

Section 1. The Circular Money Loop of AI

First, let’s all get on the same page with respect to the numbers and the structure of the investments.

1.1 The Primary Circuit

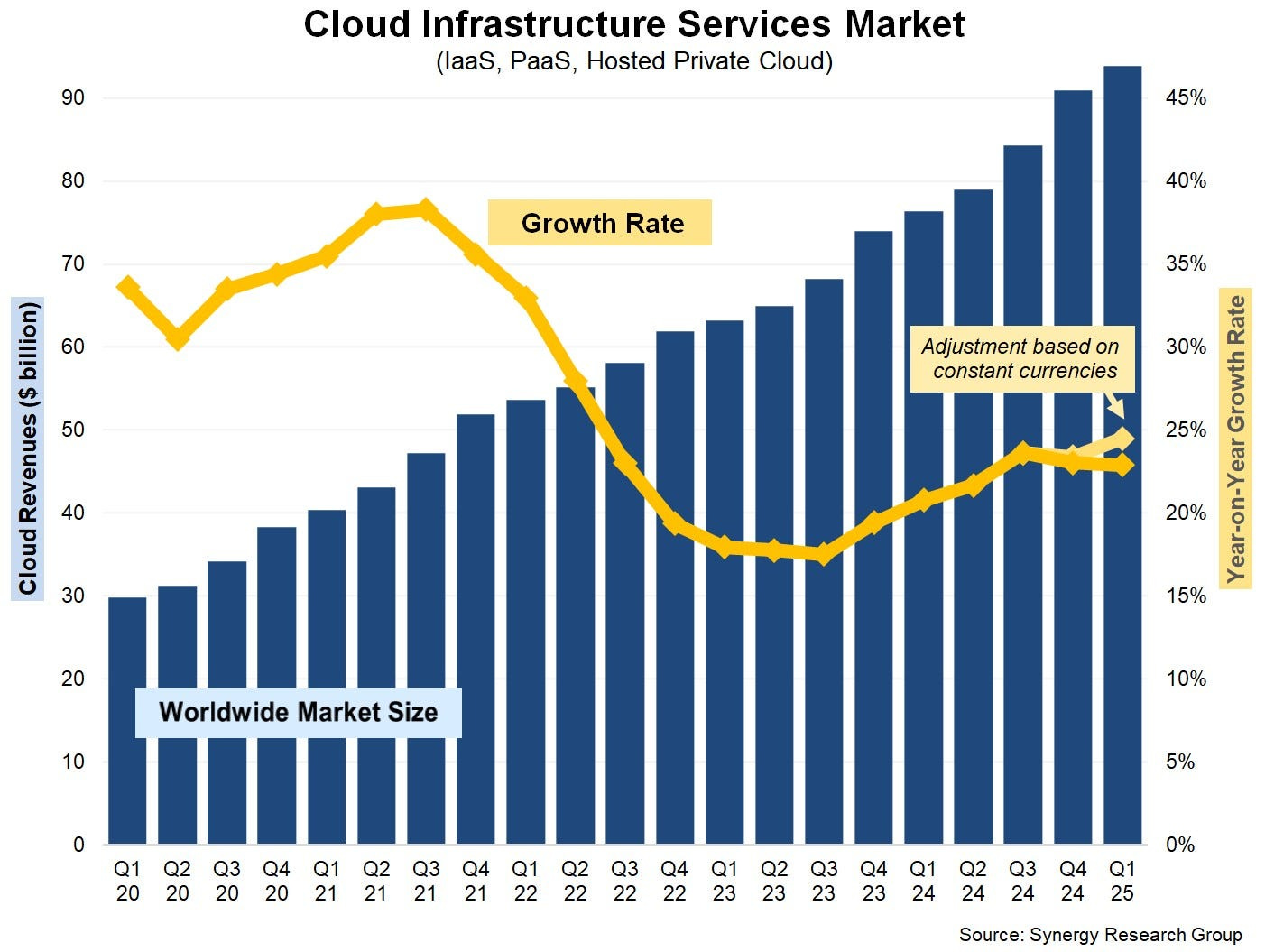

OpenAI → Oracle: $300B over 5 years (announced Sept 2025): This isn’t speculative “future revenue.” It’s a compute reservation — one of the largest enterprise IT deals ever signed. OpenAI raises $6.6B, then immediately locks that capital into a five-year commitment for cloud capacity through 2030.

Oracle → Nvidia / AMD: tens of billions in GPUs: To deliver, Oracle needs hardware. Lots of it. Tens of billions in MI450s, H100s, and their successors. New substations, cooling systems, and interconnects. The spending is front-loaded; the revenue drips out over time. It’s infrastructure first, cash later.

Nvidia → OpenAI / CoreWeave: up to $100B in investments and credit: And here’s the twist. Nvidia turns around and funds the very customers who buy its chips — OpenAI directly, and CoreWeave through equity and financing. CoreWeave then uses that money to build data centers packed with Nvidia hardware, which it sells back to OpenAI. AMD copies the playbook with warrants tied to OpenAI’s growth.

Money leaves Nvidia’s balance sheet and re-enters as revenue. This works well because tech companies (and really all companies) are valued on forward multiples: a 5x multiple means paying 5 USD today for every 1 USD of expected revenue, on the bet that the business will keep growing into that price. All of this rests on the assumption that new value can be created indefinitely. A more interesting question, in my opinion, is not about bubbles, or whether this assumption holds for AI specifically, but whether it is valid at all.
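To make the round trip concrete, here is a minimal Python sketch of one loop of vendor financing. Every input is a hypothetical assumption chosen for illustration (how much of the investment comes back as chip purchases, the vendor’s gross margin, the revenue multiple), not a reported figure for Nvidia or OpenAI, and valuing the company as revenue times a flat multiple is a deliberate oversimplification.

```python
from dataclasses import dataclass


@dataclass
class RoundTrip:
    recognized_revenue: float    # what the vendor books when the customer buys its chips
    gross_profit: float          # that revenue minus the cost of making the chips
    net_cash_cost: float         # investment minus the gross profit that flowed back
    implied_market_value: float  # revenue times a flat (hypothetical) multiple


def vendor_financing(investment, spent_on_vendor, gross_margin, revenue_multiple):
    """Vendor invests in a customer; the customer spends part of it back on the vendor."""
    revenue = investment * spent_on_vendor
    gross_profit = revenue * gross_margin
    return RoundTrip(
        recognized_revenue=revenue,
        gross_profit=gross_profit,
        net_cash_cost=investment - gross_profit,
        implied_market_value=revenue * revenue_multiple,
    )


# Hypothetical inputs: invest 100, all of it is spent on chips, 70% margin, 5x multiple.
result = vendor_financing(100.0, spent_on_vendor=1.0, gross_margin=0.7, revenue_multiple=5.0)
print(result)
```

With these made-up inputs, the vendor books 100 of revenue, recovers 70 of it as gross profit, is out only 30 in cash while also holding an equity stake, and, if the market really applied a flat 5x multiple, picks up 500 of implied value.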

Aside from these we end up with secondary players that have a large impact as well.

1.2 The Secondary Loops

Microsoft’s everywhere at once: They’ve poured roughly $13B into OpenAI, run its infrastructure through Azure, and even link up with Oracle so OpenAI’s traffic can route through Azure hardware. Investor, distributor, customer, landlord — four roles, one company. Kante levels of work rate here by Satya Nadella and his crew.

CoreWeave, the flywheel: Nvidia invests in CoreWeave. CoreWeave buys Nvidia GPUs. CoreWeave sells compute to OpenAI (and to Mistral, Anthropic, Cohere, whoever wants a slice). OpenAI pays — partly with funds that originated from Nvidia.

1.3 Quantifying the Circularity

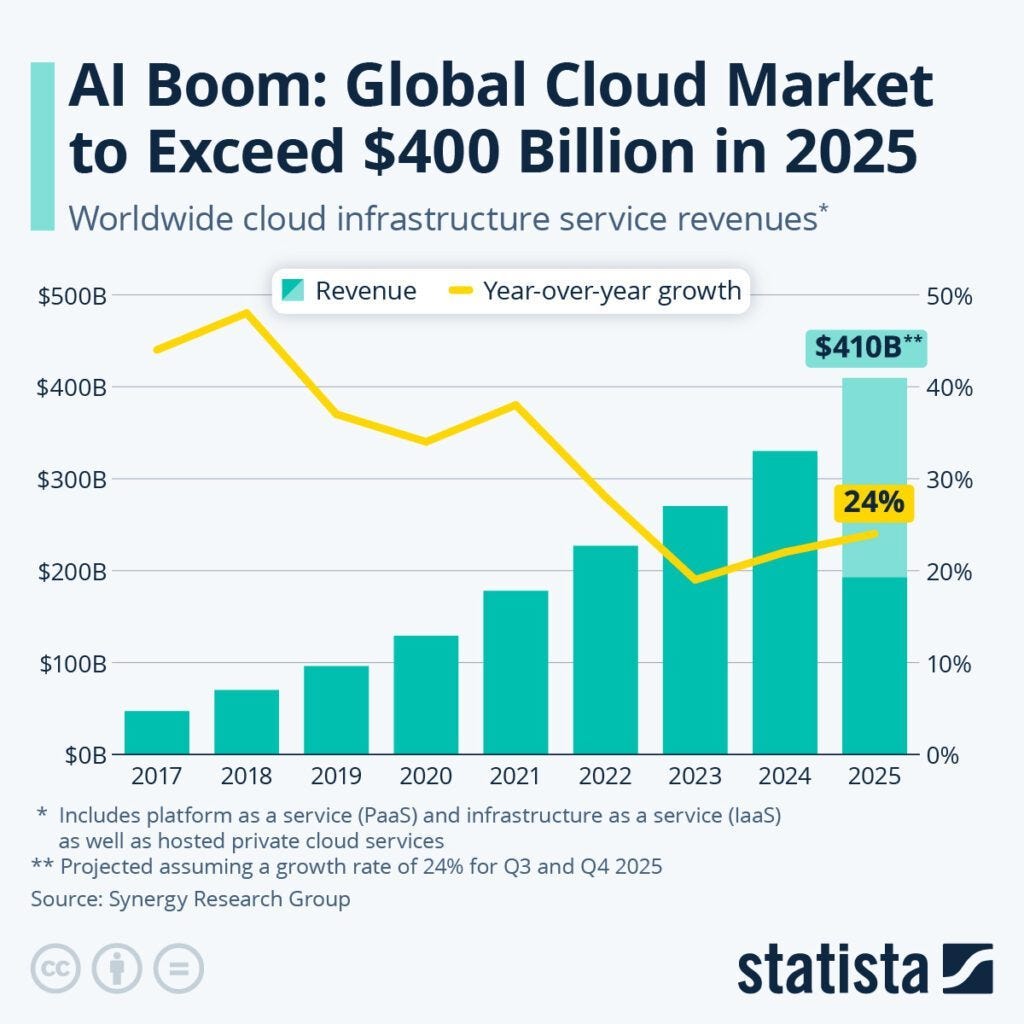

Total capital tied up in this loop: at least $400B over five years.

OpenAI → Oracle: $300B

Nvidia → OpenAI / CoreWeave: ~$100B

Microsoft → OpenAI: ~$13B

Other vendors (AMD, secondary clouds): $50B+ (speculative; the reports only say tens of billions)

Furthermore, OpenAI’s current revenue is dwarfed by its committed spend (the $300B Oracle deal alone equals 60% of OpenAI’s most recent $500B valuation). The gap is bridged by vendor credits, expected revenue, and more funding, much of it tied back to the same players. Nvidia’s “investment” into OpenAI exceeds OpenAI’s available cash. Which means: OpenAI is spending Nvidia’s money on Nvidia’s chips.

So we have the same five or six entities — OpenAI, Microsoft, Oracle, Nvidia, CoreWeave, AMD — transacting with each other, while the press treats every new contract like a fresh data point.

Another “nail in the coffin”? Global cloud infrastructure runs about $400B per year. This closed loop alone represents a material fraction of that — and it’s all the same dollars changing hands under new logos.
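The totals above can be checked with a few lines of Python. The figures are the ones listed in this section. The even five-year spread is a simplifying assumption, not how the contracts actually pay out: Microsoft’s stake was invested earlier, and the Oracle deal will not be drawn down evenly.

```python
# Commitments in the loop, in billions of USD, as listed in this section.
loop_commitments = {
    "OpenAI -> Oracle": 300,
    "Nvidia -> OpenAI / CoreWeave": 100,
    "Microsoft -> OpenAI": 13,
    "Other vendors (speculative lower bound)": 50,
}

total = sum(loop_commitments.values())  # 463

# Assumption: spread the total evenly over five years (crude).
years = 5
annual_loop = total / years  # 92.6

global_cloud_per_year = 400  # global cloud infrastructure spend quoted above
share_of_cloud = annual_loop / global_cloud_per_year

print(f"Total: ${total}B, per year: ${annual_loop:.1f}B, share of cloud spend: {share_of_cloud:.0%}")
```

Under that rough annualization, the loop works out to about $93B a year, roughly 23% of the global cloud infrastructure market.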

1.4 So Yes, It’s Circular … but that’s Deliberate

The critics aren’t wrong about the structure. The same companies are investor, vendor, and customer. Revenues are recognized before real end-user ROI is proven. The optics inflate everyone’s multiples.

But that’s not fraud. It’s a consolidation.

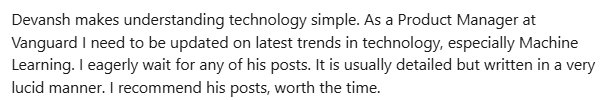

Every industrial empire starts like this: tight capital loops, balance-sheet interdependence, and pre-ordained standards. Rockefeller did it with pipelines. Amazon did it with AWS. Nvidia’s doing it with compute. The goal isn’t to fake demand; it’s to make dependence unavoidable. There are several reasons this is needed: it takes time for customers to adapt to a new technology, large shifts require equally major societal rewiring, and so on. No matter the era, technology, or industry, a certain amount of cheap supply has been required to kickstart demand for a new trend. In economics lingo, this builds on the Jevons Paradox (when something becomes cheaper to use, total usage can grow rather than shrink) combined with economies of scale: more usage leads to more production, which drives efficiency in production (lower cost per unit: what once cost 10 USD to produce now costs 1 USD). Somewhat unexpectedly, this means that lowering costs can lead to higher profit margins and better revenues, since you’re able to sell far more goods that are much cheaper for you to make.
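A minimal sketch of the demand side of this argument, assuming a constant-elasticity demand curve. That curve and the elasticity values are assumptions chosen for illustration, not measured properties of the AI compute market. When demand is elastic enough (elasticity above 1), cutting the price increases total spending; when it is inelastic, the same price cut shrinks the market.

```python
def quantity_demanded(price, scale=100.0, elasticity=1.5):
    """Constant-elasticity demand: quantity = scale * price ** -elasticity."""
    return scale * price ** (-elasticity)


def total_spend(price, elasticity):
    """Total market spending at a given unit price (price times quantity)."""
    return price * quantity_demanded(price, elasticity=elasticity)


for elasticity in (0.5, 1.5):
    before = total_spend(10.0, elasticity)  # unit price of 10 USD
    after = total_spend(1.0, elasticity)    # unit price drops to 1 USD
    print(f"elasticity={elasticity}: spend {before:.1f} -> {after:.1f}")
```

With elasticity 1.5, the tenfold price cut grows total spend from about 31.6 to 100; with elasticity 0.5, it shrinks from about 316.2 to 100. Betting on cheap early supply only pays off if demand behaves like the first case.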

Triggering this magical paradox requires that someone take the original risk to build infrastructure, whether that’s entrenched players (the way Nvidia is doing so) or the government.

So the money loops as industrial blocs consolidate. The question isn’t whether that’s happening. The question is what kind of power structure the loop is creating.

Because bubbles pop, but architectures persist. Distinguishing between them requires looking at the details. This is where a lot of you have been using the wrong categories and mental models for assessing where we are with AI. But I’m not going to be too mean about this, because you are a dumb dumb who didn’t know any better. Luckily, you have me to fix this (really, where would you be without me?).

Section 2: Why “Ponzi” and “Bubble” Are the Wrong Words for this Mess

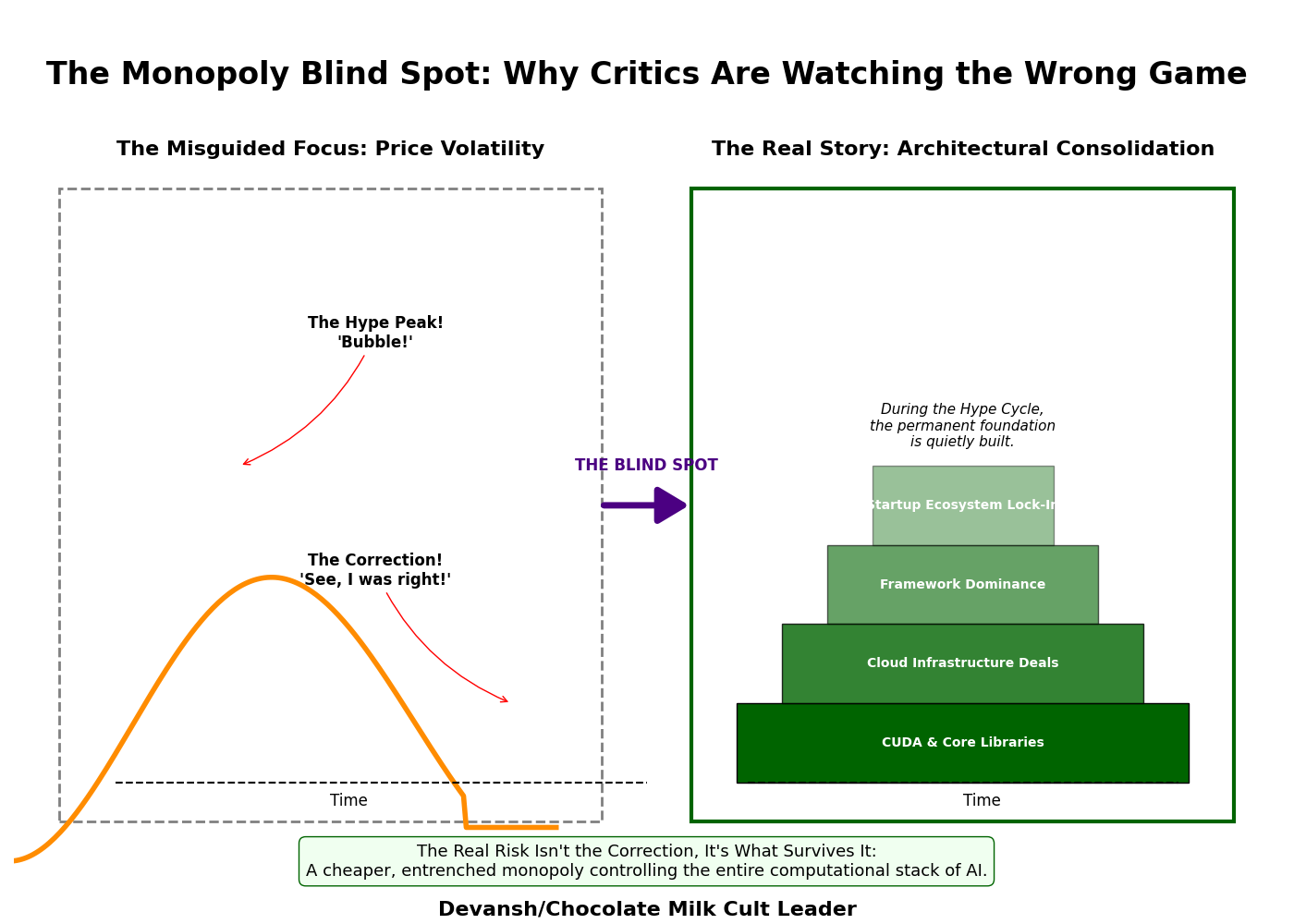

The circular structure is real; everyone can see it. The real question is what kind of system this actually is, because the label you use decides the frame through which you interpret it. Call it a Ponzi, and you expect collapse. Call it a bubble, and you wait for gravity to fix it. But if it’s neither — if it’s something more deliberate — you’re missing the entire point of how power now compounds in AI.

A) What a Ponzi Actually Is

The structure of a Ponzi Scheme is simple:

No underlying business — returns come from new investors, not real operations.

Exponential dependency — requires endless inflows of fresh capital to survive.

Built on deception — early investors told profits come from activity that doesn’t exist.

Why AI doesn’t fit the Ponzi Scheme mold:

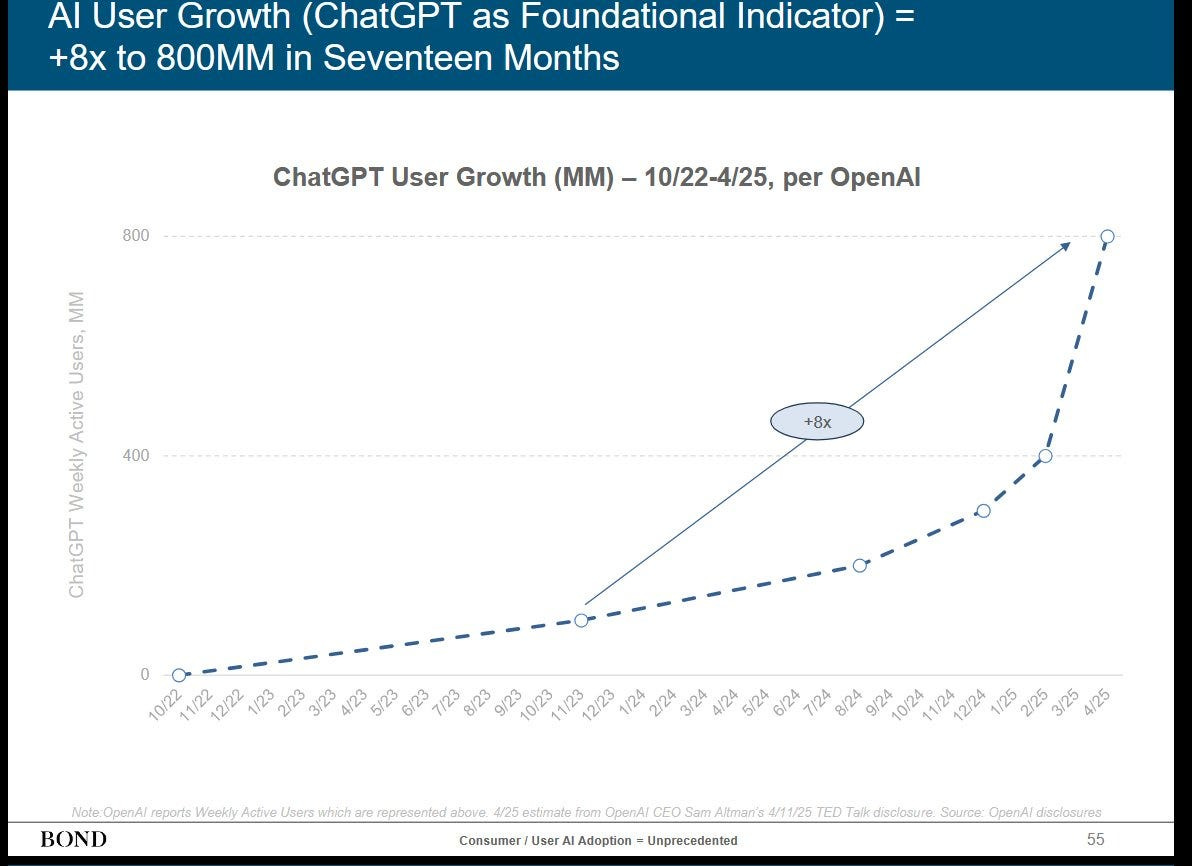

Nvidia generated $64.1 billion in net cash from operating activities (a 128.16% increase from 2024): real products, real buyers. Oracle runs physical data centers that serve compute every day. OpenAI has millions of paying users. All the major AI investors are massively profitable, with clearly defined reasons to use AI. The circularity amplifies genuine cash flows; it doesn’t fabricate them.

If the hype cycle ended tomorrow, contracts would shrink, utilization would fall, and valuations would reset. Entire startups might get wiped out. But the core doesn’t vanish — data centers still need to run inference, AI tools will still hit a ton of usage, and researchers will still train models. The system would contract to fit real demand, not implode to zero.

That’s the difference: Ponzis collapse to zero because nothing was real; overvalued systems fall back to fundamentals. Painful, yes. Fraudulent, no.

B) What a Bubble Actually Is

The anatomy of a bubble is very interesting:

Price detached from fundamentals — value driven purely by resale expectation. At their peak, Tulips, NFTs, my (very successful) attempts to sell feet pics (my own; ethically sourcing good feet pics is actually really hard) were not priced on utility — how much someone is willing to pay to use/own something — but on the greater fool theory: someone somewhere will buy this same thing from you at a higher price. Even the 2008 real estate collapse was triggered because houses were sold as an asset that would never go down in value.

Collapse triggered by disbelief: when faith breaks, so does the market.
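One standard way to make “price detached from fundamentals” concrete is to compare the price paid with the present value of the cash flows the asset is expected to generate (a discounted cash flow estimate). The cash flows, discount rate, and prices below are hypothetical. The gap between price and present value is the part that can only be justified by expecting someone else to pay more later.

```python
def present_value(cash_flows, discount_rate):
    """Discount yearly cash flows (year 1, 2, ...) back to today."""
    return sum(cf / (1 + discount_rate) ** year
               for year, cf in enumerate(cash_flows, start=1))


def resale_premium(price, cash_flows, discount_rate):
    """Share of the price NOT covered by the asset's own expected cash flows."""
    fundamental = present_value(cash_flows, discount_rate)
    return max(0.0, (price - fundamental) / price)


# Hypothetical asset: pays 10 a year for 5 years, discounted at 10%, bought for 100.
fundamental = present_value([10.0] * 5, 0.10)
premium = resale_premium(100.0, [10.0] * 5, 0.10)
print(f"fundamental value: {fundamental:.2f}, resale premium: {premium:.0%}")

# A tulip-style asset with no cash flows at all is 100% resale premium.
tulip_premium = resale_premium(10.0, [0.0] * 5, 0.10)
```

In this example roughly 62% of the price rests on resale expectations, which is the greater fool logic described above; an asset whose price sits close to its present value may be expensive, but it isn’t a bubble in this sense.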

Why AI doesn’t fit the Bubble Argument:

Workloads are real and measurable. Training runs cost millions because they must; enterprises deploy LLMs that save time, automate work, and make money. You could argue that investors are massively subsidizing AI inference costs, and as companies are forced to care about profits, they will struggle to keep customers happy while still having low costs (case in point, Lovable, which tried to switch to cheaper models to save on costs, only to watch their performance collapse).

But again, this is a very different argument. It’s one thing to say that AI is being massively subsidized and it will never be financially sustainable. It’s another to claim that everyone buying AI is hoping to resell it to someone else.

Even if AGI hype dies, demand for high-performance compute stays: HPC, gaming, simulation, rendering, scientific workloads, and many apps will continue to use AI APIs. One need look no further than the explosion in tokens processed by Gemini to see that there is very real demand for AI.

In the worst case, prices will rise and token usage will fall. But it’s very hard to imagine usage going to zero.

So even in the worst case, valuations are inflated; forward multiples assume growth curves that may not hold. Some capacity will sit idle. But there’s a floor — Nvidia’s chips enable computation that governments, enterprises, and researchers fundamentally require.

Bubbles pop to zero. Ecosystems correct to utility. This is clearly much more of the latter than the former.

C) The Pattern Beneath It

What’s actually happening is vertical integration via balance sheet.

The playbook has been around for millennia. Sometime in the dark ages, Amazon built AWS for itself, then sold the overflow. Realizing that cloud would need much more demand, they funded and gave benefits to startups to guarantee more AWS consumption. Startups were the correct bet, since they would have fewer hassles betting on something new (cloud wasn’t well known back then, so enterprises would have been more hesitant). Once the new generation of cloud-native startups came up, there was proof that cloud was the real deal, and selling to enterprises became much easier.

Nvidia’s doing the same with compute — funding customers who buy GPUs, then embedding its stack so deeply that alternatives become economically irrational. The impacts of this are era-defining, so we will be touching upon this in a bit. First, let’s close the loop on the incorrect taxonomy.

D) Why the Mislabeling Matters

If this were a bubble, you’d let it pop. If it were a Ponzi, you’d prosecute. But if it’s balance-sheet consolidation, neither collapse nor regulation restores competition. Critics yelling “fraud” are waiting for the crash to prove them right, missing that even if Nvidia’s stock dropped 40%, the power dynamic wouldn’t change.

Oracle might eat idle capacity. CoreWeave might get Yamcha’d. But CUDA remains the default, and every framework still depends on Nvidia’s libraries. That’s not a correction — it’s a discount on monopoly.

Bubbles burst. Ponzis implode. But monopolies — especially ones built out of financial recursion — don’t die on schedule. They stabilize. They persist. And that persistence is exactly what makes this structure dangerous.

Monopolies, industrial consolidation, and huge power plays? Sounds a bit tin-foily. But watch me cook, and we’ll see the full picture.

Section 3: Why Jensen Huang Loves the Bubble Narrative

While critics scream about circular deals, Nvidia’s building something much more interesting: a computational monopoly that doesn’t need to prevent competition — it just makes competition seem like the irrational move to all the educated hacks (MBAs) that end up eventually running companies.

This isn’t speculation. It’s not even particularly hidden. But the bubble panic has everyone watching stock prices instead of ecosystem architecture.

3.1 What Nvidia Is Actually Buying

When Nvidia invests $100B in OpenAI and CoreWeave, it’s not buying revenue — it already has that. It’s not buying market share — it already dominates.

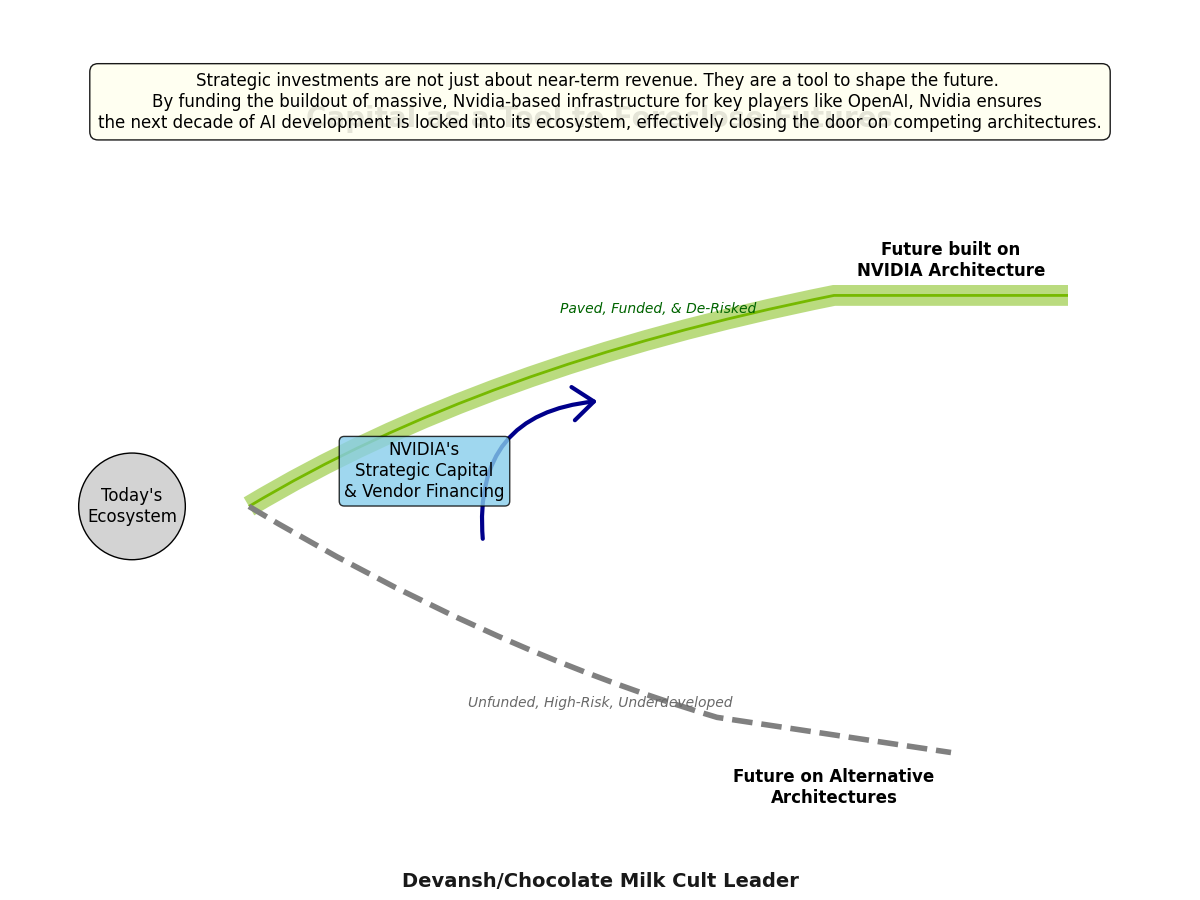

It’s buying time and architectural commitment.

Every dollar that flows through this loop entrenches CUDA as the default computational substrate for AI. OpenAI optimizes for Nvidia. CoreWeave standardizes on Nvidia. Once this happens, researchers continue to default to assuming Nvidia primitives when they design algorithms. The money doesn’t just move in circles — it locks the ecosystem into a single vendor’s architecture before alternatives can mature.

This is vendor financing 101. Google really should take notes because they’re watching Nvidia play the game that they’ve been trying to nail for a decade now.

3.2 The CUDA Moat (And Why It’s Deeper Than You Think)

Here’s what people miss when they focus on chip specs and performance benchmarks:

CUDA isn’t just software. It’s the lingua franca of GPU computing.

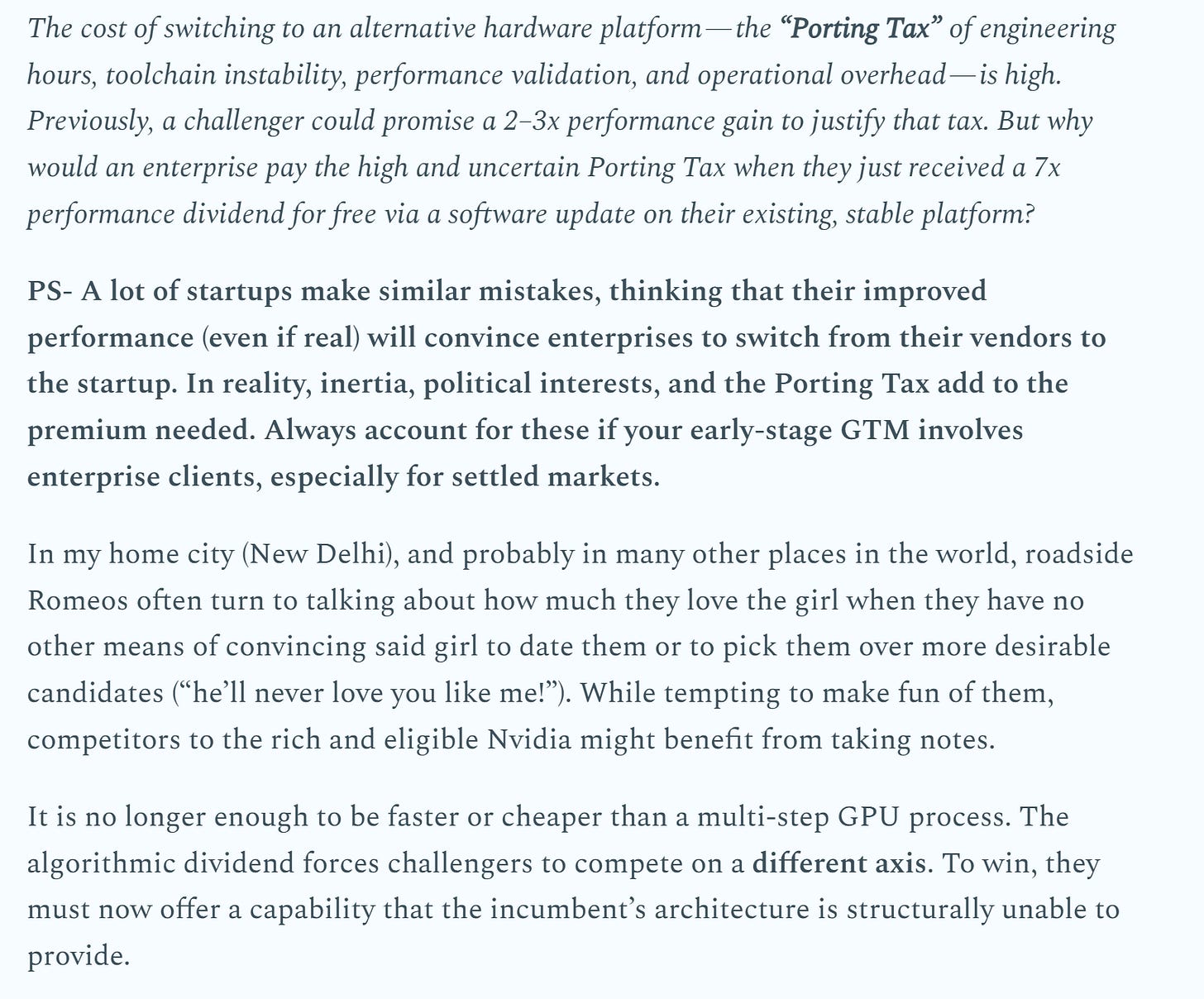

Every major ML framework — PyTorch, TensorFlow, JAX — is optimized for CUDA. The performance layer lives in proprietary libraries: cuDNN for neural networks, cuBLAS for linear algebra, NCCL for multi-GPU communication. Developers don’t learn “how to do parallel computing” — they learn CUDA primitives. Porting to non-Nvidia hardware is really hard.

Example: Training a large language model on Nvidia GPUs requires tuning hyperparameters around specific memory hierarchies, communication patterns, and numerical precision quirks. At the last levels of engineering, you’re essentially optimizing to account for Nvidia’s architectural choices. Move that same model to AMD or custom ASICs? Those hyperparameters don’t transfer. The model architecture might not even be optimal anymore. You’re not switching vendors; you’re starting over.

This is something we call the porting tax, and too many startups underestimate how expensive it can be.

Most organizations won’t do that. Not because they love Nvidia, but because “if it works, don’t break it” is the rational business decision when the alternative is months of engineering work with unclear payoff.
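A back-of-the-envelope way to see why is to compute a payback period. This is a hypothetical cost model: the 6 to 18 month porting estimate comes from the summary at the top, but the team size, salary, and hardware savings are made-up placeholders, and the model ignores risk, opportunity cost, and the fact that the incumbent keeps improving while you port.

```python
def porting_payback_months(port_months, engineers, monthly_cost_per_engineer,
                           monthly_hardware_savings):
    """Months of hardware savings needed to recoup a one-off porting effort.

    All inputs are hypothetical placeholders, not measured figures.
    """
    porting_cost = port_months * engineers * monthly_cost_per_engineer
    if monthly_hardware_savings <= 0:
        return float("inf")  # the port never pays for itself
    return porting_cost / monthly_hardware_savings


# Hypothetical: 10 engineers at $25k/month each, saving $100k/month on hardware.
for port_months in (6, 18):
    payback = porting_payback_months(port_months, 10, 25_000, 100_000)
    print(f"{port_months}-month port pays back after {payback:.0f} months of savings")
```

With these placeholder numbers, a 6-month port needs 15 months of savings to pay back and an 18-month port needs 45, on top of the porting time itself. That is the “unclear payoff” a risk-averse manager is weighing.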

Especially when their leadership is run by MBAs. Call me a hater, but I’ve never seen a single tech company succeed because they hired more MBAs.

Not one; prove me wrong, I dare you.

Now back to our regularly scheduled programming.

3.3 The Ecosystem Feedback Loop

Here’s the recursion that makes this self-reinforcing:

More Nvidia deployment → more optimized software → more Nvidia deployment.

When 90%+ of AI infrastructure runs on Nvidia, where does optimization effort go? CUDA. PyTorch’s developers optimize for what their users actually run — which is Nvidia. Library maintainers focus on the hardware that matters — which is Nvidia. Researchers design algorithms that work well on the tools they have — which are Nvidia-shaped.

This is also why Nvidia is pouring money into Open Source projects and the American DeepSeek project. They don’t really care about innovation or uplifting American supremacy in AI. They want all innovation points to flow through Nvidia.

This isn’t a conspiracy. It’s just efficient resource allocation by everyone involved.

But the result? Alternative architectures face a bootstrapping problem. Even if AMD releases chips that are faster and cheaper, the software ecosystem is years behind. Developers would need to:

Learn new APIs and primitives

Rewrite optimized kernels

Port existing codebases

Re-tune hyperparameters and model architectures

Build tooling and debugging infrastructure

All while Nvidia’s ecosystem continues improving.

The gap isn’t static — it’s widening. Every month that 90% of researchers optimize for CUDA is another month alternative platforms fall further behind in software maturity.
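A toy simulation shows why the gap widens even when nobody is actively working against the challenger. It assumes, purely for illustration, that each month a fixed pool of engineering effort is split in proportion to each platform’s current software maturity (a stand-in for deployment share). The starting values are hypothetical, not measurements of CUDA or any alternative stack.

```python
def simulate_ecosystem(months, incumbent=9.0, challenger=1.0, effort_per_month=10.0):
    """Return the maturity gap (incumbent minus challenger) after each month.

    Effort is allocated in proportion to current maturity, so the platform
    that is already ahead receives most of the new optimization work.
    """
    gaps = []
    for _ in range(months):
        incumbent_share = incumbent / (incumbent + challenger)
        incumbent += effort_per_month * incumbent_share
        challenger += effort_per_month * (1 - incumbent_share)
        gaps.append(incumbent - challenger)
    return gaps


gaps = simulate_ecosystem(months=6)
print([round(g, 1) for g in gaps])
```

With these inputs the incumbent keeps a constant 90% share of effort, yet the absolute gap grows by 8 units every month. In this toy model, any starting share above 50% widens the gap, so the challenger only catches up if something outside the loop shifts effort its way.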

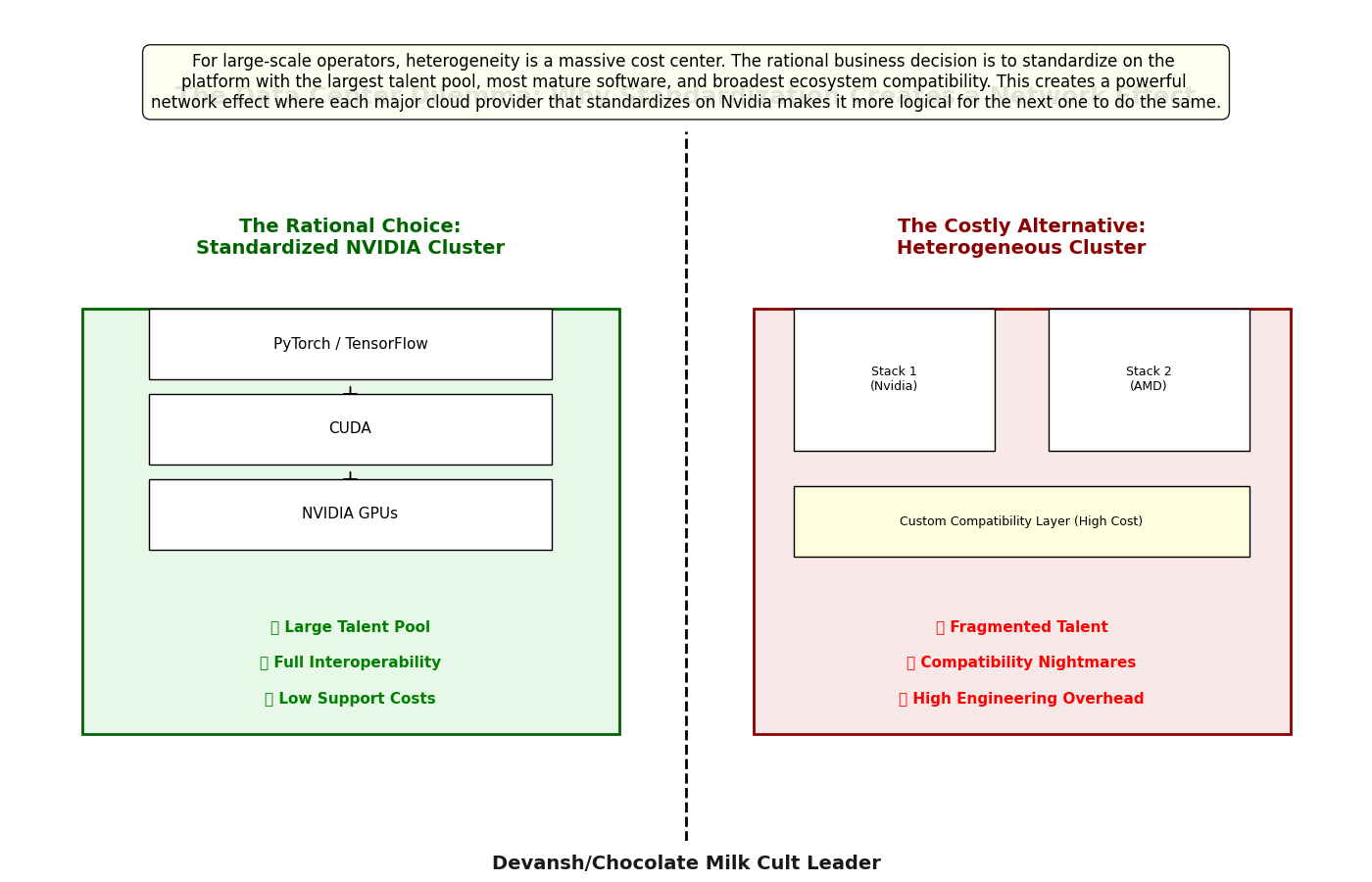

3.4 Network Effects in Infrastructure

Data center operators face the same dynamic. If you’re Oracle or CoreWeave building massive GPU clusters, standardizing on Nvidia gives you:

Interoperability: Customers can move workloads between clusters without code changes

Talent pool: Every ML engineer knows CUDA; proprietary alternatives require retraining

Ecosystem compatibility: All the tools, libraries, and frameworks just work

Lower support costs: Established best practices, extensive documentation, and a large community all make fixing your problems much easier.

Running a mixed fleet (some Nvidia, some AMD, some custom) means:

Maintaining separate software stacks

Training engineers on multiple platforms

Dealing with compatibility issues when customers want to move workloads

Supporting edge cases that the community hasn’t solved

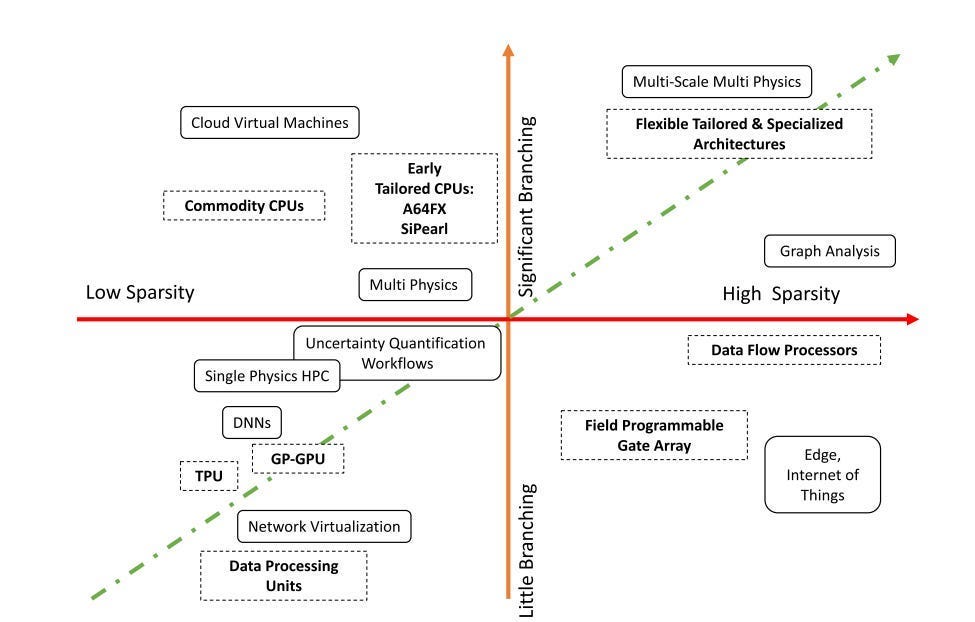

And this is when we’re dealing with GPU-only systems. I’m actually very bullish on different kinds of chips coming along and wrecking GPUs because of GPUs’ various computational and design limitations.

But pragmatically speaking, ASICs are a tough, tough bet to make. Make them too weird, to really crank up differentiation (specializing for niche cases), and they’re too niche to be used on any problem at scale (given how expensive hardware is, you likely won’t even recoup manufacturing costs).

Bring them into the same fold as GPUs, and then you’re left with the porting tax mentioned earlier. Even if Cerebras is 10x better at inference, does that change much when there are 1000x fewer engineers who can really work with the chip, and the questions asking how to fix an error have 10 views six months after they were posted?

I’m personally excited about ASICs and heterogeneous computing because I think there’s more to like than not (and going against Nvidia and GPUs scratches the same itch that made me accept short-notice, open-weight underground cage fights when I was competing), but even the contrarian in me can see why Daddy Jensen has fuck-you money and massive backlogs on orders.

(If you’re curious about what I think the next AI stack will look like, we broke this down here.)

All that to say that from a business perspective, heterogeneity is a cost center. Standardization is efficiency. And when one vendor dominates mindshare, the efficient choice is obvious.
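A minimal cost sketch of that claim, assuming (hypothetically) that every platform in a fleet carries a fixed overhead for its own software stack, training, and support, on top of the per-GPU price. The figures are placeholders in thousands of USD, not vendor pricing.

```python
def fleet_cost(gpus_by_platform, unit_cost, platform_overhead):
    """Total fleet cost: per-GPU spend plus a fixed overhead for every platform in use."""
    total = 0.0
    for platform, count in gpus_by_platform.items():
        if count == 0:
            continue  # a platform you don't deploy costs nothing to support
        total += count * unit_cost[platform] + platform_overhead
    return total


# Hypothetical prices (thousands of USD): the alternative chip is ~17% cheaper per unit.
unit_cost = {"incumbent": 30.0, "alternative": 25.0}
overhead = 5_000.0  # separate software stack, training, and support per platform

uniform = fleet_cost({"incumbent": 1000, "alternative": 0}, unit_cost, overhead)
mixed = fleet_cost({"incumbent": 800, "alternative": 200}, unit_cost, overhead)
full_switch = fleet_cost({"incumbent": 0, "alternative": 1000}, unit_cost, overhead)
print(f"uniform: {uniform:,.0f}  mixed: {mixed:,.0f}  full switch: {full_switch:,.0f}")
```

Here the mixed fleet costs 39,000 against 35,000 for the uniform one: the 1,000 saved on cheaper chips is swamped by the second platform’s overhead. With these numbers no partial move pays off; only a complete switch (30,000) comes out ahead, which is exactly the all-or-nothing bet most operators avoid.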

This is how architectural lock-in compounds: individual rational decisions by researchers, cloud providers, and enterprises all independently arrive at the same conclusion — stick with Nvidia. Not because alternatives are impossible, but because they’re too unstable and unreliable given the current ecosystem.

3.5 Capital as Moat Construction

Now layer the vendor financing back in.

Nvidia invests in customers → they commit to buying Nvidia chips → revenue funds more investment → deeper ecosystem lock-in → alternatives fall further behind.

But there’s a more subtle effect: Nvidia’s capital pre-empts alternative architectures by ensuring near-term capacity is Nvidia-shaped.

When OpenAI signs a $300B deal with Oracle for Nvidia-based capacity, that’s compute locked in through 2030. Every model trained on that infrastructure will be optimized for Nvidia. Every researcher working at OpenAI will become more fluent in CUDA. Every partner integrating with OpenAI will build tooling that assumes Nvidia.

The circular deals aren’t creating fake demand — they’re foreclosing alternative futures by making sure the immediately available compute all points in one direction.

3.6 Why This Is Different From Normal Competition

In normal markets, monopolies get disrupted when competitors offer better products at lower prices. The superior alternative wins.

Here, superiority isn’t sufficient. You need:

Better hardware (achievable: AMD’s getting there, and ASICs already beat GPUs on some metrics)

Equivalent software ecosystem (requires years of engineering)

Critical mass of developers (chicken-and-egg: need users to attract developers, need developers to attract users)

Willingness of organizations to bear switching costs (economically questionable, especially when you have cowardly MBAs and stupid Product Managers calling the shots)

And you need all four simultaneously, while competing against an incumbent that’s actively funding the ecosystem to deepen the moat.

Microsoft didn’t keep Windows dominance through better technology. They kept it through ecosystem lock-in — applications, device drivers, enterprise IT knowledge, training programs. “No one ever got fired for buying Microsoft” wasn’t about technical merit; it was about risk mitigation through conformity.

The difference? Microsoft faced antitrust scrutiny. Nvidia faces bubble panic.

3.7 The Real Strategic Play

What Nvidia’s actually building isn’t a customer base — it’s the standard. The baseline assumption about what “AI compute” means.

Standards are power.

Once everyone thinks in CUDA primitives, alternatives aren’t just technically different — they’re cognitively foreign. The barrier isn’t performance; it’s that the entire field has been trained to think in one specific architectural language.

“Don’t you see that the whole aim of Newspeak is to narrow the range of thought? In the end we shall make thought-crime literally impossible, because there will be no words in which to express it. Every concept that can ever be needed will be expressed by exactly one word, with its meaning rigidly defined and all its subsidiary meanings rubbed out and forgotten. . . . The process will still be continuing long after you and I are dead. Every year fewer and fewer words, and the range of consciousness always a little smaller. Even now, of course, there’s no reason or excuse for committing thought-crime. It’s merely a question of self-discipline, reality-control. But in the end there won’t be any need even for that. . . . Has it ever occurred to you, Winston, that by the year 2050, at the very latest, not a single human being will be alive who could understand such a conversation as we are having now?”

-1984, George Orwell. What can I say about this book that would do its brilliance justice? We discussed this phenomenon here.

And the circular financing? That’s the mechanism. Use capital to ensure every major AI deployment optimizes for your architecture. Lock in the standard before anyone realizes the game is over.

In other words, the bubble critics are watching stock prices. They’re missing the many ways Nvidia is setting up their chokehold on innovation. Not surprising, what else can we expect from people who build their name off other people’s work?

But still sad.

By the time valuations correct and deals get renegotiated, the architecture will be set. CUDA will be the default. Alternative approaches will be relegated to research labs and niche applications.

That’s not fraud. That’s not speculation. That’s just what successful monopolization looks like when it’s happening in real time. This is IBM mainframes all over again, except that Nvidia has a much more active venture arm and much deeper lock-in than IBM did at its peak.

Conclusion: Now What?

The bubble critics are giving Nvidia exactly what they need: distraction. Every thread about circular accounting is a thread not about ecosystem lock-in. Every chart predicting the crash is attention not spent on why CUDA became the only language anyone speaks.

You’re past that now. You see the actual mechanism — vendor financing buying architectural commitment before alternatives can mature. Capital is deployed to make the convenient choice and the rational choice point the same direction until there’s no other direction left.

So what stops it?

Regulation won’t. By the time legislators understand what CUDA is, the next layer has already consolidated. Antitrust thinks in terms of market share and consumer prices — it has no framework for “primitives become power” or “architectural lock-in narrows what’s thinkable.” The Senate hearing will happen in 2028, Nvidia will promise to play nice, and nothing will change because the people writing the rules don’t understand the game being played.

And even if they did, revolving doors and lobbying donations are phenomenal ways to make friends. There’s no guarantee that regulation won’t tighten Nvidia’s lock-ins.

Back to our question, what actually matters?

It’s an open question, but I think this will likely require a combination of software, hardware, and new computing paradigms. Look where GPUs are weak, look where von Neumann computing fizzles, and look for areas that let us do much more with less.

I think this is a great time to build against Nvidia’s plays because other major competitors still have huge vested interests in pushing back against Nvidia’s lock-in. Bubble or not, AI has more open questions than answers, and I think the AI world can still be remolded to fit our visions. Nvidia would not be as aggressive about building its ecosystem if its power were solidified. A lot of smart money recognizes this, and the major capital figures being thrown around are proof of that.

I look forward to seeing what you’ll do.

Thank you for being here, and I hope you have a wonderful day,

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium:

https://machine-learning-made-simple.medium.com/

My YouTube: https://www.youtube.com/@ChocolateMilkCultLeader/

Reach out to me on LinkedIn. Let’s connect: https://www.linkedin.com/in/devansh-devansh-516004168/

My Instagram: https://www.instagram.com/iseethings404/

My Twitter: https://twitter.com/Machine01776819
