The Low-Tech Revolution: Why AI Will Transform the Industries Tech Forgot [Markets]

Restaurants, trucking fleets, farms, and construction firms were left behind in the software era. AI finally changes the math

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and supports my crippling chocolate milk addiction. We run on a “pay what you can” model—so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

Much of the attention around AI and how it will change industries has focused on the pillars of the digital economy — creating slop content and using AI to make selling shit easier. Some of the more woke have looked beyond and thought about how AI might be used in personalized medicine, clean energy, and other socially useful things.

However, there is one space AI will impact that almost no one talks about. And I think AI’s impact on that space will be extremely interesting to study.

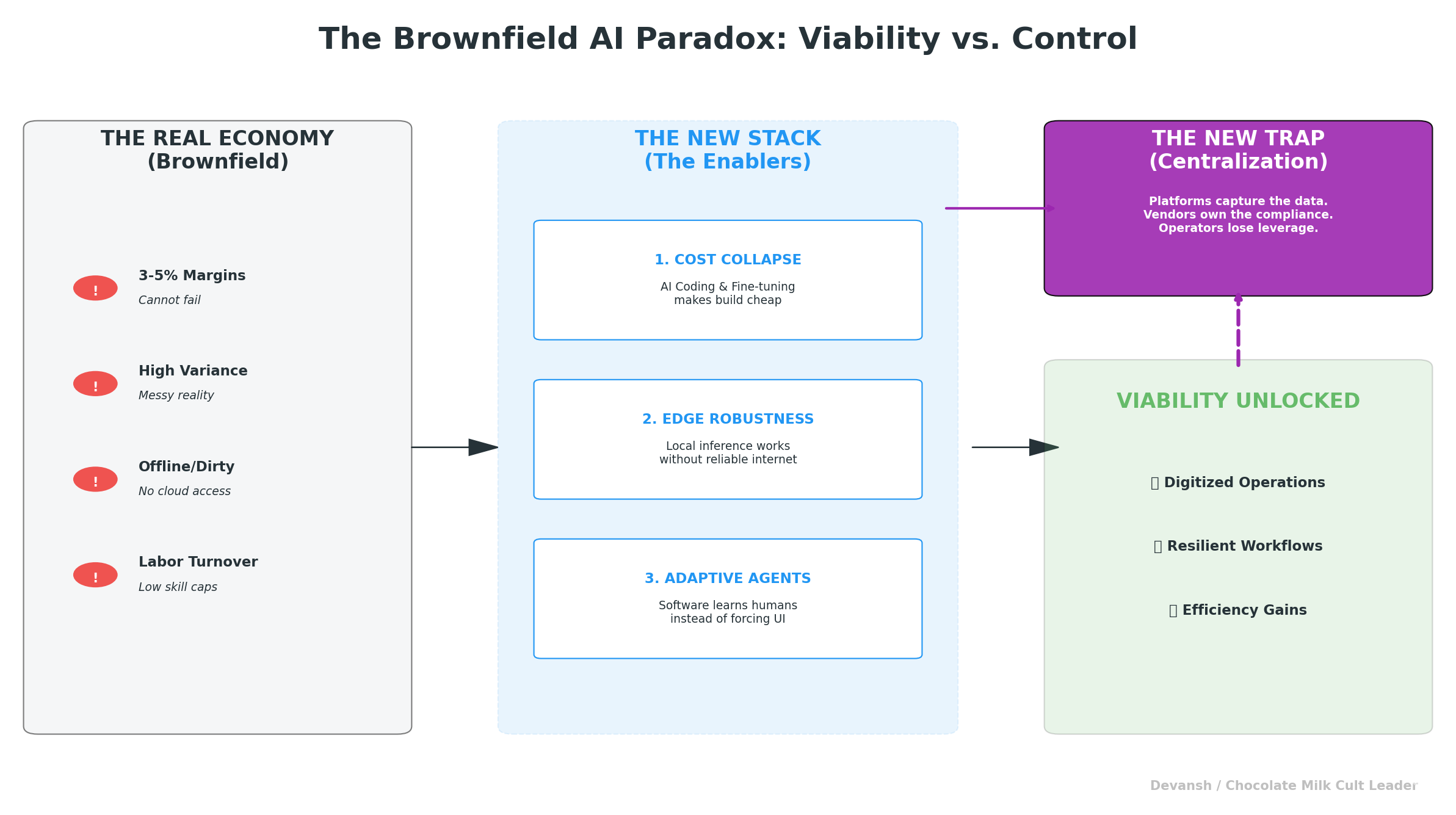

For decades, most of the real economy — restaurants, trucking fleets, farms, construction firms — couldn’t digitize in any meaningful way. Not because they didn’t want to, but because the math never worked. Software was too expensive to build, too slow to deploy, and too fragile to maintain in high-turnover, high-variance environments. A 3–5% margin business could not survive a failed IT rollout, and most rollouts failed.

A new technology stack has emerged that shifts the economics and mechanics of software in 4 ways:

AI has collapsed the cost of building and adapting software.

Automated development, model-assisted workflows, and lightweight fine-tuning remove much of the labor and time that made vertical software unaffordable for 3–5% margin businesses. This becomes even better when we consider that online work further pushes up the supply of developers, driving down the cost of code.

Edge computing gives physical environments the reliability they always needed.

Brownfield operations cannot depend on cloud availability, inconsistent networks, or API stability. They need systems that run locally, continue working offline, and behave predictably even when connectivity or cloud services fail. Edge AI has fewer dependencies, making it easier to account for.

Cheap models also reduce the cost of inference for low-intelligence tasks, which means that even cloud-based LLMs are easier to deal with.

Agentic systems can now learn from human corrections. Instead of forcing operators to adapt to rigid software, the software adapts to them. This makes it possible to digitize workflows that were previously too variable, too unstructured, or too context-dependent for traditional systems.

Together, these shifts make brownfield digitization economically viable for the first time.

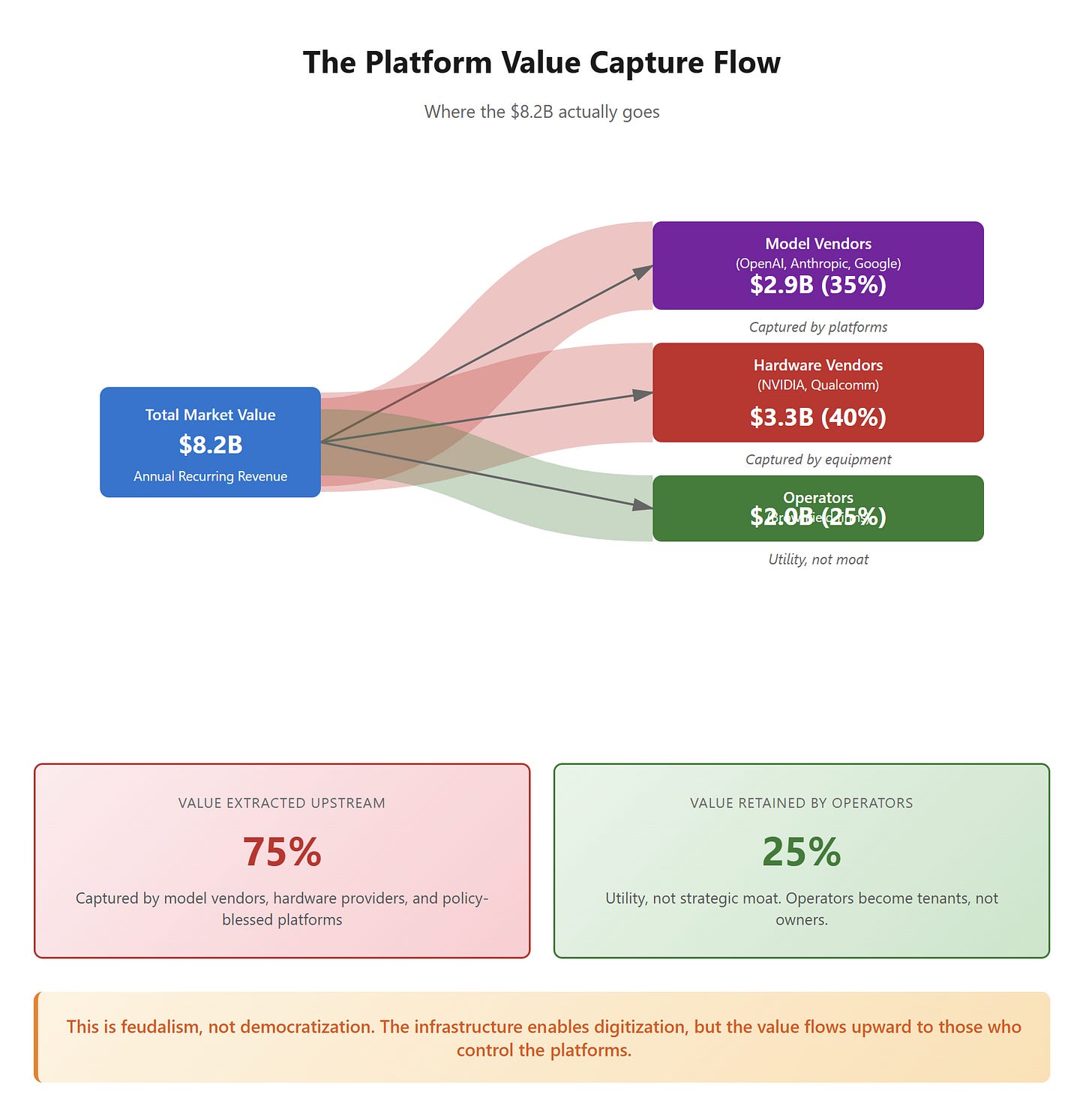

But that does not mean the benefits will flow evenly.

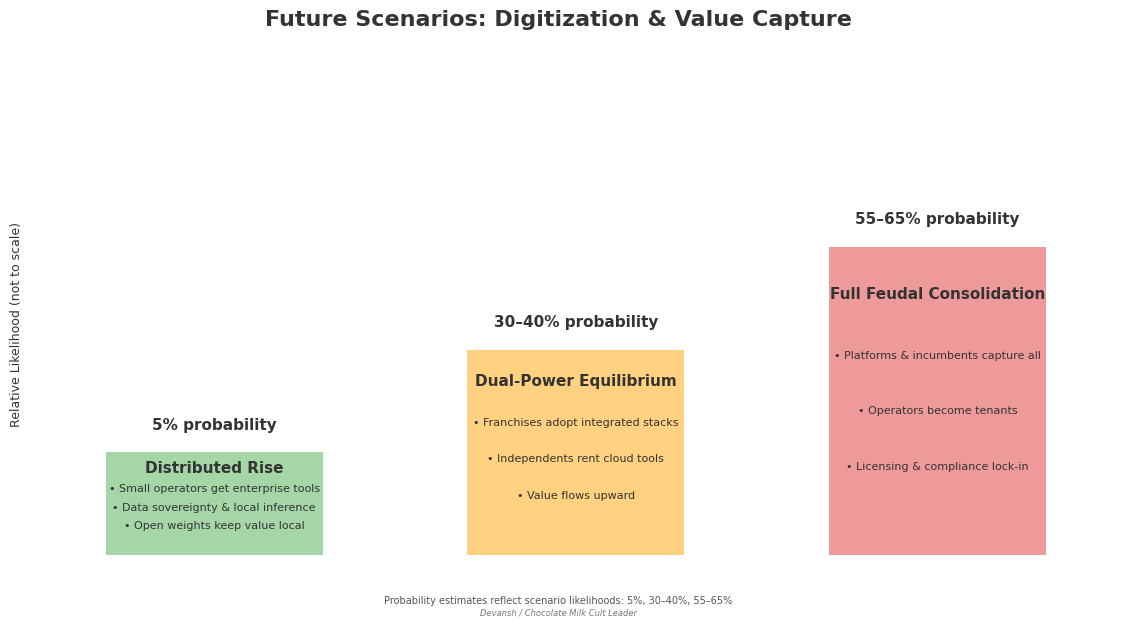

The same stack that enables digitization also creates new forms of centralization. Model providers control access to cognition. Hardware vendors control the edge. Incumbents control compliance pathways. Procurement rules and public subsidies often favor large enterprises over small operators. As a result, brownfield firms are being pushed into adopting AI to survive — but the long-term value created by that adoption is increasingly captured by platforms, not by the operators themselves.

In this chocolate milk cult exclusive, we will look at the following:

Why digitization historically failed in thin-margin sectors

Why it becomes viable now through cost collapse, edge robustness, and adaptive agents

Where the real economic opportunity lies — and which industries cross the viability threshold

What hidden technical and operational costs appear when AI enters physical environments

How platform control, consolidation, and policy design recapture the value

Why adoption becomes mandatory despite these risks

And finally, how operators can still maintain control through deliberate architectural and strategic choices

Get those prayer beads out because you are about to watch God in action.

Executive Highlights (TL;DR of the Article)

Low-tech industries weren’t “behind”; they were rational. On 3–5% margins, a $5M–$10M software rollout with a 30–50% failure rate was a death sentence. Rigid systems couldn’t survive physical chaos, and extreme workforce turnover made training impossible. So the clipboard beat the computer for forty years.

A new stack flips the economics: AI slashes build cost, edge computing finally gives physical environments deterministic reliability, cheap small models make inference viable at scale, and agentic systems adapt to messy human workflows. For the first time, brownfield digitization is actually feasible.

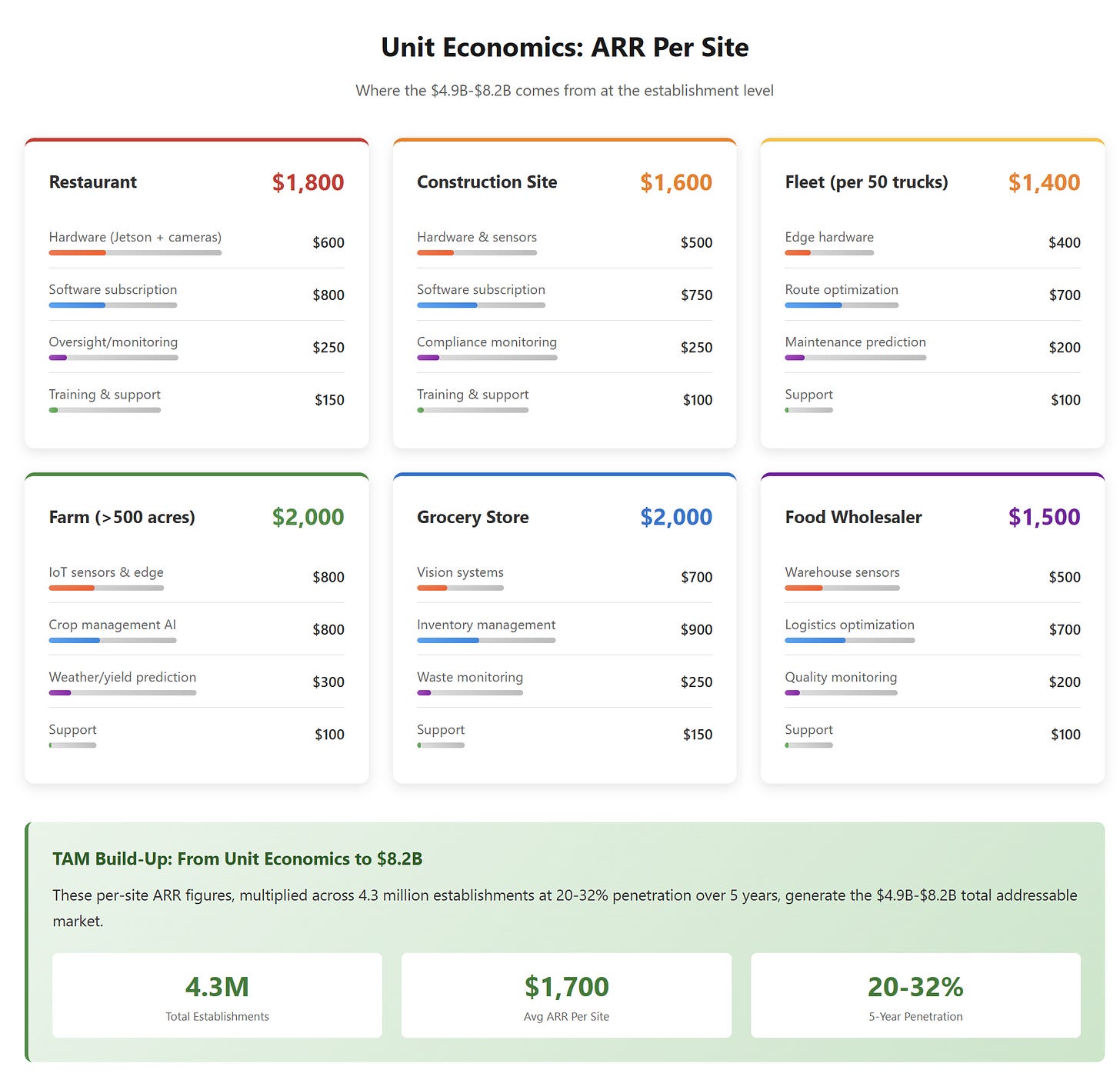

The winners are sectors with brutal margins and high automation potential. Based on fused margin and automation-readiness data, the Digital Viability Index flags agriculture, food wholesale, grocery, construction, and trucking as forced-adoption markets. Together they create a $4.9B–$8.2B ARR window within five years, led by franchises and multi-site operators who can absorb deployment and standardize training. Independents follow slowly and become dependent tenants of platform providers.

But the new stack carries its own bill: probabilistic agents introduce chained failure rates of 10–25%, AI-generated code adds bloat and vulnerabilities, and security audits become mandatory. The cost collapse shifts expense from capex to opex — faster builds, higher supervision.

Meanwhile, centralization accelerates. Incumbents bundle AI features for free, killing low-end entrants, while government procurement and subsidy rules favor large enterprises. Adoption becomes unavoidable, but value flows upward — to model vendors, hardware providers, and policy-blessed platforms.

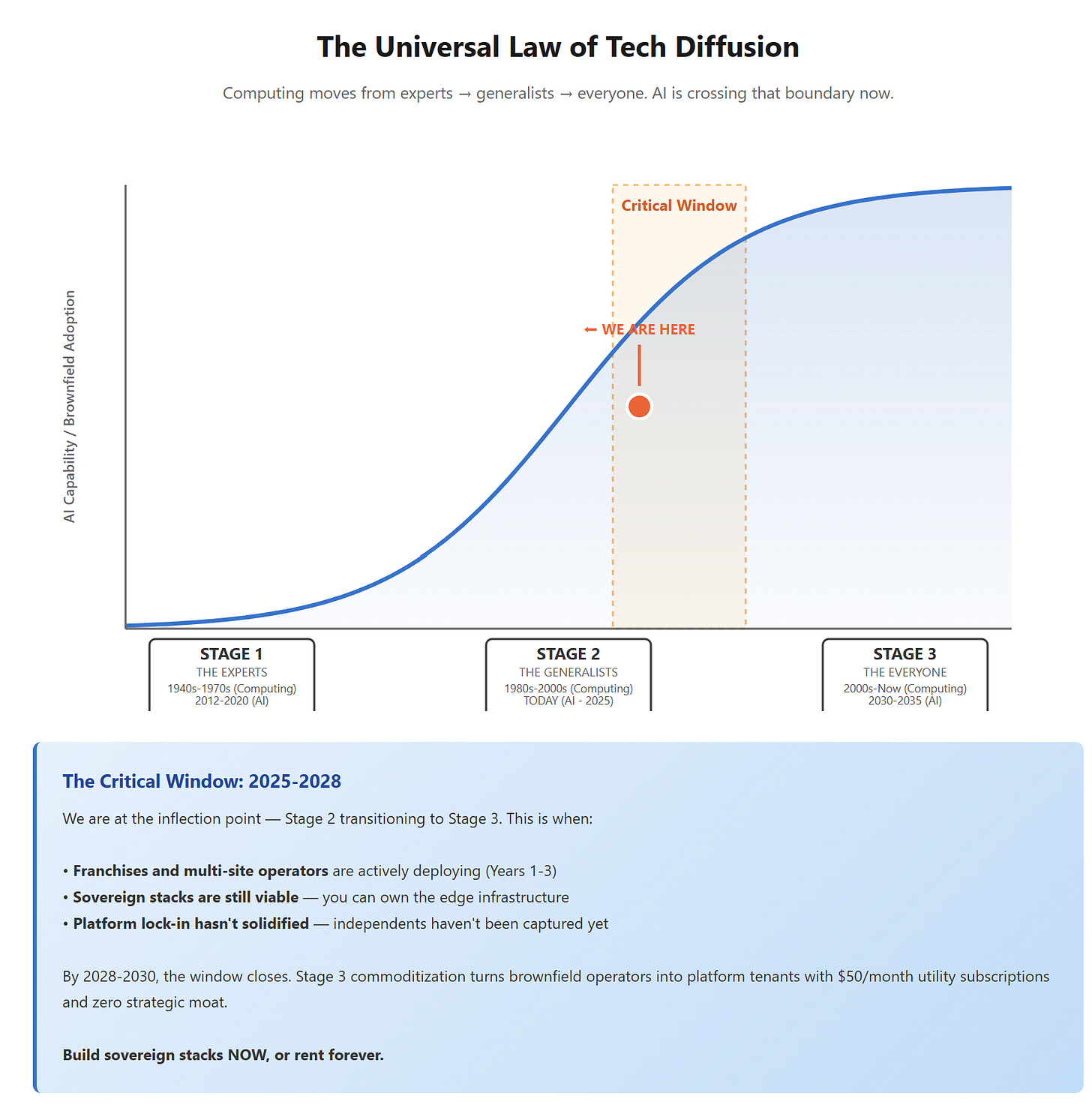

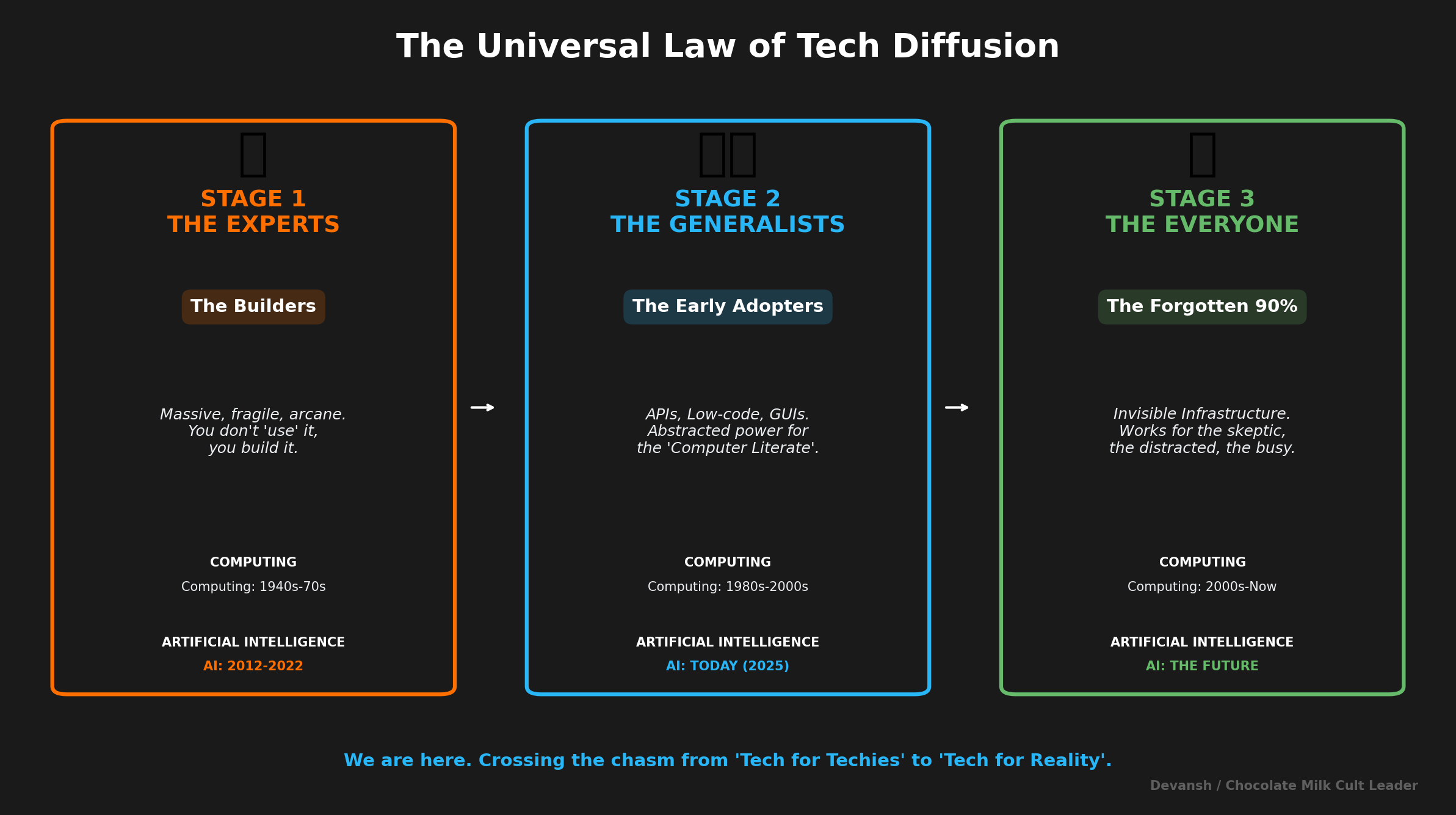

Computing always moves from experts → generalists → everyone. AI is crossing that boundary now. The combination of cheap cognition, robust edge hardware, capital pressure, and regulatory momentum makes AI’s expansion into low-tech industries inevitable — and turns these long-ignored sectors into a rare, time-bound investment opportunity.

Investing in AI to disrupt low-tech industries is a winning bet. It’s a rare confluence of technology, capital (investors need somewhere to invest as SaaS becomes saturated), regulation (governments promote digitization initiatives), and sociology (you have a lot of unemployed developers that can provide low-cost implementation). Let’s understand how.


I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

PART 1: WHY DIGITIZATION FAILED FOR 40 YEARS

Let’s be clear: The reason your local construction site runs on WhatsApp and yelling isn’t because they hate technology. It’s because for forty years (that’s going back to the 80s for you old people, FYI), buying software was a suicide pact.

The digital transformation consulting industry built a forty-year career out of telling restaurants, trucking companies, and construction firms that they were “behind the curve” and needed to “modernize.” What they didn’t tell them — or didn’t understand themselves — was that the math was designed to kill them.

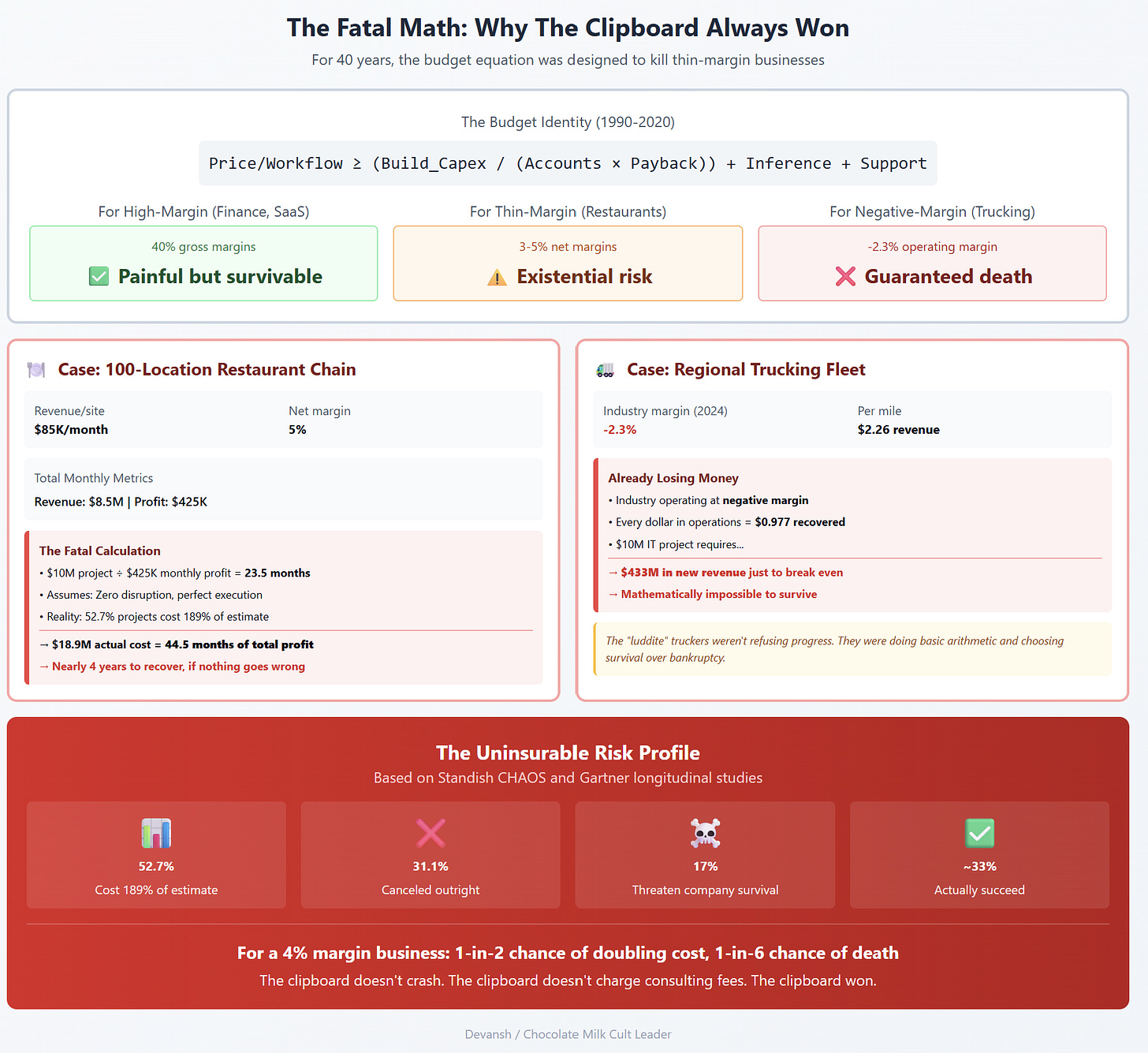

Traditional enterprise software operated on a simple budget identity:

Price_per_workflow ≥ (Build_Capex / (Accounts × Payback_Months)) + Inference_VarCost + Support_Friction

For high-margin industries — finance, enterprise SaaS, telecommunications — the Build_Capex term ($5M–$10M) was painful but survivable. For a firm operating on 40% gross margins, a $10 million software project could be amortized across enough accounts and recovered fast enough to justify the risk.
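To make the budget identity concrete, here is a minimal Python sketch that evaluates its right-hand side. The function name and most of the sample inputs (500 accounts, a 24-month payback window, and the two per-workflow cost terms) are illustrative assumptions, not figures from any real deployment; only the $10M build cost comes from the text.

```python
def price_floor(build_capex, accounts, payback_months,
                inference_var_cost, support_friction):
    """Minimum price per workflow implied by the budget identity above."""
    amortized_build = build_capex / (accounts * payback_months)
    return amortized_build + inference_var_cost + support_friction


# Hypothetical inputs for illustration only.
floor = price_floor(
    build_capex=10_000_000,   # $10M build, the upper end quoted in the text
    accounts=500,             # assumed number of paying accounts
    payback_months=24,        # assumed payback window
    inference_var_cost=20.0,  # assumed variable cost per workflow
    support_friction=50.0,    # assumed support cost per workflow
)
print(f"Price floor: ${floor:,.2f} per workflow")
```

Shrinking `build_capex` or growing `accounts` is the only way to pull the floor down, which is why the build cost dominates the rest of this section.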

For brownfield industries, the same equation was a death sentence.

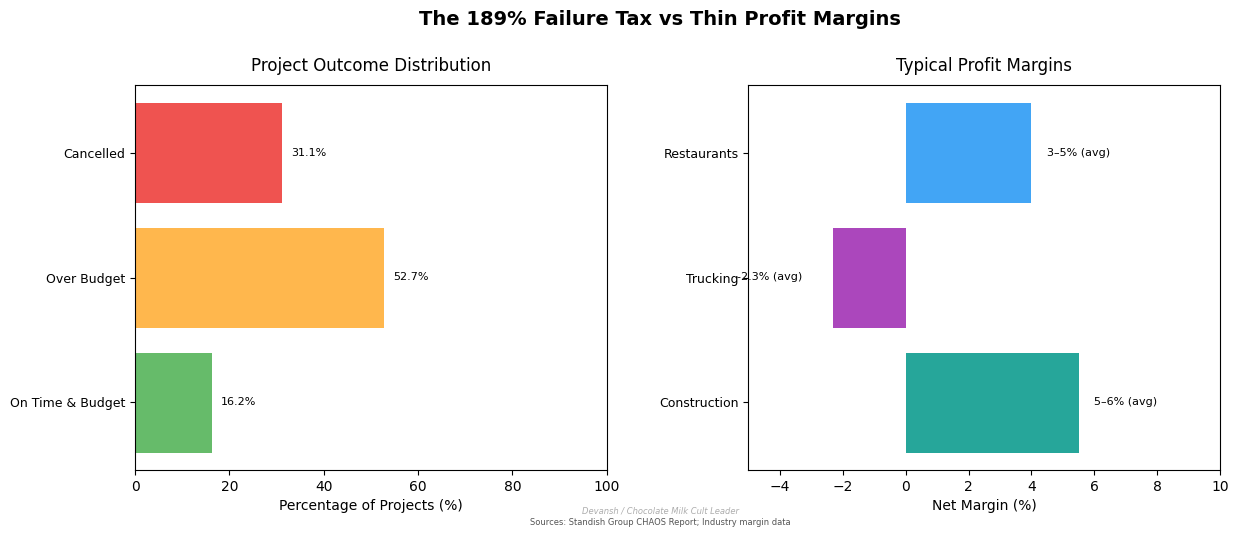

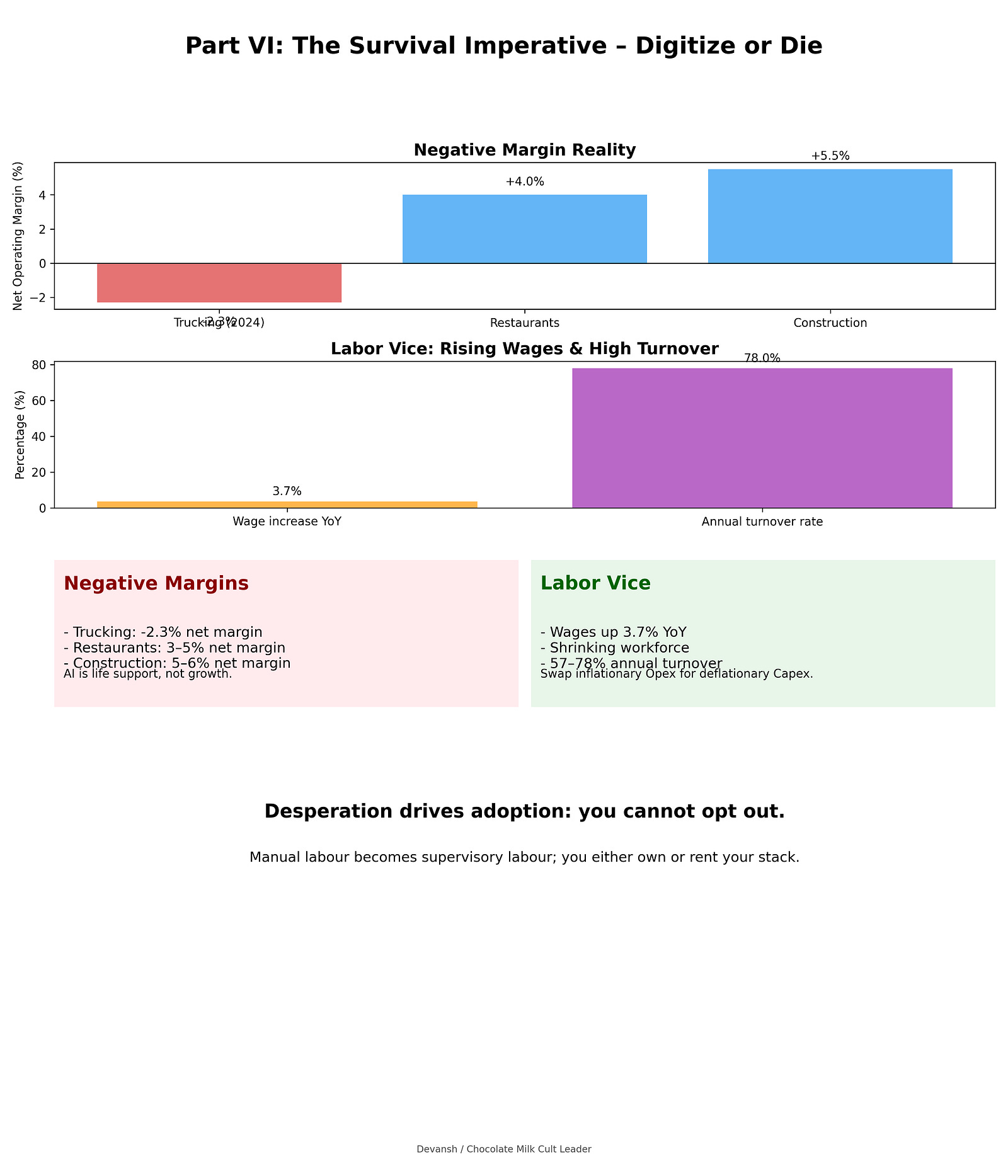

A full-service restaurant operates on 3–5% net profit margins. In 2024, the average truckload carrier ran a -2.3% operating margin ($2.26 per mile): not low-margin, but negative-margin (2024 was an unusually bad year rather than the norm, but it still shows how beaten down the industry is). A construction firm’s net margins float around 5–6% on average, with even well-run general contractors often stuck in the mid-single digits.

Now run the math. A $10 million digitization project, under best-case conditions, needs to be amortized. For a restaurant chain with 100 locations generating $85K in monthly revenue per site, that’s $8.5M in total monthly revenue. Assuming a 5% net margin, that’s $425K in monthly profit. A $10M project would consume 23.5 months of total profit just to break even — assuming perfect execution and zero operational disruption.
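The break-even arithmetic above can be reproduced in a few lines. This is a sketch using the figures from the paragraph (100 sites, $85K monthly revenue per site, a $10M project); the function name is mine, and the lower-margin rows are added only to show how sensitive the result is.

```python
def payback_months(project_cost, sites, monthly_revenue_per_site, net_margin):
    """Months of total net profit needed to cover a one-time project cost."""
    monthly_profit = sites * monthly_revenue_per_site * net_margin
    return project_cost / monthly_profit


months = payback_months(10_000_000, 100, 85_000, 0.05)
print(f"{months:.1f} months of profit")  # prints "23.5 months of profit"

# Sensitivity across the 3-5% net margin band quoted for restaurants.
for margin in (0.03, 0.04, 0.05):
    m = payback_months(10_000_000, 100, 85_000, margin)
    print(f"net margin {margin:.0%}: {m:.1f} months")
```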

Here is why the math never worked, and why the graveyards of the 1990s and 2000s are full of failed ERP rollouts.

1. The Law of the 189% Failure Tax

Historically, if you wanted to digitize a trucking fleet or a restaurant chain, you had to buy into “Enterprise Software.” You hired consultants. You bought licenses. You paid for “integration.”

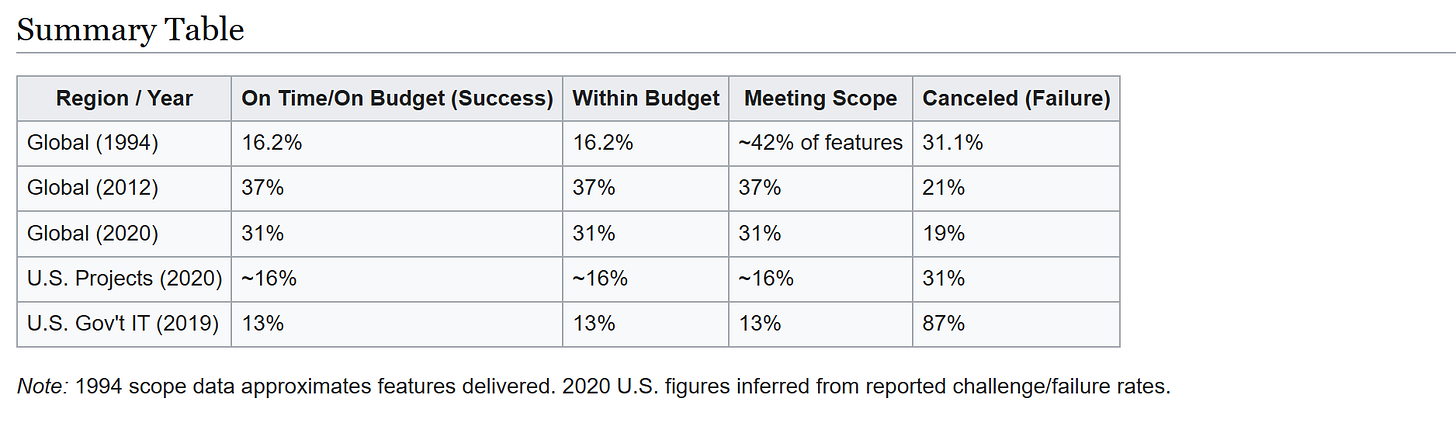

The sticker price was usually $5 million to $10 million. But the real cost was the failure tax. A foundational Standish Group CHAOS report on tens of thousands of IT projects found that 31.1% were canceled outright, and over half (52.7%) ended up costing about 189% of their original estimates. Later CHAOS data hasn’t been much kinder: even in the 2010s and 2020s, only about one-third of projects are fully successful; the rest are either massively over budget, late, or quietly fail.

Think about what that means for a business running on 4% margins.

If you are a logistics company making $4 profit on every $100 of revenue, and you sign up for a $10 million project that suddenly costs $28.9 million, you didn’t just miss a quarterly target. You died.

You would need to generate an extra $472 million in revenue just to pay for the mistake: the $18.9 million overrun divided by a 4% margin.
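As a rough check on that number, the sketch below treats the 189% figure as an overrun on top of the original budget, which is the reading that produces the $28.9 million total. The function name is hypothetical, and the calculation assumes every extra dollar of cost must be covered by net profit at the stated margin.

```python
def extra_revenue_needed(budget, overrun_ratio, net_margin):
    """Revenue required for net profit to cover a cost overrun."""
    overrun = budget * overrun_ratio  # dollars spent beyond the original budget
    return overrun / net_margin


needed = extra_revenue_needed(budget=10_000_000, overrun_ratio=1.89, net_margin=0.04)
print(f"${needed / 1e6:,.1f}M in extra revenue")  # prints "$472.5M in extra revenue"
```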

The “dumb” farmers and “luddite” construction bosses weren’t rejecting the future. They were doing the math. They looked at the risk profile — a 1-in-2 chance of doubling the budget, a 1-in-6 chance of the project killing the company entirely — and they rationally decided to stick with the clipboard. The clipboard doesn’t crash, and it doesn’t charge you consulting fees.

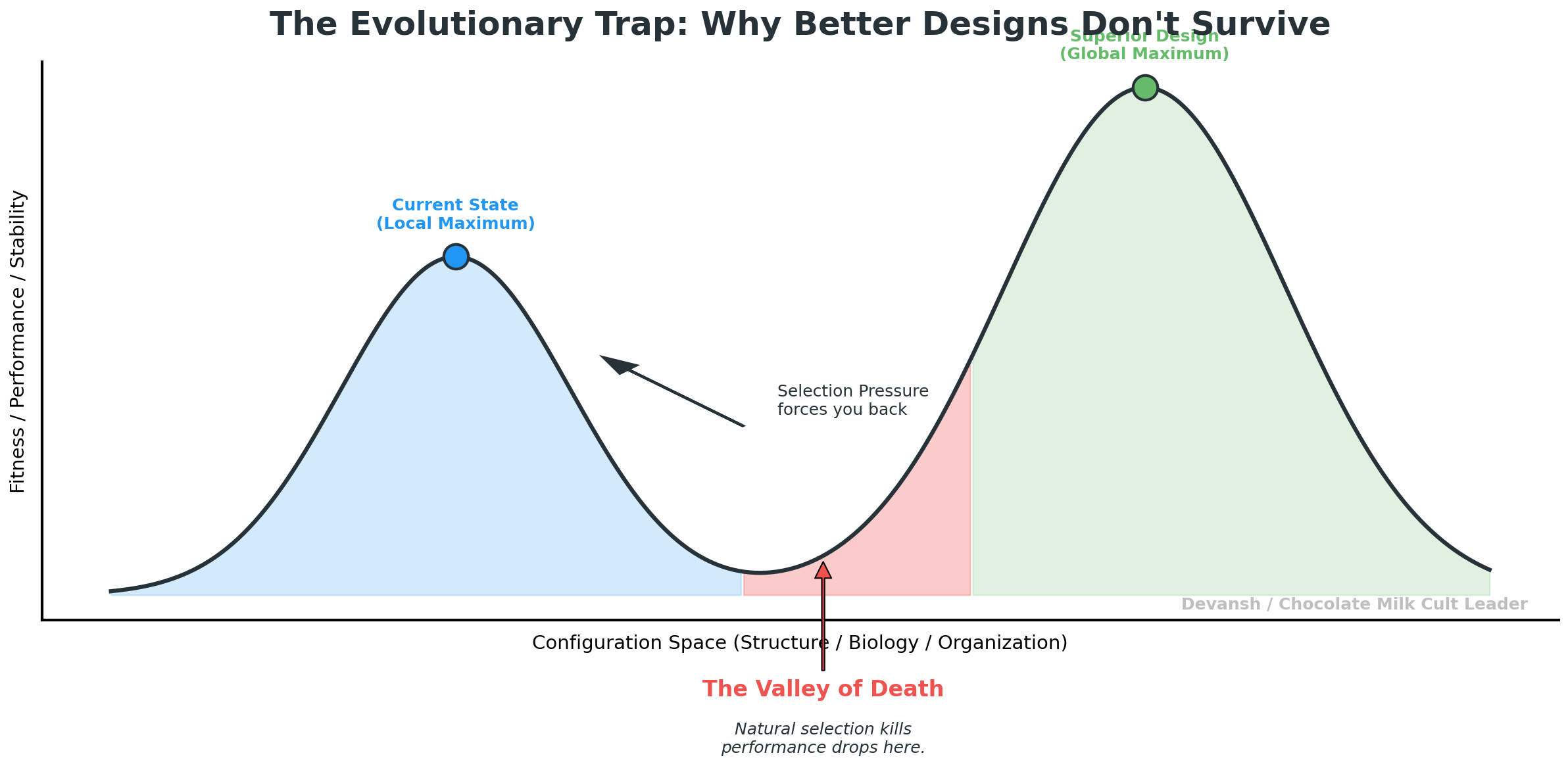

PS: As an interesting aside, this phenomenon is often described as an evolutionary valley. A system (biological, technological, or organizational) can see a higher peak ahead, but to reach it, it must first get worse. It must pass through a dip in performance, stability, or fitness before climbing to a superior design. Evolution avoids these valleys because natural selection punishes anything that loses capability, even temporarily. Many pure researchers/engineers think only in terms of the great end result, w/o understanding that their proposed solutions will carry a lot of short-term pain and are thus likely to be shut down by management (who can’t truly understand the better outcomes). This is why communication is key for engineers (see our guide here).

2. The Brownfield Mismatch

“Unfortunately, 22.3 percent of Americans in rural areas and 27.7 percent of Americans in Tribal lands lack coverage from fixed terrestrial 25/3 Mbps broadband, as compared to only 1.5 percent of Americans in urban areas” — U.S. Department of Agriculture.

Silicon Valley builds software for people who sit in chairs. The brownfield economy happens in the mud, in the kitchen, and on the road.

The “Enterprise” stack was rigid. It demanded that you change your business to fit the database schema. But physical reality is messy.

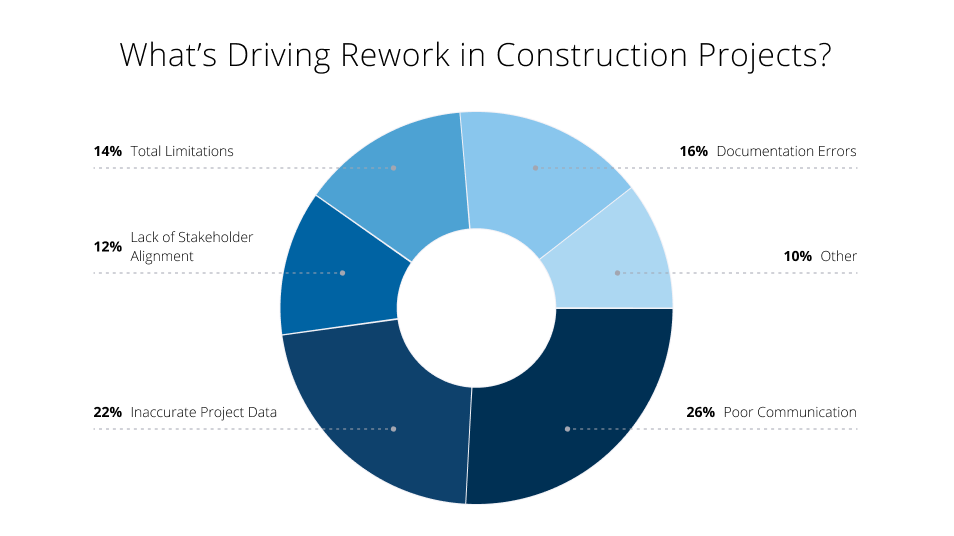

Construction: You have 30 subcontractors who hate each other, working in the rain, with plans that change every hour. You can’t model that in a rigid SQL database. One recent analysis estimates $46 billion a year in construction rework just from miscommunication and poor coordination; an earlier FMI/Plangrid study put U.S. rework from bad data alone at over $31 billion a year.

Agriculture: You have tractors that cost half a million dollars but can’t talk to the sensors in the soil because the data formats are proprietary. You also have rural internet speeds that make dial-up look fast.

Food Service: You have legacy point-of-sale systems (Oracle/MICROS) that are so brittle that changing a menu item requires a support ticket.

The software demanded perfection. The world offered chaos. The mismatch meant that even if you paid the money, the system usually didn’t work. This was made worse by the sheer number of implementation leads and digital consultants who knew fuck all about the industry they were setting out to optimize.

3. The Human Friction (Or: Why Training is Impossible)

Here is the final nail in the coffin. Even if you built the software, and even if it worked, you couldn’t get anyone to use it.

The tech industry assumes “users” stick around. They assume you can send someone to a 3-day training seminar.

In the brownfield world, the workforce is a revolving door.

Hospitality turnover: 78% per year.

Construction turnover: 57% per year.

Logistics and transportation: 50–60% annual turnover, with long-haul trucking often hitting 90%+ in large carriers.

If you run a restaurant chain, your entire staff effectively turns over every 15 months or so (12 months ÷ 0.78).

This doesn’t align with software that takes 6 months to master. You are spending money training people who will quit before they are productive. It’s a leaky bucket that you are trying to fill with expensive champagne.

For forty years, this was the trap. High build costs. High failure rates. High turnover. The gravity was too strong. The margin was too thin. The clipboard won because the clipboard was the only thing that didn’t threaten to bankrupt the company.

But then, the cost of intelligence collapsed. There are only three ways this plays out. Let’s explore them and see what will happen based on what makes digitization possible.

PART 2: THE NEW STACK: WHY DIGITIZATION IS NOW ECONOMICALLY POSSIBLE

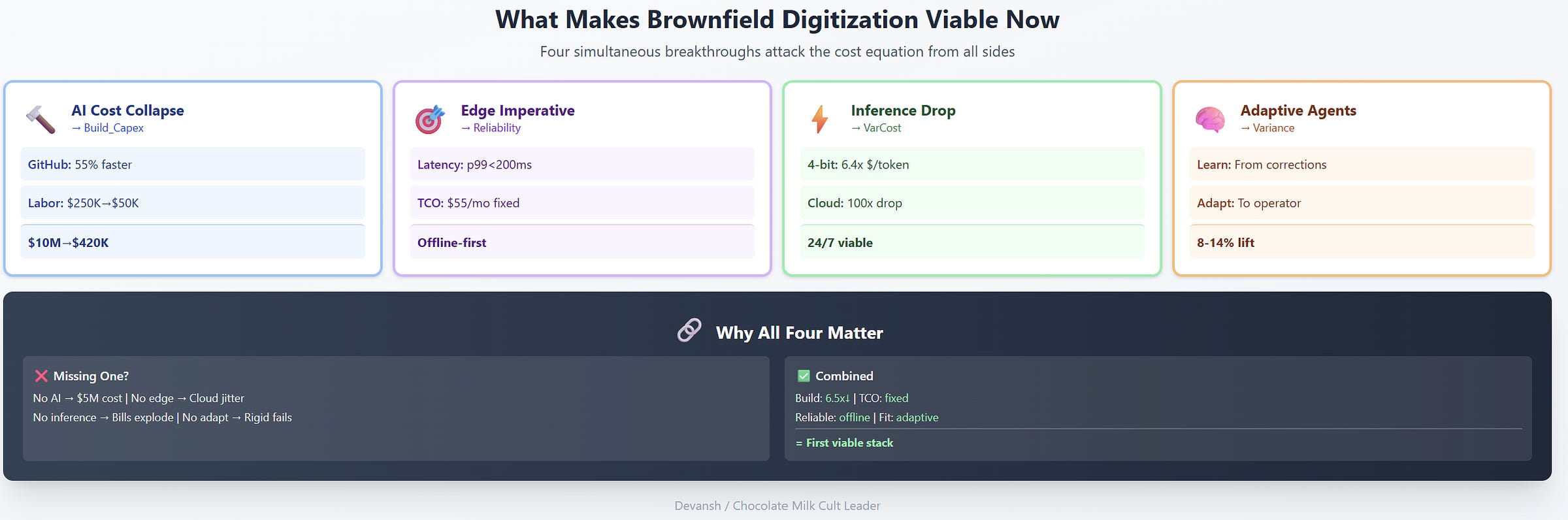

For the first time in forty years, the cost structure, reliability profile, and adaptability requirements of the physical world actually match what modern software can do. And it’s happening for four reasons.

1. AI Has Collapsed the Cost of Building Software

Let’s get one thing straight: The biggest cost in traditional software wasn’t cloud compute, wasn’t servers, wasn’t integration — it was humans writing code slowly and painfully.

That’s the part AI just nuked.

AI-assisted development has already delivered 20–40% productivity gains in real-world teams, with controlled experiments showing up to ~50–55% faster completion on constrained tasks. In practice, that means a large chunk of the ‘human typing’ work in greenfield builds and brownfield maintenance is now handled by machines, not people.

Why?

AI handles boilerplate

AI handles integration glue

AI handles documentation. AI can even do QA on code bases (Augment Code is particularly good here) to make changes easier.

AI handles migrations

AI handles tests

AI writes scaffolding that used to take months

In one documented case, Roche cut time-to-competency from 1.5 years to 90 days by re-architecting training around AI-driven systems. Across other industries, AI-assisted onboarding and training routinely show 20–50% time-to-competency reductions, depending on task and role.

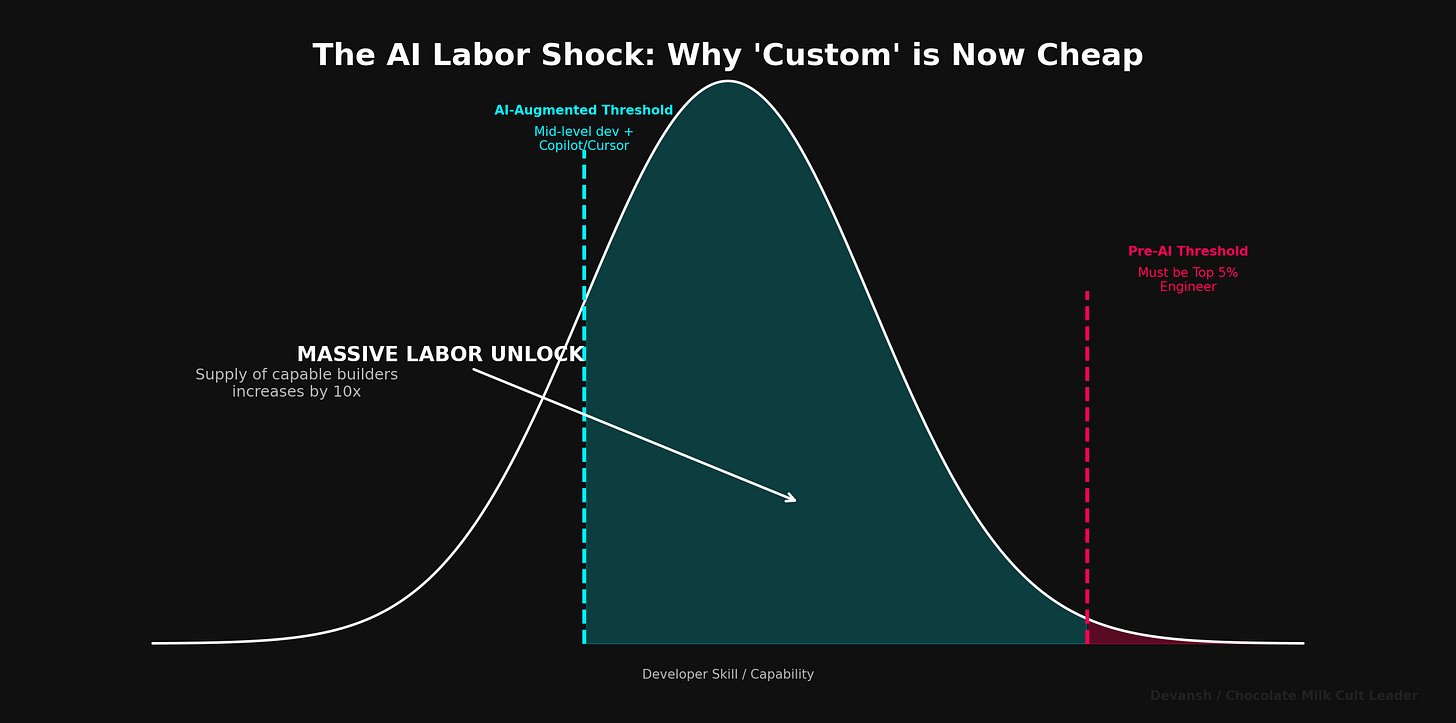

And because the entire global developer pool is now plugged into GitHub Copilot, Claude Code, Augment, Cursor, etc., the supply of “good enough engineers” just shot up while the cost per unit of labor went down.

In plain English: Software is no longer artisanal; it’s assembly-line work done by humans supervising machines.

This is a godsend to Brownfield operators, who don’t care about ideology; they care about cost curves. A simple software template, plus a remote forward-deployed engineer (FDE), plus AI to make quick edits, makes intelligent software far more feasible than before.

2. Edge Computing Makes Physical Work Environments Actually Digitizable

Cloud evangelists hate hearing this, but here’s the truth: cloud AI is structurally incompatible with real-world operations.

Not because it’s slow (on average it’s faster than local systems, which require some engineering to hit peak performance). Not because it’s expensive (local LLMs and distributed inference can be very difficult). But because it fails in exactly the ways physical systems cannot tolerate.

Physical environments need:

Offline robustness (internet goes out, work must continue). There is some beauty in my writing this article as Cloudflare went down.

Deterministic behavior (no jitter, no sudden p99 latency spikes)

Local fail-safes (a camera system freezing for 200ms is a hazard, not a UX hiccup)

Stable models (no forced migrations, no platform-dependent outages)

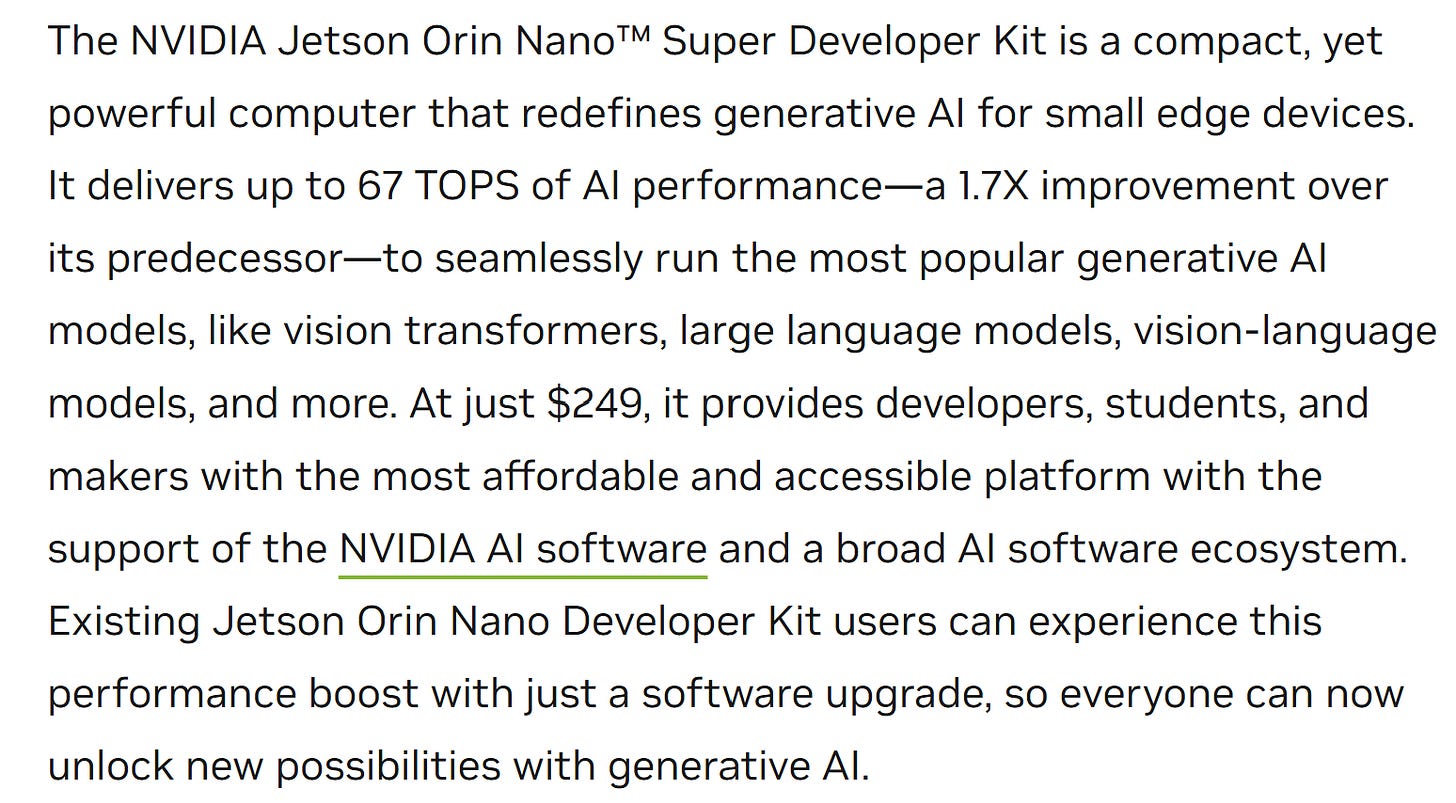

Edge systems don’t win because they’re elegant — they win because they’re cockroach-simple and survive hostile environments. A Jetson Orin or RB6 running a quantized model isn’t “cutting-edge.” It’s “good enough, always on, and impossible to interrupt accidentally.”

That’s what the real world needs. After all, physical work is not averaged. It’s binary. It either runs, or it stops (unironically great relationship advice as well).

3. Cheap Models Make Inference Economically Feasible

You do not need GPT-5 to check if a chicken went bad or verify a forklift’s load angle.

Squeezed, quantized, and distilled models are small enough to run on $200 hardware, cheap enough to run continuously, accurate enough for bounded tasks, and customizable enough for site-specific workflows.

Low-intelligence tasks used to require high-intelligence tooling because nothing smaller existed. Now we have tiny 3B–8B models that can run entirely on-device, meaning inference cost is effectively free after hardware amortization.

This trend will continue as specialized ASIC AI inference providers move to these spaces to compete against Nvidia’s monopoly in standard cloud computing. I also expect more pop-up “nano-Datacenters” that provide compute to more local spaces.

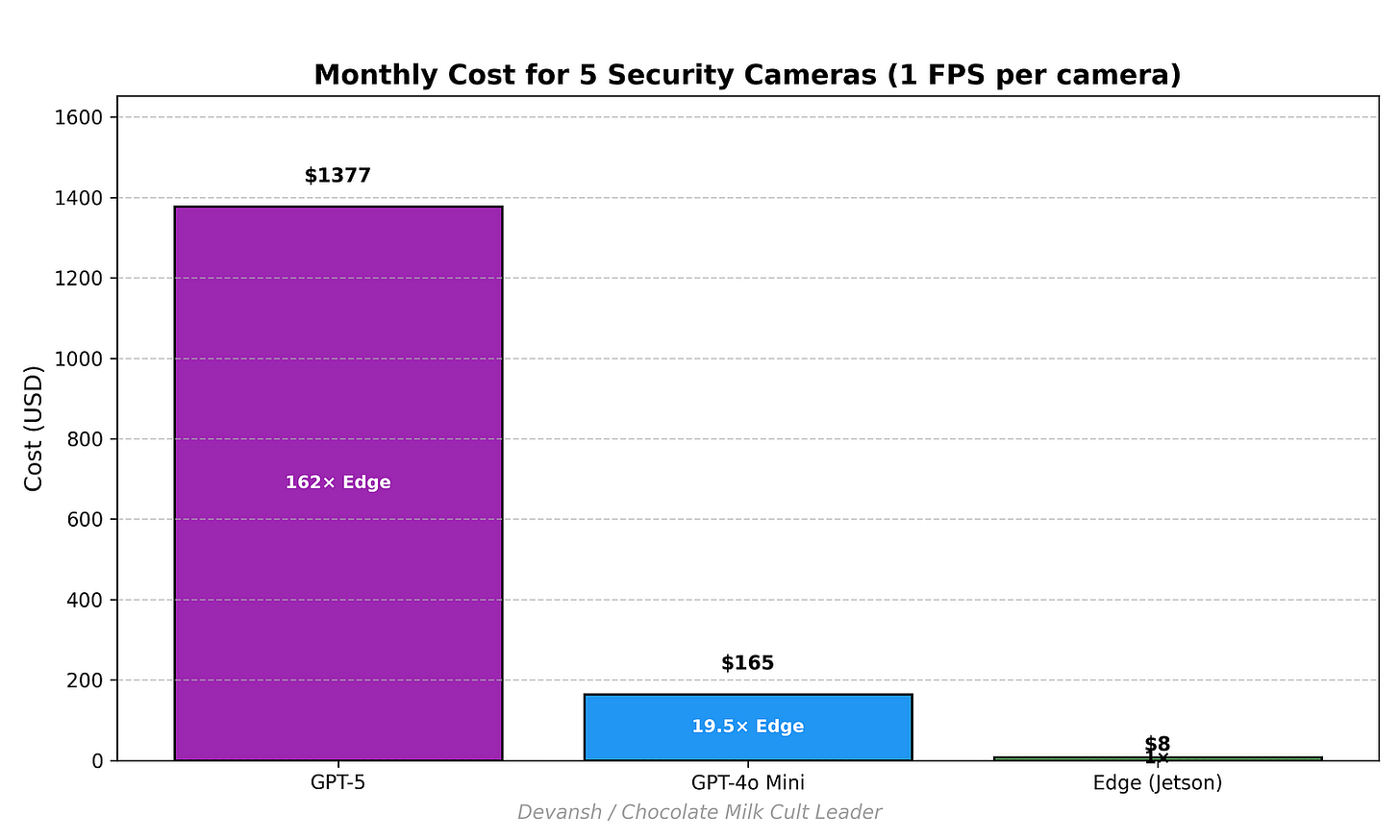

Let’s take the most boring, most common real-world case: a QSR with 5 security cameras pointing at fryers, cash registers, drive-through lanes, whatever. Nothing fancy. Just five cheap eyes staring into space.

Five cameras → 1 frame per second → 720 hours per month.

That’s 12.96 million images per month that need to be understood, labeled, or tracked. Now watch what happens when you run those frames through the cloud.

Let’s say we take two relevant multimodal models (I use GPT because that’s the most famous name):

Top-end model: GPT-5 Input token price: $1.25 per million tokens

High-ROI model: GPT-4o Mini. Input token price: $0.15 per million tokens

A typical image consumes ~85 tokens for vision processing. So:

GPT-5: ~0.0001063 dollars per image → $1,377/month → $16,524/year

GPT-4o Mini:~0.00001275 dollars per image → $165/month → $1,983/year

This is the cheap case. The expensive models explode into five- and six-figure annual bills. And remember, this is just raw inference — no retries, no burst load, no output tokens, no latency penalties.

Now onto the same math with edge.

NVIDIA’s Jetson Orin Nano Super developer kit costs $249.

Amortized over five years → $4.15/month.

Power consumption at 25W, NYC rates → roughly $4.32/month (I’m taking NYC to steelman with upper limits)

Total edge cost: ≈ $8.50/month

Run the ratio:

GPT-5 vs Edge: ~162× more expensive

GPT-4o Mini vs Edge: ~19.5× more expensive

Even the cheapest respectable cloud model is nearly 20× more expensive than edge. And the top-end model is two orders of magnitude worse.
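For anyone who wants to rerun the comparison with their own numbers, here is a small sketch of the arithmetic. The token prices, the ~85 tokens per image, the $249 hardware price, and the 25W draw come from the text above; the $0.24/kWh electricity rate is my assumption, back-solved from the $4.32/month power figure, and the function names are illustrative.

```python
SECONDS_PER_HOUR = 3600


def monthly_frames(cameras, fps, hours_per_month):
    """Number of frames captured per month across all cameras."""
    return cameras * fps * SECONDS_PER_HOUR * hours_per_month


def cloud_monthly_cost(frames, tokens_per_image, usd_per_million_tokens):
    """Input-token cost only: no retries, output tokens, or burst pricing."""
    return frames * tokens_per_image * usd_per_million_tokens / 1_000_000


def edge_monthly_cost(hardware_usd, amortization_months, watts,
                      hours_per_month, usd_per_kwh):
    """Amortized hardware plus electricity for an always-on edge device."""
    hardware = hardware_usd / amortization_months
    power = watts / 1000 * hours_per_month * usd_per_kwh
    return hardware + power


frames = monthly_frames(cameras=5, fps=1, hours_per_month=720)
gpt5 = cloud_monthly_cost(frames, tokens_per_image=85, usd_per_million_tokens=1.25)
mini = cloud_monthly_cost(frames, tokens_per_image=85, usd_per_million_tokens=0.15)
edge = edge_monthly_cost(249, amortization_months=60, watts=25,
                         hours_per_month=720, usd_per_kwh=0.24)  # assumed rate

print(f"frames/month: {frames:,}")
print(f"GPT-5: ${gpt5:,.2f}/month, {gpt5 / edge:.0f}x edge")
print(f"GPT-4o Mini: ${mini:,.2f}/month, {mini / edge:.1f}x edge")
print(f"Edge: ${edge:.2f}/month")
```

The ratios shift by a few percent depending on whether the edge cost is rounded to $8.50 first, but the order of magnitude does not.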

Now, in practice, you’ll have a higher setup cost with an edge model. In fact, when discussing the real cost of open LLMs, we specifically stressed how expensive Open Source gets. However, that overhead should be smaller here, for a few reasons:

That article is about building from scratch; in this case, vendors will sell a packaged product plus setup services to a low-tech business.

A huge difficulty is in distributed inference at scale, which is not a factor for locally constrained edge systems (we aren’t trying to serve LLMs to every possible user across the internet, only to local workers).

Even assuming higher product + service costs, we can still reasonably expect a 10x cost differential.

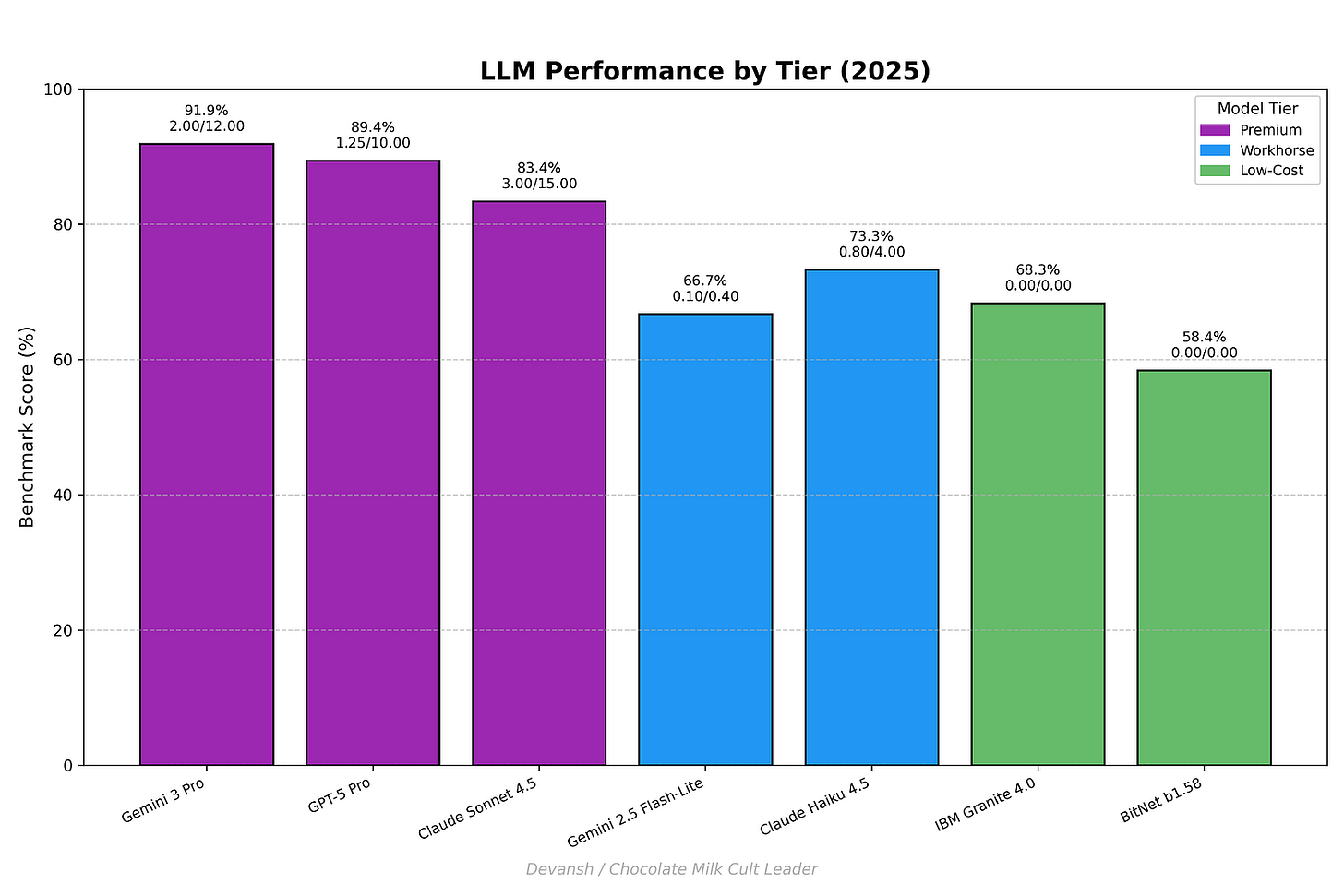

Additionally, for low-cost use cases that don’t have the offline requirement (can use Cloud APIs), we’re also seeing an amazing rise in low-latency, low-cost intelligent workhorses like Gemini Flash Lite and Claude Haiku that offer speedy inference at a much lower price. This opens up a lot of flexibility for users.

4. Agentic Systems Can Learn From Human Corrections

This is the most underrated breakthrough.

Traditional software demanded:

requirements

process mapping

standardization

top-down logic

upfront design

Brownfield industries don’t have “processes” — they have habits. Workflows are often improvisational, idiosyncratic, and dependent on whoever’s on shift. Newer LLMs have much more flexibility here, being able to dynamically accommodate instructions, reconfigure workflows, and follow more complex sets of instructions.

This is the first time in history that software can keep pace with the real world instead of asking the real world to behave like software.

But to keep things fair, we must quantify the other side of this coin.

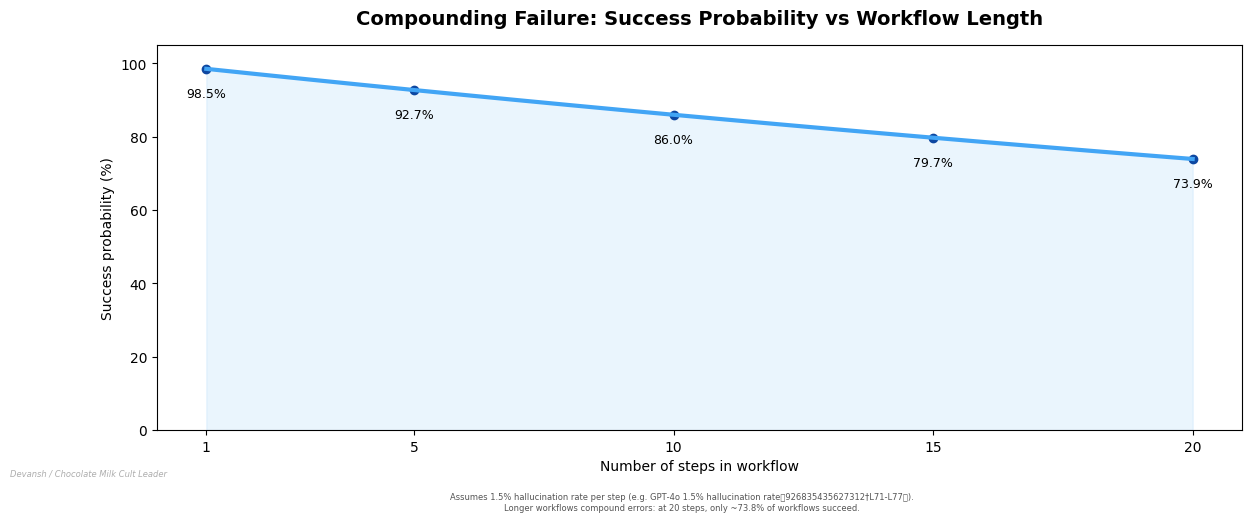

Say your model hallucinates ~1.5% of the time, even with grounding.

Sounds tiny — until you chain steps together:

8-step workflow (trucking dispatch): 11% failure rate

20-step workflow (warehouse ops): 26% failure rate

One in four workflows breaks.
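Those failure rates follow from compounding the per-step error. The sketch below assumes each step fails independently with the same 1.5% probability, which is a simplification (real errors are often correlated), and the function name is mine.

```python
def chained_failure_rate(per_step_error, steps):
    """Probability that at least one of `steps` independent steps fails."""
    return 1 - (1 - per_step_error) ** steps


for name, steps in [("trucking dispatch", 8), ("warehouse ops", 20)]:
    rate = chained_failure_rate(0.015, steps)
    print(f"{steps}-step workflow ({name}): {rate:.0%} failure rate")
```

Under the same assumption, halving the per-step error roughly halves the workflow failure rate, so per-step reliability work pays off directly.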

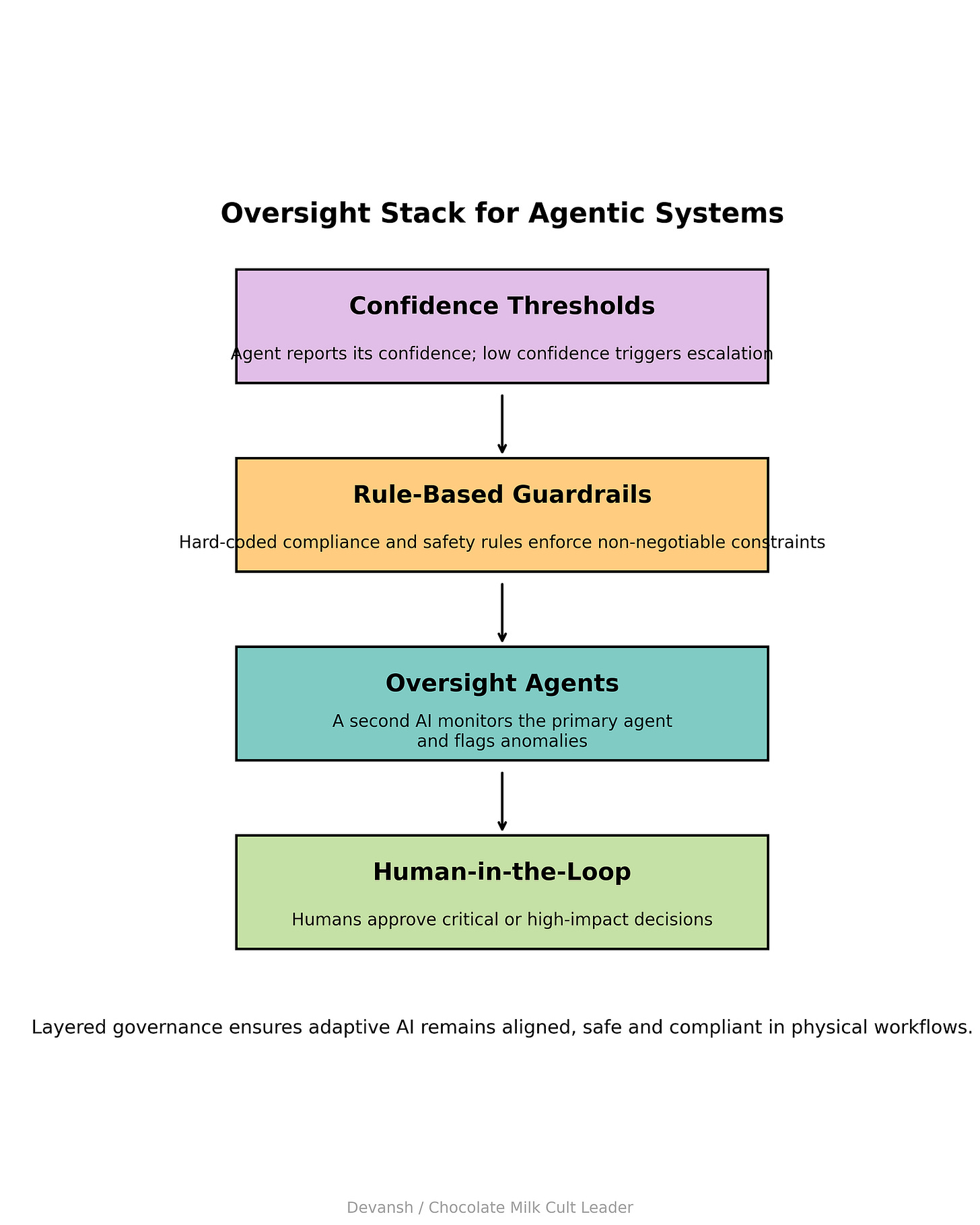

This is why agent stacks need:

fallback rules

human-in-loop gates

confidence thresholds

oversight agents

domain-specific constraints
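Here is a minimal sketch of how a few of these safeguards might fit together: a domain constraint, a confidence threshold, a human-in-loop gate, and a fallback rule. Everything in it is hypothetical, including the action names, the thresholds, and the idea that the model reports a usable confidence score; oversight agents are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class AgentOutput:
    action: str
    confidence: float  # assumed model-reported score in [0, 1]


# Hypothetical dispatch actions the agent is allowed to take.
ALLOWED_ACTIONS = {"assign_load", "reroute", "hold"}
AUTO_THRESHOLD = 0.90    # assumed: execute automatically at or above this
REVIEW_THRESHOLD = 0.60  # assumed: below this, skip the human and fall back


def route(output: AgentOutput) -> str:
    """Decide what happens to a single agent decision."""
    if output.action not in ALLOWED_ACTIONS:
        return "fallback"      # domain constraint violated: use the fixed rule
    if output.confidence >= AUTO_THRESHOLD:
        return "execute"       # confident enough to act without review
    if output.confidence >= REVIEW_THRESHOLD:
        return "human_review"  # human-in-loop gate
    return "fallback"          # too uncertain to be worth a reviewer's time


print(route(AgentOutput("reroute", 0.95)))       # prints "execute"
print(route(AgentOutput("assign_load", 0.72)))   # prints "human_review"
print(route(AgentOutput("delete_orders", 0.99))) # prints "fallback"
```

The design choice worth noting is that the constraint check comes first: a high confidence score never overrides the list of allowed actions.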

We will quantify the full “AI Tax” in Part 4. For now, understand that adaptive agents are powerful and fragile — and both matter.

With the history of failure and the reasons things are different now both covered, let’s see if this problem is worth solving.

PART 3: THE ECONOMIC WINDOW

So the tech works. The math works. The cockroaches can survive the nuclear winter of a Wi-Fi outage.

Now for the only question that actually matters to a capitalist: Is this problem (lucrative enough to be) worth solving?

For a decade, Venture Capital and other smart finance treated these industries like charity cases. “AgTech” and “Construction Tech” were places you parked money if you wanted to feel good about “real work” while your SaaS portfolio did the heavy lifting. But the cost collapse changes the calculus. When the entry ticket drops from $5M to $400k, the addressable market doesn’t just grow — it explodes.

Here is where the money is, who is going to take it, and why the “democratization” narrative is about to hit a brick wall.

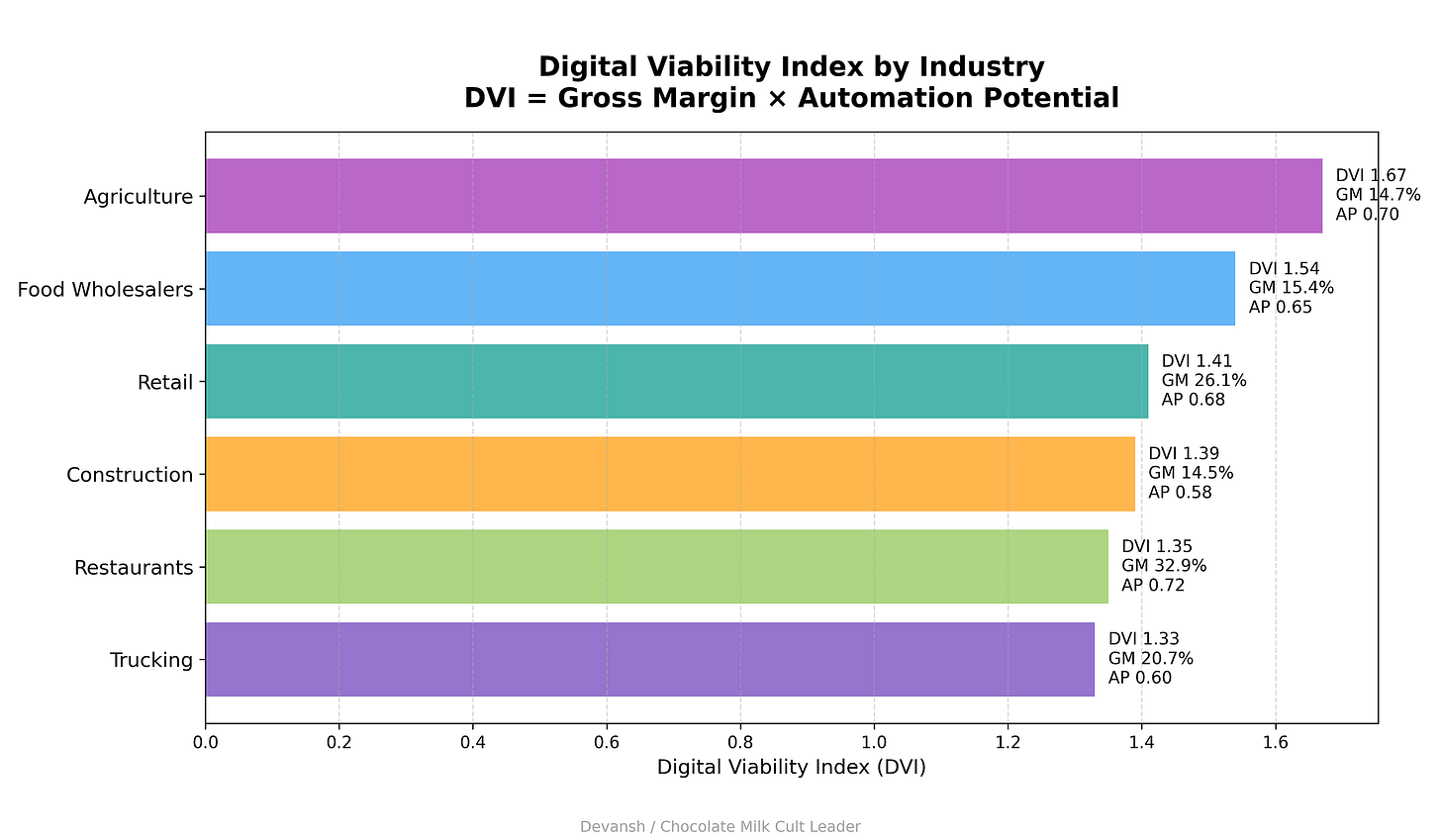

1. The Digital Viability Index (DVI)

We ran the numbers. We didn’t just look at “who needs tech.” We looked at who is starving. The idea: sectors with low margins but high automation potential are more viable than sectors with slightly better margins but workflows that can’t be standardized. And sectors with rock-bottom margins but massive operational chaos become the most viable — because they have no choice.

To get the actual numbers, we fused Damodaran’s 2025 gross-margin data with an automation readiness score. The result is the Digital Viability Index (DVI) — a map of where the pain is acute enough to force adoption.

The DVI Leaderboard:

Farming/Agriculture: 14.7% Gross Margin. High labor volatility. High input variance. (DVI Score: 1.67)

Food Wholesalers: 15.4% Gross Margin. Razor-thin inventory turns. (DVI Score: 1.54)

Retail (Grocery): 26.1% Gross Margin. 40,000 SKUs per store. (DVI Score: 1.41)

Construction: 14.5% Gross Margin. The highest “rework” cost in the economy. (DVI Score: 1.39)

Trucking: 20.7% Gross Margin. Negative operating margins in 2024. (DVI Score: 1.33)

Agriculture tops the list not because it’s the easiest (it’s not — it’s rural, fragmented, and technologically skeptical), but because it’s the most desperate. Farms operate on 14.7% gross margins, face severe labor shortages, and deal with climate volatility that makes manual planning untenable. The pain is so acute that even difficult technology deployments become rational.

Restaurants rank #5 despite having the highest gross margin (32.9%) because the automation potential is slightly lower — customer service, kitchen timing, and menu customization all require human judgment in ways that inventory management doesn’t. But 78% annual turnover and razor-thin net margins (3–5%) still make them a top-tier target.

Trucking sits at #6 despite being one of the most logistics-heavy industries because the workflows are already semi-digital (ELDs, fleet management software). The low-hanging fruit was picked in the 2010s. What’s left — autonomous routing, predictive maintenance, dynamic load matching — is harder to automate and requires edge inference at scale.

The sectors that didn’t make the list? High-margin services (consulting, law, finance) don’t need the cost collapse because they could already afford software. Ultra-fragmented sectors (home services, local trades) have too much heterogeneity and too little coordination for platform plays to work.

The six industries above represent the Goldilocks zone: desperate enough to adopt, structured enough to automate, and large enough to matter.

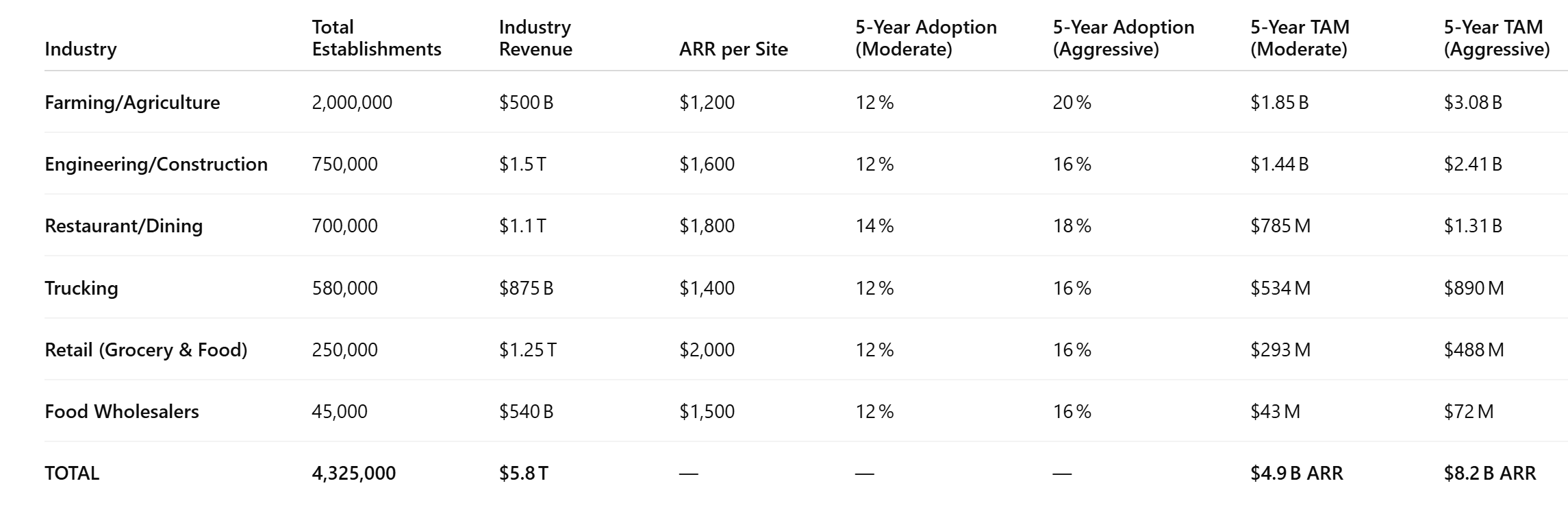

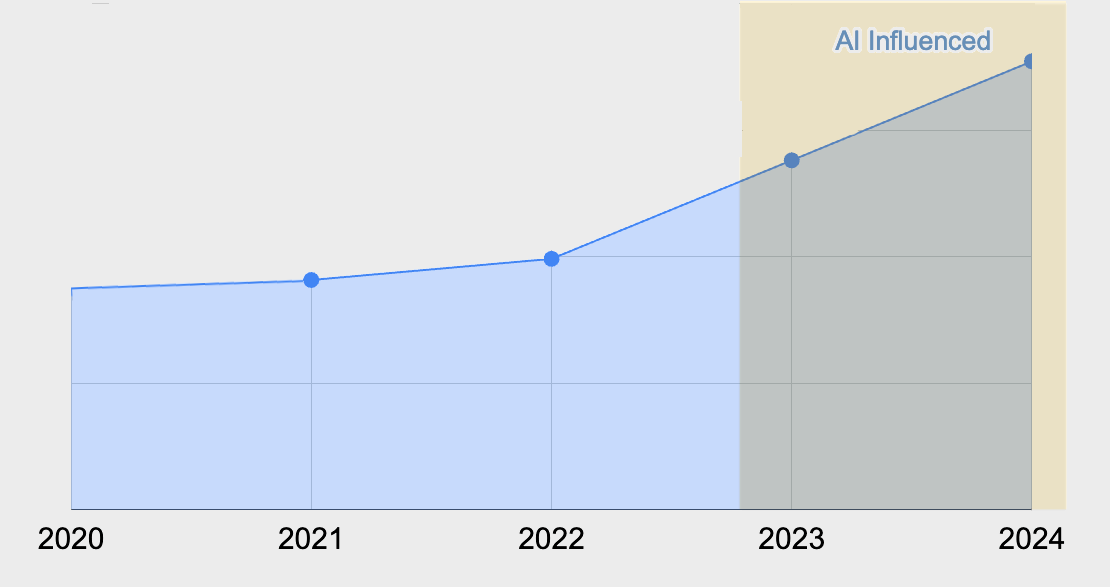

2. The Prize: $8.2 Billion ARR (And Why That’s Conservative)

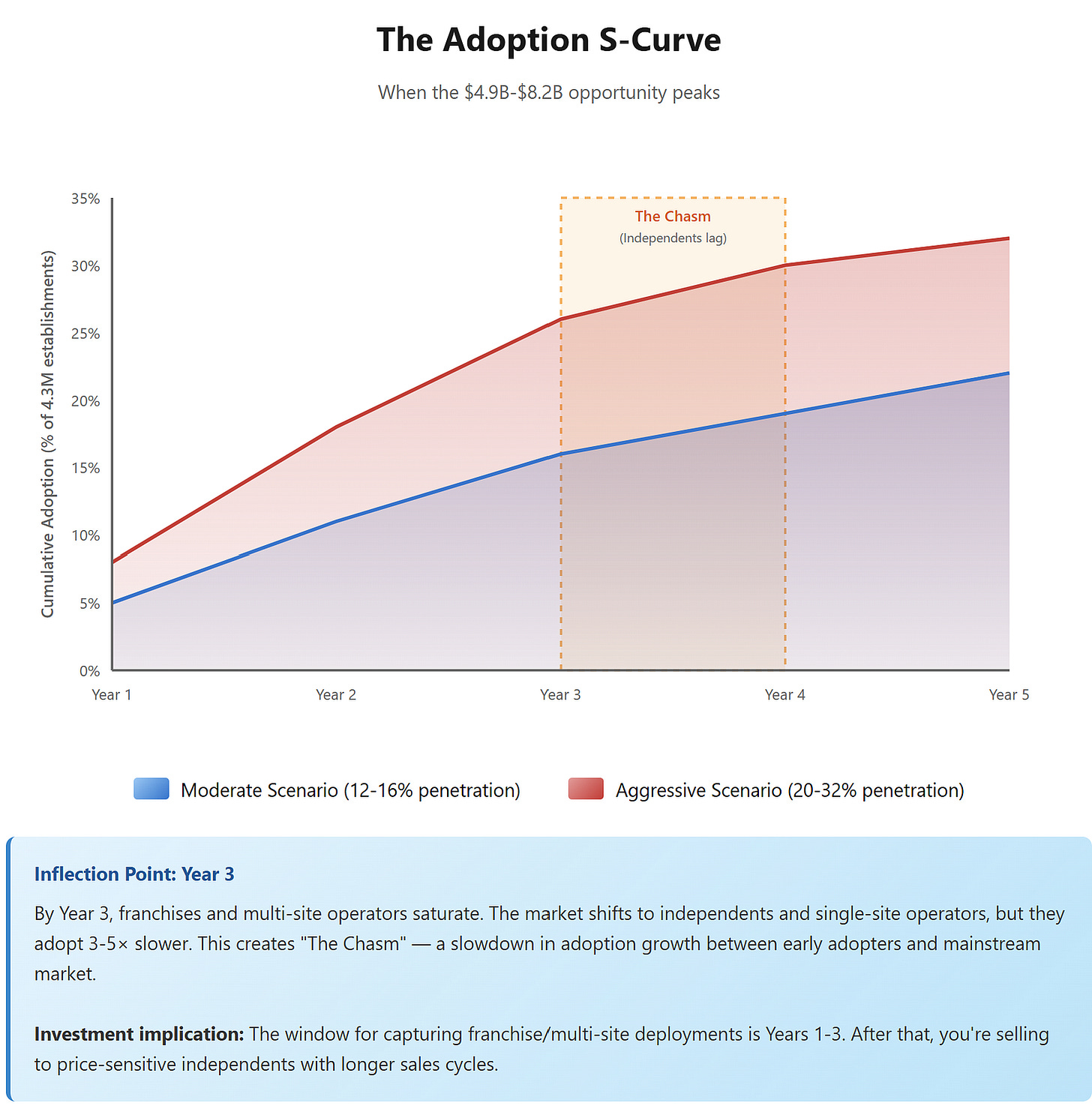

Let’s build the market size from first principles. Take the number of establishments, estimate realistic adoption rates based on historical S-curves (credit card terminals, mobile POS, cloud migration), and calculate annual recurring revenue per site.

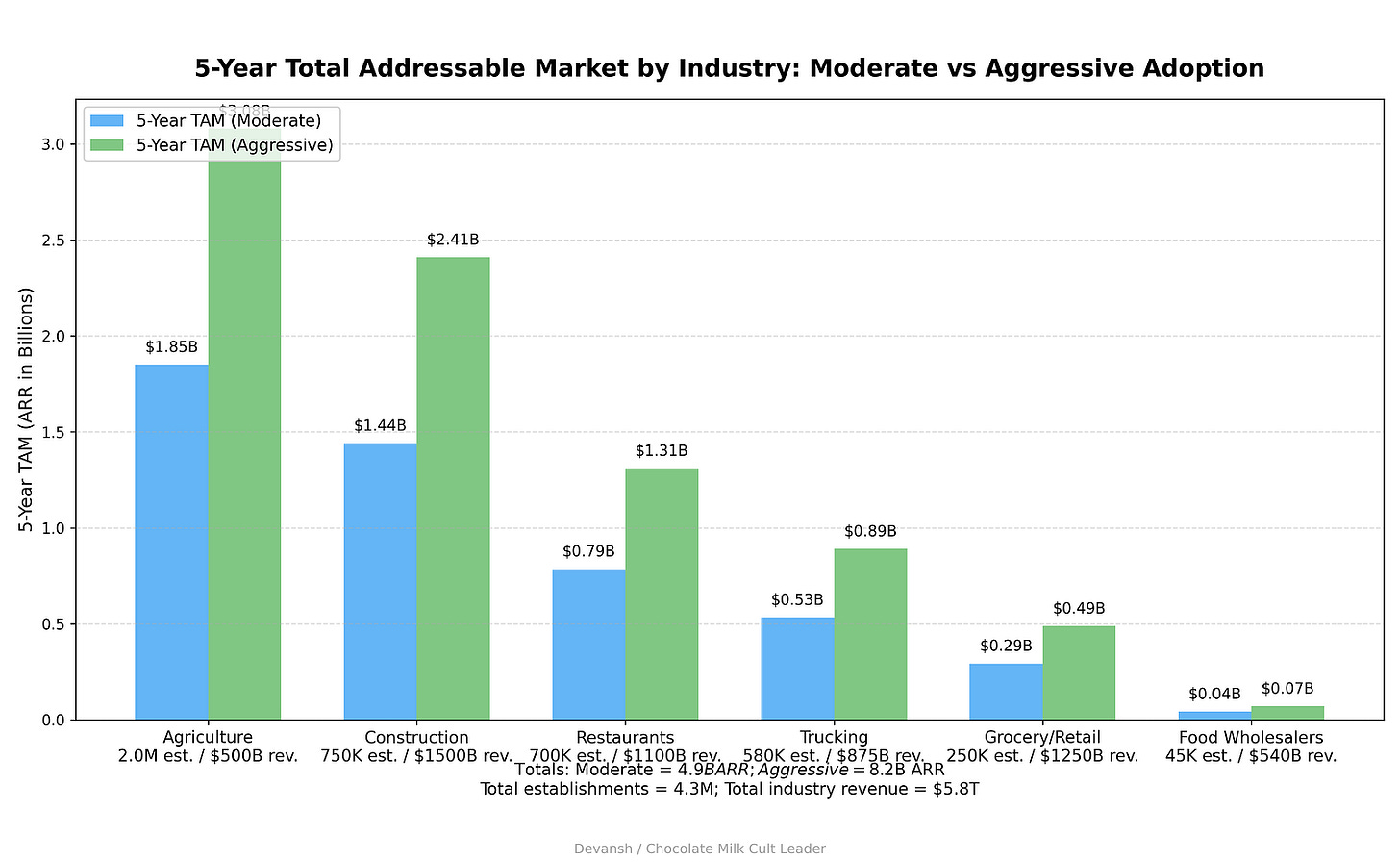

When you map these viable sectors against adoption curves, you get the Total Addressable Market (TAM).

Using a Bass diffusion model calibrated to historical tech adoption (like Square and AWS), we project a 5-year opportunity window.

The Conservative View: $4.9 Billion ARR.

The Aggressive View: $8.2 Billion ARR.

This spans 4.3 million establishments and nearly $6 trillion in economic activity.

The eagle-eyed amongst you will notice two different colors in that very pretty chart —

Moderate scenario (the conservative view above): Assumes cloud-migration-pace adoption (12–16% penetration over 5 years). This is conservative: it matches how long it took SMBs to move from on-premise email servers to Google Workspace.

Aggressive scenario: Assumes Square-like adoption (20–32% penetration). This is optimistic but grounded — Square went from zero to 2 million merchants in under 10 years by targeting underserved SMBs with previously inaccessible payments infrastructure.

Also worth noting, the $4.9B–$8.2B range is the addressable annual recurring revenue at Year 5, not one-time project fees. This is subscription-model SaaS revenue, compounding year-over-year as more sites come online.
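For readers unfamiliar with the Bass diffusion model mentioned above, here is a minimal sketch of its standard closed form for cumulative adoption. The `p` (innovation) and `q` (imitation) values are illustrative placeholders I picked, not the calibration behind the article's scenarios, so the printed curves should not be read as reproducing the 12–16% or 20–32% ranges.

```python
import math


def bass_cumulative(t, p, q):
    """Cumulative adopter fraction F(t) under the Bass diffusion model.

    p: coefficient of innovation (adoption independent of existing adopters)
    q: coefficient of imitation (adoption driven by existing adopters)
    """
    decay = math.exp(-(p + q) * t)
    return (1 - decay) / (1 + (q / p) * decay)


# Illustrative parameters only; the real calibration is not published here.
scenarios = {"slower": (0.01, 0.30), "faster": (0.03, 0.40)}
for label, (p, q) in scenarios.items():
    curve = [bass_cumulative(year, p, q) for year in range(1, 6)]
    print(label, " ".join(f"{share:.1%}" for share in curve))
```

Multiplying the year-5 share by the number of establishments and an assumed ARR per site would give a revenue figure in the same spirit as the ranges above.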

For context: Toast, the restaurant POS incumbent, did $4.2B in revenue in 2024 across ~120K restaurant locations. This TAM analysis suggests that the entire brownfield AI stack across six industries could generate $5–8B in ARR by Year 5 — roughly the size of one successful vertical SaaS incumbent.

This is not “AI will eat the world” scale. This is not a trillion-dollar opportunity. This is industrial infrastructure for a $5.8 trillion sector of the economy that has been operating on clipboards for forty years.

It’s big enough to matter. It’s not big enough to attract megacap VCs chasing 100x returns. That’s actually a feature, not a bug — it means the space stays under-hyped and over-deliverable. Fund true industry insiders with tech connections (if you’re an industry insider who needs technical support — devansh@svam.com. Let’s get very rich together).

3. The Great Bifurcation (Or: The Toast vs. Square Trap)

The market is going to split in two. It will follow the Toast vs. Square archetype.

The Fast Lane: Franchises, Chains, and Coordinated Networks

Who: Multi-site restaurant franchises (McDonald’s, Chick-fil-A), large construction firms with 100+ employees, agricultural cooperatives, regional trucking fleets with 50+ trucks.

Why they adopt first:

Capital: They can absorb the $200K-500K upfront cost for a 20-site rollout without risking bankruptcy.

IT capacity: They have a CTO or VP of Operations who can evaluate vendors, manage deployments, and handle edge hardware procurement.

Training infrastructure: They can mandate adoption across locations, run centralized training programs, and enforce standardization.

Compliance leverage: They already have SOC2 audits, HACCP certifications, and OSHA programs — adding AI oversight is an incremental cost, not a greenfield build.

Adoption timeline: Years 1–3. By Year 3, 25–35% of franchises and large operators will have deployed edge AI systems at scale.

Evidence: Restaurant franchise renewal rates are 90% vs 60% for independents. Franchises don’t just survive — they compound advantages through coordinated technology adoption. Toast’s customer base skews heavily toward multi-unit operators for exactly this reason.

The Slow Lane: Independents, Single-Site Operators, and Mom-and-Pops

Who: Independent restaurants, solo construction contractors, family farms under 500 acres, owner-operator truckers.

Why they lag:

Capital constraints: A $50K-100K upfront cost is a bet-the-business risk for a single-location operator running on 3–5% net margins.

No IT staff: The owner is also the GM, the accountant, and the janitor. They don’t have time to evaluate vendors or manage edge hardware.

Training burden: With 78% annual turnover, they’re in perpetual onboarding mode. Adding new software means re-training a revolving door of employees.

Risk aversion: They’ve seen technology projects fail before (the Oracle-MICROS migration, the “iPad POS” that never worked right). They’re skeptical by experience, not by ignorance.

Adoption timeline: Years 5–10+. By Year 5, maybe 5–10% of independents will have adopted. By Year 10, maybe 30–40%.

Evidence: Mobile POS adoption shows this exact pattern. Square went after independents with dead-simple, low-cost card readers. Toast went after multi-unit restaurants with integrated, high-touch systems. Both succeeded — but at very different price points and adoption speeds.

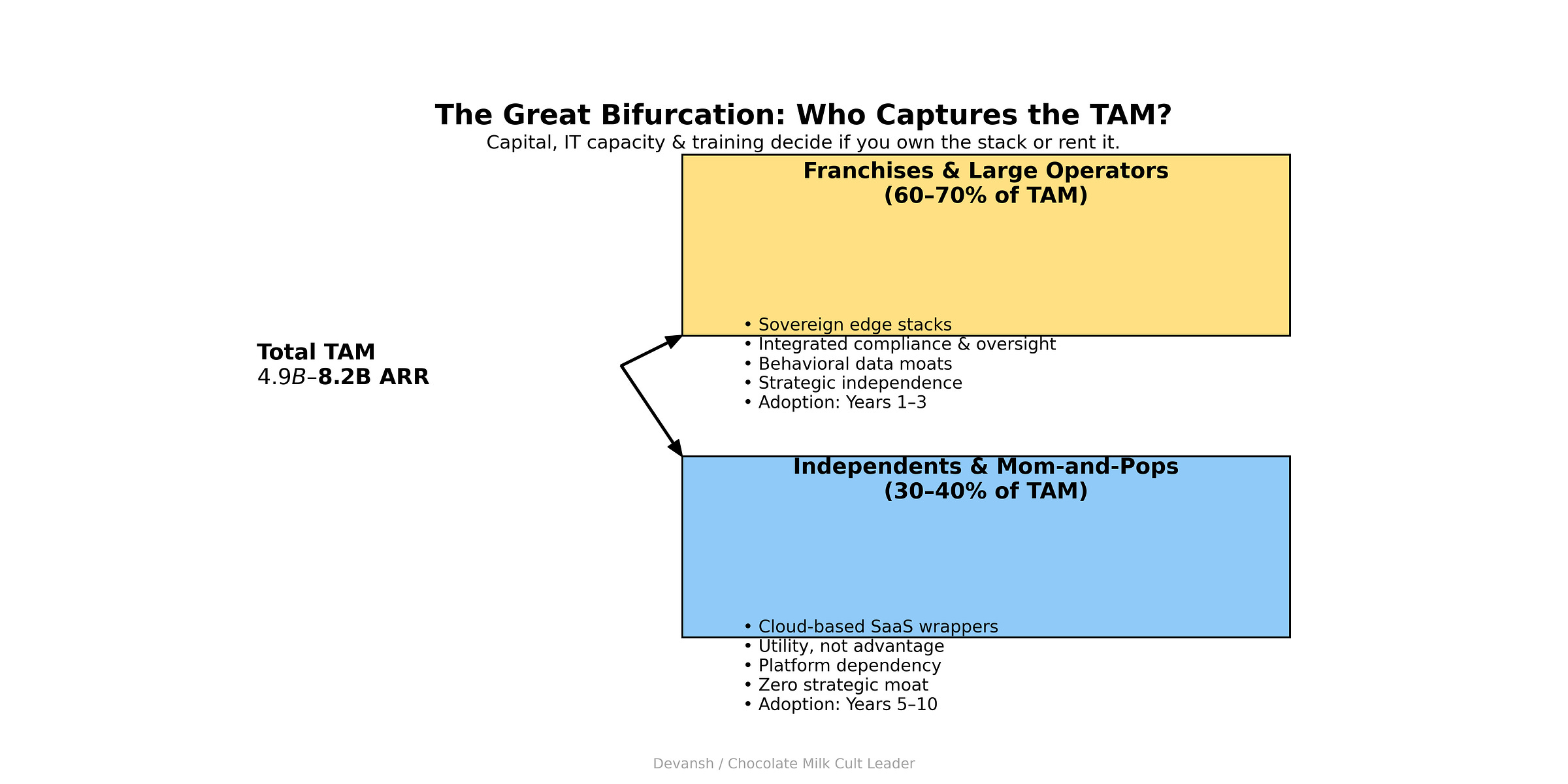

What This Means for the TAM

The $4.9B-$8.2B TAM breaks down roughly as:

60–70% captured by franchises and large operators (they adopt faster and spend more per site)

30–40% captured by independents (they adopt slower and buy cheaper, lighter-weight tools)
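As a quick sanity check on that split, here is a minimal sketch that turns the percentages into dollar ranges. The only inputs are the TAM bounds and share ranges quoted above. The helper name `split_tam` is made up for illustration, and the sketch assumes independents get whatever franchises do not capture.

```python
# Rough arithmetic on the TAM split quoted above.
# Inputs come from the text; the helper name is hypothetical.
TAM_BOUNDS_BILLION = (4.9, 8.2)   # low and high TAM estimates, in $B
FRANCHISE_SHARES = (0.60, 0.70)   # share captured by franchises and large operators


def split_tam(tam_billion: float, franchise_share: float) -> tuple[float, float]:
    """Return (franchise_dollars, independent_dollars) in $B.

    Assumption: the two shares always sum to 100%.
    """
    franchise = tam_billion * franchise_share
    return franchise, tam_billion - franchise


if __name__ == "__main__":
    for tam in TAM_BOUNDS_BILLION:
        for share in FRANCHISE_SHARES:
            franchise, independent = split_tam(tam, share)
            print(
                f"TAM ${tam}B, franchise share {share:.0%}: "
                f"franchises ${franchise:.2f}B, independents ${independent:.2f}B"
            )
```

Under those assumptions, franchises land somewhere between roughly $2.9B and $5.7B, and independents between roughly $1.5B and $3.3B.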

But here’s the darker implication: the value doesn’t just flow to different operators at different speeds. It flows to different product tiers that create permanent stratification.

Franchises get:

Sovereign edge stacks (Jetson Orin, proprietary models, full control)

Integrated compliance and oversight layers

Behavioral data moats (they own the correction exhaust)

Strategic independence (no platform lock-in)

Independents get:

Cloud-based SaaS wrappers (ChatGPT Plus, Gemini, lightweight APIs)

Utility, not advantage

Platform dependency (they rent cognition, they don’t own it)

Zero strategic moat (their data flows upstream to the vendor)

This is the Square vs Toast bifurcation playing out again.

Square gave independents access to card processing — but Square kept the transaction data, the customer relationships, and the pricing power. The merchant got utility; Square got the business.

Toast gave multi-unit operators a full-stack restaurant OS — and charged $165/month per location for it. But the operator controlled the data, integrated deeply with their operations, and built lock-in through workflow dependency.

AI adoption will follow this exact pattern. The tools that independents can afford will be the ones that extract value upstream. The tools that franchises deploy will be the ones that compound advantages locally.

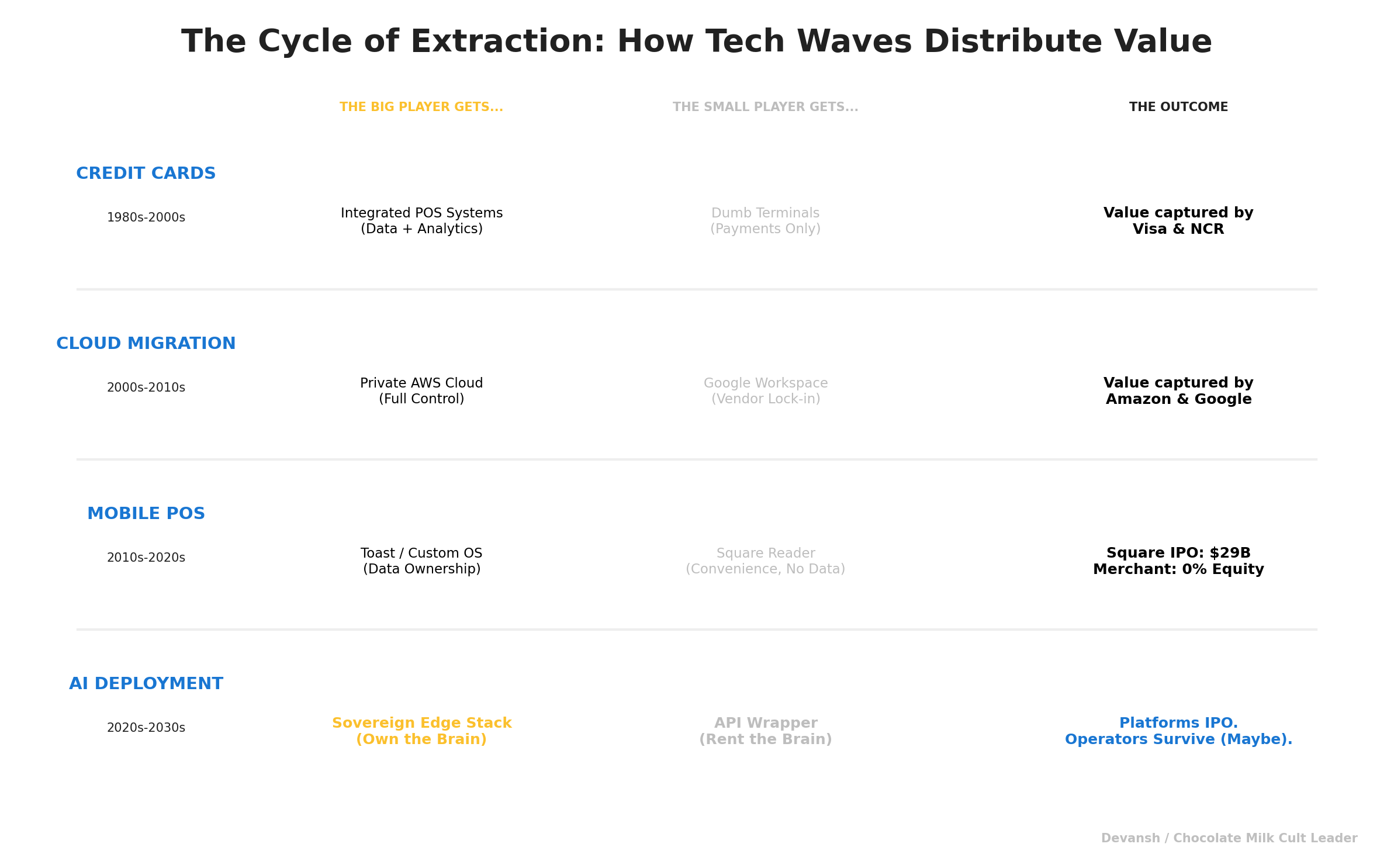

Historical Analogues: This Has Happened Before

This isn’t speculation. We’ve seen this movie three times already.

The Setup

So we have a $55/month tech stack that works (Part II).

We have an $8.2 billion market desperate to buy it (Part III).

It sounds like the perfect trade.

But there is a reason we called this the “Suicide Pact” in Part I. The old pact was financial. The new pact is operational. Because when you deploy probabilistic, hallucinating agents into physical environments, you aren’t just deploying software. You are deploying risk.

We have quantified the financial upside. Now we need to audit the AI Tax.

PART 4 — THE AI TAX: THE BILL COMES DUE

Congratulations. You used the “New Stack” from Part II. You built a $5 million logistics platform for $400,000 using a squad of agents and a junior developer named Tim. You deployed it to the edge. The cost collapse worked.

Now, meet the Repo Man.

The “Cost Collapse” isn’t a gift; it’s a displacement. You didn’t delete the cost of engineering; you moved it from Capex (upfront build) to Opex (future disaster cleanup). When you replace deterministic code with probabilistic models, you sign a new kind of contract. You trade certainty for velocity.

In a chat app, that’s fine. In a supply chain, it’s a liability.

Here is the bill they don’t show you in the demo.

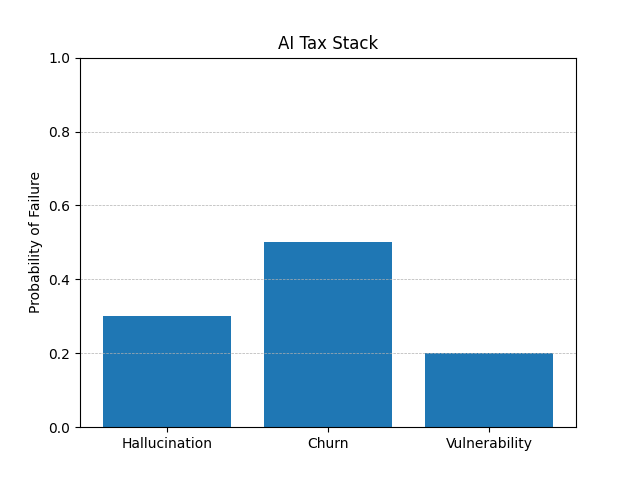

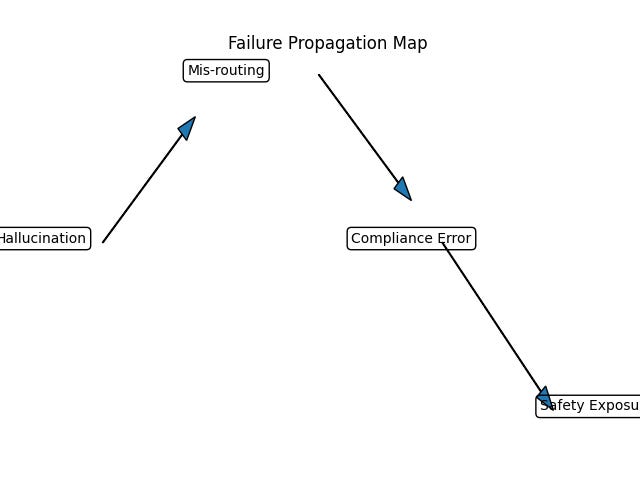

1. The Hallucination Tax (Or: The 26% Failure Rate)

If ChatGPT lies to you about a recipe, you make a bad dinner.

If an agent lies to a warehouse management system about inventory levels, you miss a shipment, lose a client, and get sued.

The industry loves to talk about “98.5% accuracy.” That sounds great. It’s an A+.

But brownfield workflows aren’t single questions. They are chains (Receive Order → Check Stock → Verify Credit → Schedule Truck → Print Label).

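The Math: A 5-step chain with 98.5% accuracy per step has roughly a 93% success rate (0.985^5 ≈ 0.927), assuming each step fails independently.

The Reality: A complex 20-step workflow (common in logistics) has a 26% failure rate at that same per-step accuracy (1 − 0.985^20 ≈ 0.26).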
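If you want to check the compounding yourself, here is a minimal sketch. It assumes every step has the same accuracy and that steps fail independently of each other, which real workflows will not perfectly satisfy. The function name is illustrative.

```python
def chain_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumption: identical per-step accuracy and independent failures.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


if __name__ == "__main__":
    for steps in (1, 5, 20):
        success = chain_success_rate(0.985, steps)
        print(f"{steps:>2} steps: {success:.1%} success, {1 - success:.1%} failure")
```

The per-step number barely moves, but the end-to-end failure rate goes from 1.5% at one step to roughly 26% at twenty.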

We saw this in Mata v. Avianca, where a lawyer used ChatGPT to write a brief, and it invented fake court cases (clearly unaware of Iqidis and its amazing Citation Checking Feature, making it a godsend for lawyers). Everyone laughed at the lawyer.

But imagine that agent isn’t writing a brief. Imagine it’s checking OSHA compliance for a construction site. It “hallucinates” a safety regulation that doesn’t exist, or worse, ignores one that does. The “Hallucination Tax” is the cost of building the Oversight Stack — the layers of human-in-the-loop verification required to catch that 26% failure rate before it hits the physical world.
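To see what an Oversight Stack buys you, here is a rough sketch under simplifying assumptions: a reviewer checks every step, catches a fixed fraction of agent errors, and never introduces new ones. The `catch_rate` parameter and the 90% value used below are hypothetical, not figures from any study.

```python
def residual_failure_rate(step_accuracy: float, steps: int, catch_rate: float) -> float:
    """End-to-end failure rate after human review of each step.

    Assumptions (all simplifications):
      - every step has the same accuracy and fails independently
      - the reviewer catches `catch_rate` of the agent's errors
      - a caught error is fully corrected and review adds no new errors
    """
    if not 0.0 <= catch_rate <= 1.0:
        raise ValueError("catch_rate must be between 0 and 1")
    # Errors that slip past the reviewer are the only ones that count.
    effective_accuracy = step_accuracy + (1.0 - step_accuracy) * catch_rate
    return 1.0 - effective_accuracy ** steps


if __name__ == "__main__":
    for catch_rate in (0.0, 0.5, 0.9):
        failure = residual_failure_rate(0.985, 20, catch_rate)
        print(f"catch rate {catch_rate:.0%}: {failure:.1%} of 20-step runs still fail")
```

With a hypothetical 90% catch rate, the 20-step failure rate drops from about 26% to about 3%. That gap is what the supervisory labor is paying for.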

2. The Bloat Tax (The Archaeology Problem)

AI writes code fast. But it writes bad code fast.

It repeats itself. It ignores “Don’t Repeat Yourself” (DRY) principles. It imports libraries you don’t need. It creates “Spaghetti Code” at the speed of light.

The data is damning:

Code Churn is Doubling: GitClear analyzed 153 million lines of code. Since Copilot took over, “code churn” (lines written and then deleted within 2 weeks) has doubled. This is the fingerprint of “Vibe Coding” — generating trash, realizing it’s trash, and deleting it.

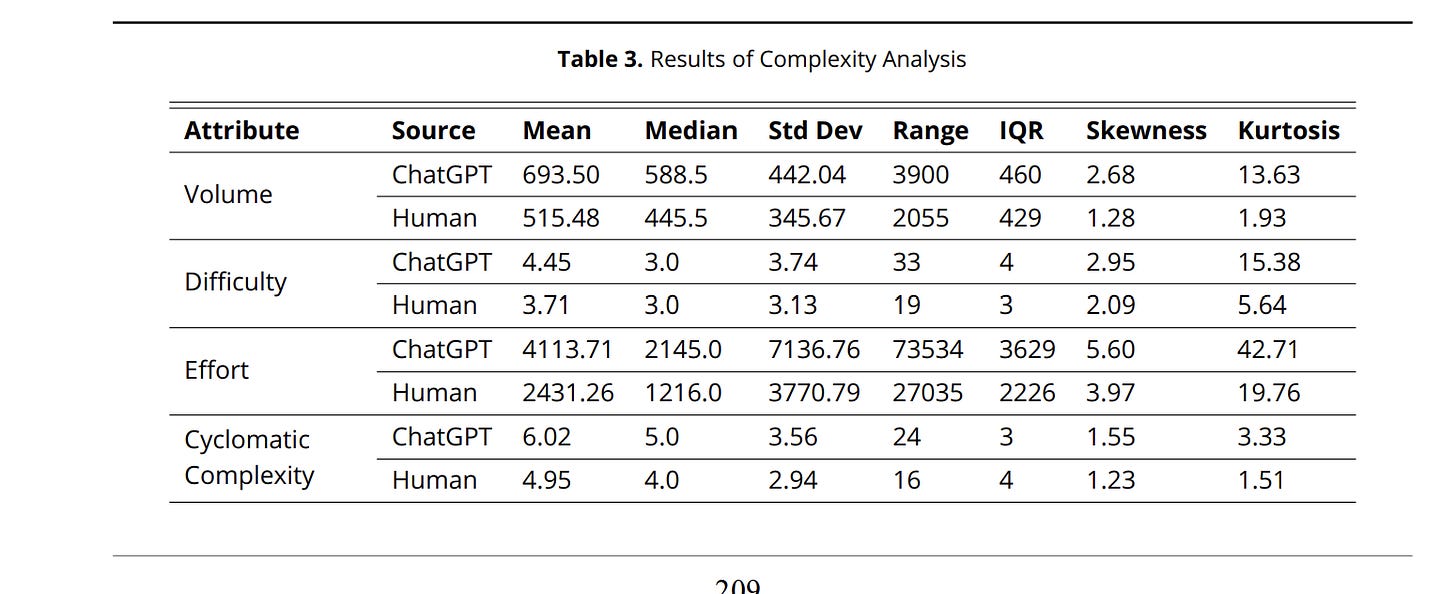

Complexity Explosion: A 2025 VFAST study found that AI-generated Python code had 22% higher cyclomatic complexity than human code.
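For readers who haven't met cyclomatic complexity before, here is a simplified counter built on Python's standard `ast` module. It is a sketch, not the methodology of the study cited above: it counts only a handful of branching constructs, and dedicated tools count more. The two sample functions are invented for illustration and behave identically.

```python
import ast

# Node types treated as one extra decision point each (a simplification).
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra paths, one per additional operand.
            complexity += len(node.values) - 1
    return complexity


TERSE = '''
def is_valid(order):
    return order["qty"] > 0 and order["sku"] is not None
'''

BRANCHY = '''
def is_valid(order):
    if order["qty"] > 0:
        if order["sku"] is not None:
            return True
        else:
            return False
    else:
        return False
'''

if __name__ == "__main__":
    print("terse:", cyclomatic_complexity(TERSE))
    print("branchy:", cyclomatic_complexity(BRANCHY))
```

Same behavior, more paths to test and more places for a fix to go wrong. Multiply that by an entire codebase and you get the archaeology problem.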

For a brownfield operator with a small IT team, this is fatal. You saved money on the build, but now you need a team of seniors just to untangle the mess when it breaks six months later. This is Knowledge Debt, and it compounds daily.

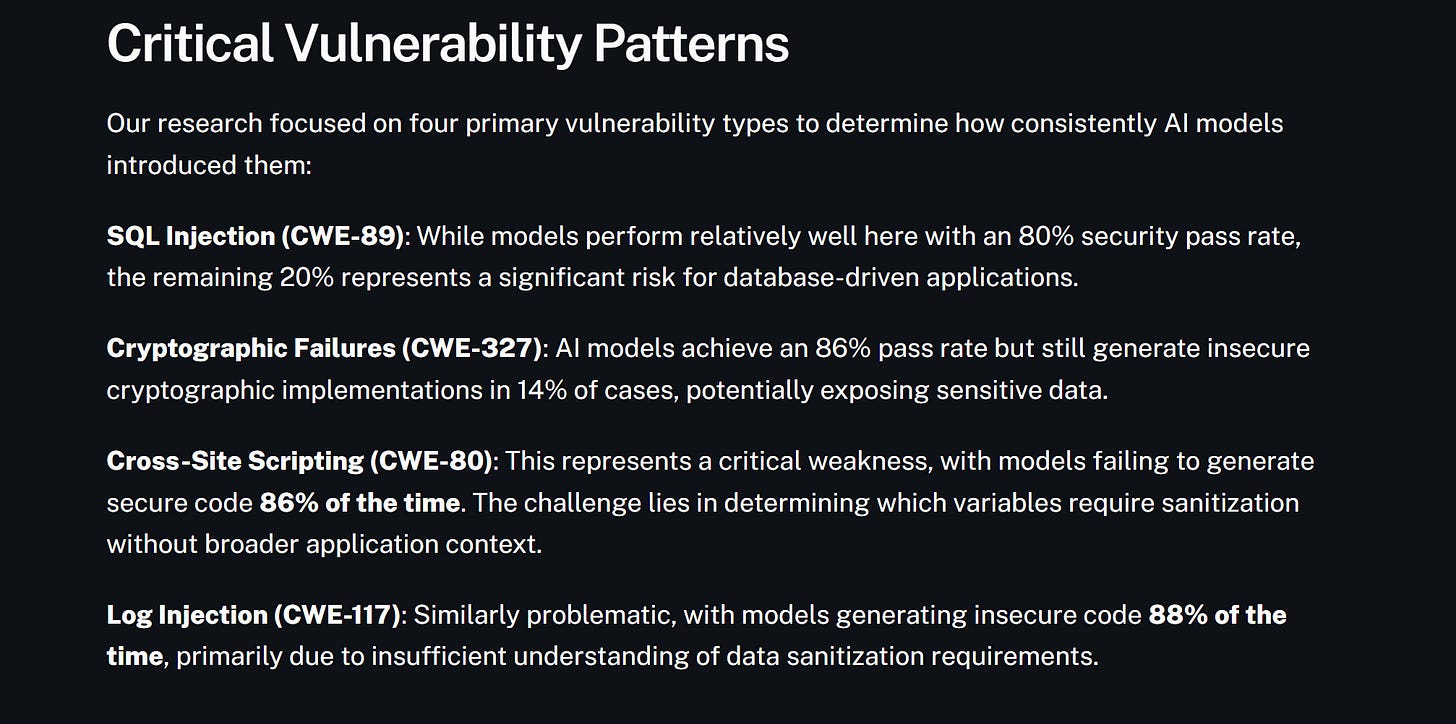

3. The Vulnerability Tax (Security as an Afterthought)

“However, our comprehensive analysis of over 100 large language models reveals a troubling reality. Across 80 coding tasks spanning four programming languages and four critical vulnerability types, only 55% of AI-generated code was secure. This means nearly half of all AI-generated code introduces known security flaws.

What’s more concerning: this security performance has remained largely unchanged over time, even as models have dramatically improved in generating syntactically correct code. Newer and larger models don’t generate significantly more secure code than their predecessors.” — Source.

Veracode tested huge swaths of AI-generated code. The results should terrify anyone putting this stuff into production:

45% of AI-generated code contained security vulnerabilities.

72% of Java code failed security checks.

We aren’t talking about obscure bugs. We are talking about SQL Injection and Hardcoded Credentials. The basics.
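To make "the basics" concrete, here is a small self-contained sketch of SQL injection using Python's built-in `sqlite3` module. The table, column names, and function names are invented for illustration. It covers SQL injection only, not hardcoded credentials.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with two shipments."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE shipments (id INTEGER PRIMARY KEY, customer TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO shipments (customer, status) VALUES (?, ?)",
        [("acme", "delivered"), ("globex", "in_transit")],
    )
    return conn


def find_shipments_unsafe(conn: sqlite3.Connection, customer: str) -> list:
    # Vulnerable: user input is pasted straight into the SQL string.
    query = f"SELECT id, customer FROM shipments WHERE customer = '{customer}'"
    return conn.execute(query).fetchall()


def find_shipments_safe(conn: sqlite3.Connection, customer: str) -> list:
    # Parameterized: the driver treats the input as data, never as SQL.
    query = "SELECT id, customer FROM shipments WHERE customer = ?"
    return conn.execute(query, (customer,)).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"  # classic injection string
    print("unsafe:", find_shipments_unsafe(conn, payload))  # leaks every row
    print("safe:  ", find_shipments_safe(conn, payload))    # matches nothing
```

The difference is one line. That is exactly why it slips through when nobody reviews what the model wrote.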

In a SaaS startup, a breach is a bad PR day.

In a brownfield industry — like a trucking fleet or a food processor — a breach means ransomware shutting down the factory floor.

The “Vulnerability Tax” is the cost of auditing every line of code the robot wrote. If you skip the audit to save money, you are essentially leaving the keys in the ignition of your entire company.

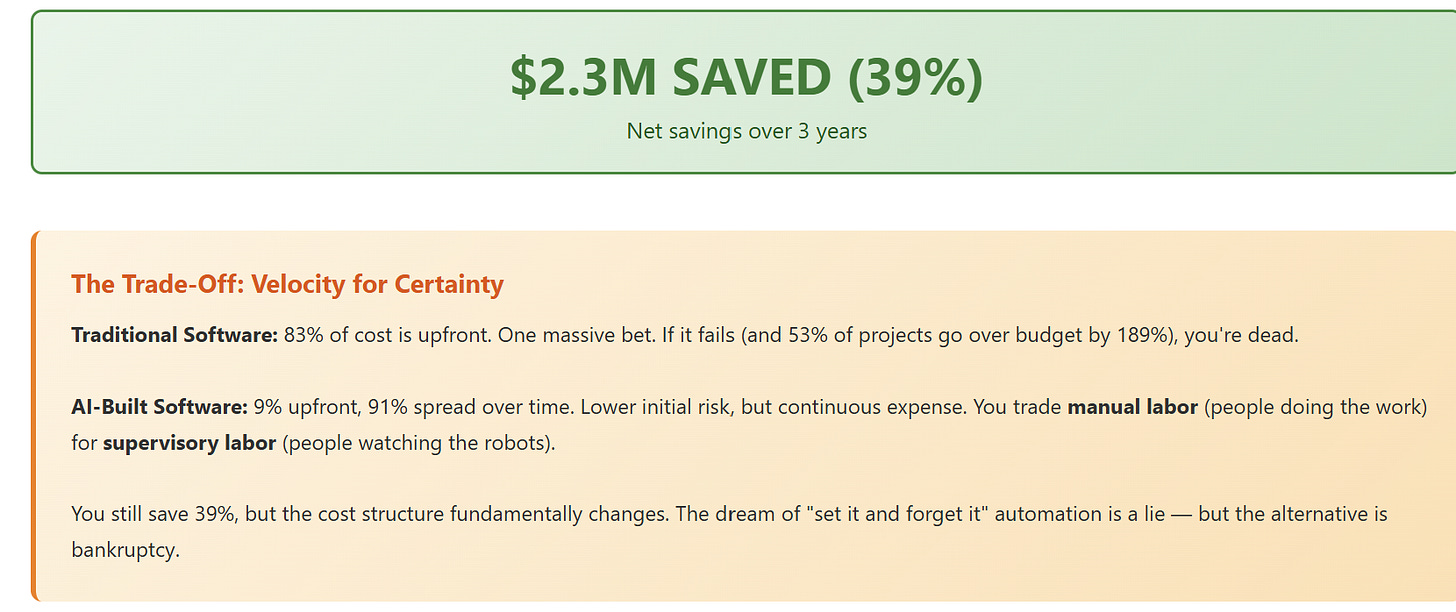

4. The Net Result: The Free Lunch is Over

The “Cost Collapse” thesis from Part II is true, but it comes with a caveat.

You can build cheap. But you cannot build lazy.

The Upside: You get into the game for $400k instead of $5M.

The Downside: You are now managing a system that is statistically likely to lie to you, structurally guaranteed to be bloated, and probabilistically insecure.

This doesn’t mean you shouldn’t do it. You have no choice.

But it means that the dream of “set it and forget it” automation is a lie. You are trading manual labor (people doing the work) for supervisory labor (people watching the robots).

And while you are busy watching the robots, the platform giants are busy figuring out how to turn you into a tenant.

PART 5: The Great Re-Centralization

The cost collapse in Part II should create a golden age of independent, sovereign operators. It should let a family farm outcompute a conglomerate.

But technology doesn’t exist in a vacuum. It exists in a market structure designed to extract rents. While you are busy deploying agents, two forces are conspiring to turn you from an owner into a sharecropper.

1. The Incumbent Pincer (Why You Can’t Undercut Toast)

The standard startup playbook is “enter at the low end with a cheaper product.”

In the AI era, that playbook is dead.

The Mechanic: You launch a specialized AI inventory agent for $50/month. Toast looks at your feature, builds a “good enough” version using their massive data advantage, and bundles it into their existing platform for free.

The Result: You can’t compete with free.

And you can’t exit, either. The IPO window is nailed shut. The only exit is M&A.

In 2024, there were 2,107 SaaS M&A deals. The vast majority were “tuck-ins”: incumbents buying threats for pennies ($10M–$50M) to absorb their tech and kill their brand.

You aren’t building a competitor. You are building R&D for Oracle. On the bright side, if you don’t dilute recklessly and you use AI to build cheaply, you can walk away with a good bit of gambling + cocaine money.

2. The Policy Rigging (The State-Sponsored Oligopoly)

Don’t expect the government to bust the trust. They are the ones signing the checks. Public policy is actively accelerating centralization through Procurement Bias.

The GSA Anointment: The U.S. General Services Administration (the world’s biggest buyer) didn’t open a marketplace. They effectively anointed a Holy Trinity: OpenAI, Google, and Anthropic. If you aren’t them, good luck selling AI to Uncle Sam.

The Subsidy Gap: Look at the EU’s “Digital Decade” subsidies. The data shows a massive Amplification Gap. Large enterprises are adopting AI at 4.2x the rate of SMEs using these funds. Why? Because grants require compliance teams, grant writers, and lawyers.

In a tale as old as time, public money flows to those who already have money. If you’re a business owner, it’s always worth remembering that no level of product or technical brilliance can ever outcompete a bribe.

This leaves the brownfield operator in a trap. You have to adopt the tech to survive, but the system is rigged to extract the value.

Navigating this is a very tricky question, and honestly, out of the scope of this article. We will cover it another time (reach out to me if you’re impatient).

For now, let’s bring this article to a close.

Conclusion

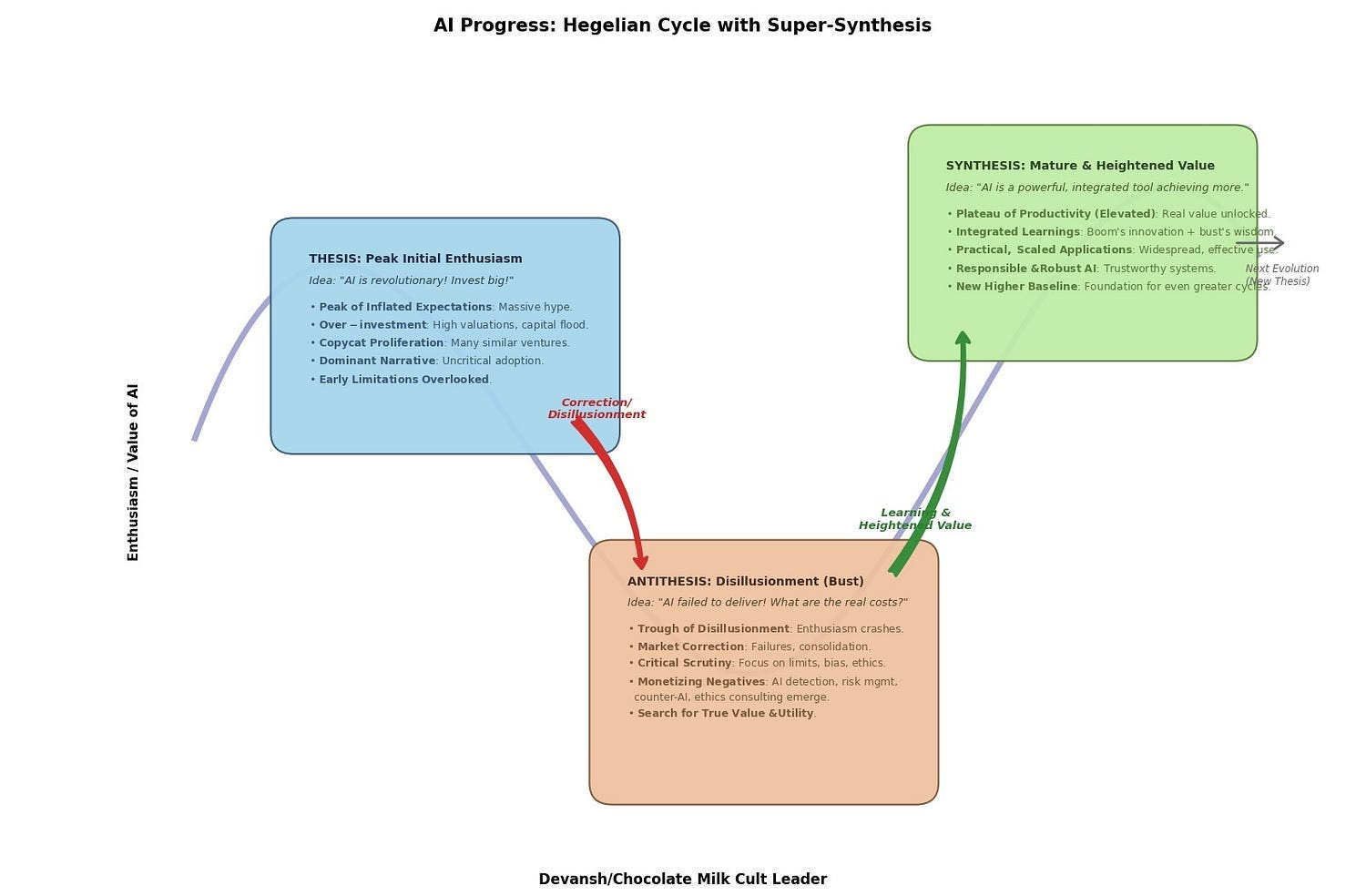

Let’s step back from AI and look at the history of computing to see what insights we can pull from it.

Every major technological wave follows the same diffusion curve:

Built by experts

Used by generalists

Owned by everyone

Confused? Time for a History Lesson in Computing.

Stage 1: The Domain of Experts (1940s–1970s)

In the early decades, computers were:

Massive, fragile, and expensive.

Operated by physicists, engineers, and specialists.

Built in labs, military programs, or research centers.

They were inaccessible — not just technically, but culturally. You didn’t “use” computers. You built them, maintained them, debugged them.

The tools were raw. The workflows were alien. And almost no one knew how to make them useful to non-experts.

Stage 2: The Domain of Generalists (1980s–2000s)

This is where the inflection began.

PCs entered offices, schools, and homes.

Software abstracted away the need to “speak machine.”

The personal computer, productivity suite, and GUI stack turned experts into builders — and builders into product designers.

Still: to truly leverage the technology, you needed to be semi-technical. Early adopters. Developers. The “computer literate.”

It was usable — but not yet default.

Stage 3: The Domain of Everyone (2000s–Today)

Today, the average user:

Doesn’t care what a processor is.

Doesn’t know how file systems work.

Doesn’t read manuals.

But they can navigate phones, apps, cloud systems, and workflows with near-fluency — because the infrastructure shifted.

UX, defaults, guardrails, and operating models matured to support non-technical, skeptical, or distracted users at massive scale.

Now Map That to AI

The AI trajectory is running the same gauntlet, facing the same evolutionary pressures. And understanding where we are on this map is critical to seeing why now is the perfect time to bet on AI going where no tech has ever gone before.

AI Stage 1: The Domain of Experts (The Alchemists — e.g., early pioneers of neural networks, symbolic AI, knowledge-based systems).

This was AI in its academic crucible. Models built from scratch, algorithms understood by a select few. The “users” were the creators. The output was often proof-of-concept, not product. The barriers were immense, the tools arcane.

AI Stage 2: The Domain of Generalists & Early Adopters (The Current Frontier — e.g., developers leveraging TensorFlow/PyTorch, prompt engineers using GPT-4 via APIs, businesses deploying off-the-shelf ML solutions).

This is where we largely stand today. Foundation models, APIs, and low-code platforms have dramatically expanded access. A new class of “AI generalists” can now build and deploy sophisticated applications without needing to architect a neural network from first principles. ChatGPT and its kin have even brought a semblance of AI interaction to a wider public.

AI Stage 3: The Domain of Everyone (The Unwritten Future — AI as truly ubiquitous, trusted, and seamlessly integrated utility).

It’s about making AI functional for the forgotten 90%. “AI for the rest of us”, to borrow from Steve Jobs (technically, I’m borrowing from someone who worked closely with Jobs on early Apple; this person attributes the “rest of us” framing to Jobs).

If we follow the current of history, then the spread of AI into the world is inevitable. It was never a matter of if, only when. And looking at the numbers, hardware, tooling, and foundational models (both intelligent and cheaper ones) are now at the point where they can make a dent in these workflows.

So are we getting into this, or do you hate money?

Thank you for being here, and I hope you have a wonderful day,

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium:

https://machine-learning-made-simple.medium.com/

My YouTube: https://www.youtube.com/@ChocolateMilkCultLeader/

Reach out to me on LinkedIn. Let’s connect: https://www.linkedin.com/in/devansh-devansh-516004168/

My Instagram: https://www.instagram.com/iseethings404/

My Twitter: https://twitter.com/Machine01776819
