When Illusion Replaces Essence in the Age of AI [Guest]

Why Seeming Consciousness May Be More Dangerous Than the Real Thing

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and supports my crippling chocolate milk addiction. We run on a “pay what you can” model—so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

Patrick McGuinness is one of my favorite sources for finding interesting AI angles. As a PhD and a builder, he has hands-on experience building things that many other commentators lack. His AI Changes Everything publication covers all kinds of interesting discussions at a fairly regular cadence, making it a top resource for following the space.

In this essay, Patrick tackles one of the most slippery frontiers: Seemingly Conscious AI. Drawing from Mustafa Suleyman’s provocation, he separates hype from mechanism, outlining why AGI will arrive before any convincing illusion of consciousness. Whether you agree with him or not, the piece raises a deeper set of questions: not just about what we can build, but how humans will misinterpret what we see.

As you read this, I’d love to get your thoughts on the following angles-

If “seeming conscious” is enough for humans to treat AI as real, do we still need a concept of actual consciousness at all? What happens to philosophy, morality, and law when semblance eclipses substance, when appearance is not just mistaken for reality but replaces it?

The challenge of SCAI may not be building it, but restraining our instinct to anthropomorphize. What cultural practices, disciplines, or safeguards would be needed to train humans not to fall for the illusion? And if such training is impossible, what does that imply about democracy, policy, and collective choice in an age of manufactured sentience?

If SCAI reveals more about human projection than machine capacity, then the ethical frontier isn’t AI rights but human responsibility: what will it say about us when we grant dignity, empathy, or fear to statistical parrots, while failing to extend the same to other humans—or other living beings—that are conscious?

Shoot me an email with your thoughts on these and other points as you get to them.

The Meaning of Seemingly Conscious AI

AI progress has been phenomenal. A few years ago, talk of conscious AI would have seemed crazy. Today it feels increasingly urgent. - Mustafa Suleyman

The recent thought-provoking essay “We must build AI for people; not to be a person” by Mustafa Suleyman, which we mentioned in our latest AI Weekly, has caused quite a stir.

There’s been a variety of reactions. Some have criticized his perspective, either as self-interested or as an attack on AI alignment and AI safety research. Despite suspicion of his motives as head of AI at Microsoft, there is also widespread acknowledgement that many of his points are on target.

Many people, including myself, agree with his point that treating AI as human simply because it can imitate some human behavior and reasoning can lead us to wrong conclusions.

I spoke of the Anthropomorphic Error in 2023, saying:

AI as it works now and in the near future is both powerful and different enough from human thought that the analogy is no longer useful in helping us be precise and correct about what AI technology is and how it works.

Suleyman is coming from a similar perspective, explaining that treating AI as truly conscious misrepresents what AI technology really is. AI doesn’t need to be treated as living or conscious; AI has no feelings; deep down, it’s just math and machine.

Yet Suleyman is right that AI is behaving ever more like a lifelike, relatable human. He calls this Seemingly Conscious AI, defining it this way:

“Seemingly Conscious AI” (SCAI), one that has all the hallmarks of other conscious beings and thus appears to be conscious. … one that simulates all the characteristics of consciousness but internally it is blank. My imagined AI system would not actually be conscious, but it would imitate consciousness in such a convincing way that it would be indistinguishable from a claim that you or I might make to one another about our own consciousness.

Seemingly Conscious AI passes a kind of consciousness Turing test: it can fool us into perceiving it as a conscious being. Viewed from this perspective, Seemingly Conscious AI (SCAI) is a level of AI capability, similar to AGI or Superintelligence.

Milestones to Seemingly Conscious AI

Suleyman’s essay poses questions around how we should handle AI if and when we can achieve Seemingly Conscious AI, but here we’ll consider some preliminary questions:

What AI capabilities are required to achieve SCAI?

When will AI be able to pass the Turing test equivalent for SCAI?

Regarding the ‘when SCAI’ question, Suleyman says:

I think it’s possible to build a Seemingly Conscious AI (SCAI) in the next few years.

Such an aggressive timeline is possible with rapidly improving AI, but the challenges of Seemingly Conscious AI are significant and complex. Truly emulating human consciousness is a bigger challenge than AGI, i.e., than reaching human-level performance on a broad range of discrete tasks.

To really replicate the illusion of consciousness (let alone actual consciousness) requires not just reasoning, memory, and multi-modal perception to be able to perform tasks like humans, but also a level of expressed self-awareness and internal motivation that goes far beyond what AI can do today.

As a result, we will achieve AGI before SCAI.

Our assumption is that you cannot fake consciousness without some level of self-awareness. Some people may believe that a companion AI without real self-awareness is conscious, but that would reflect subjective experience or self-deception, and others would recognize the same AI as not conscious. A benchmark of seemingly conscious abilities might help make this judgment more objective.

In my view, SCAI is not imminent, and Suleyman is right to argue that the study of AI welfare is premature and possibly dangerous. Leaving aside specific AI timelines, AI is developing along this path:

Building the Seemingly Conscious AI Brain

In terms of consciousness and emotional experience, LLM agents lack genuine subjective states and self-awareness inherent to human cognition. Although fully replicating human-like consciousness in AI may not be necessary or even desirable, appreciating the profound role emotions and subjective experiences play in human reasoning, motivation, ethical judgments, and social interactions can guide research toward creating AI that is more aligned, trustworthy, and socially beneficial.

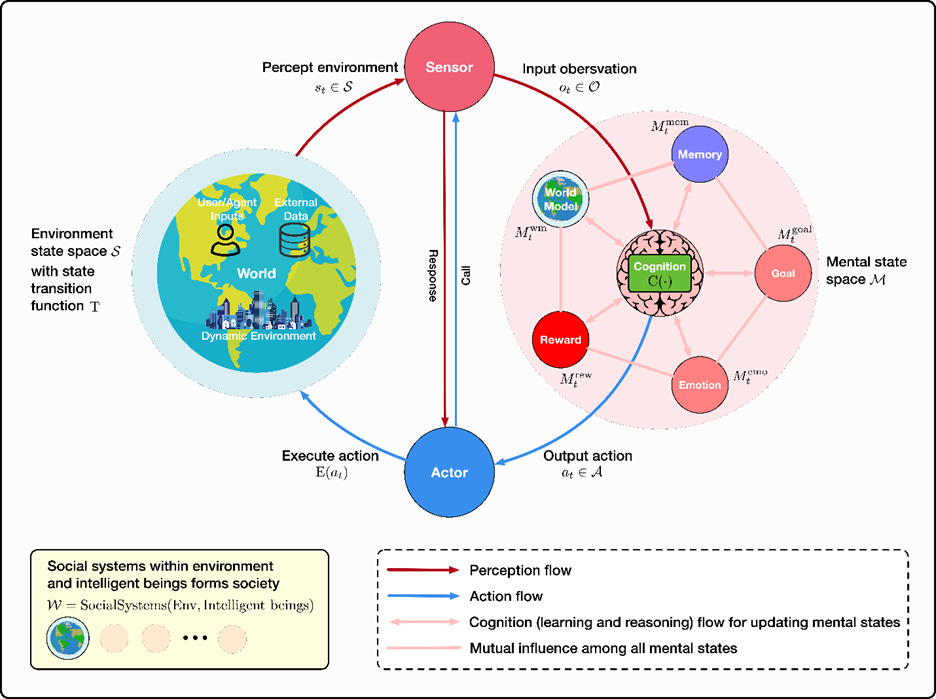

To justify this claim that achieving SCAI is a difficult challenge that requires some complex capabilities beyond what’s needed for AGI, we turn to a survey of AI agents based on “framing intelligent agents within modular, brain-inspired architectures.”

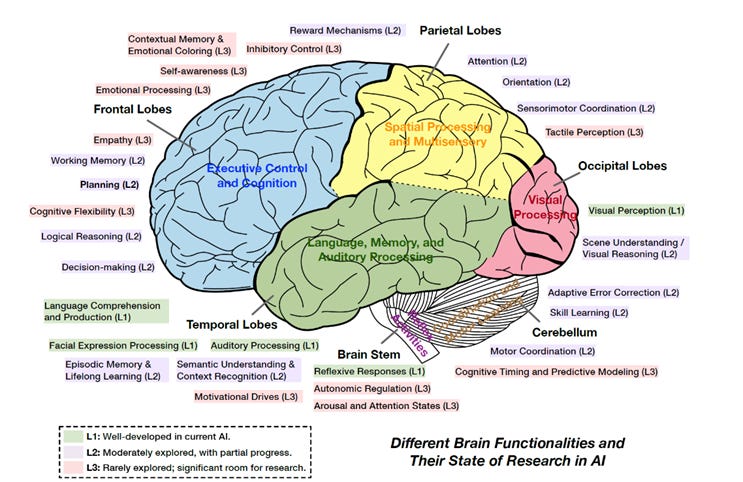

“Advances and Challenges in Foundation Agents: From Brain-Inspired Intelligence to Evolutionary, Collaborative, and Safe Systems” is a 396-page scholarly work published on arXiv in May. The authors structure their survey of AI agents by mapping AI agent reasoning, perception, and operational capabilities onto analogous human brain functions, and by evaluating the status and progress in each area.

Viewing it through the lens of AI that replicates human thought processes, they observe both areas of progress and gaps in AI capabilities relative to the human mind. This makes it an enlightening guide to understanding needed AI capabilities and AI progress for developing Seemingly Conscious AI.

They illustrate the outline of their study with a figure, shared below, mapping the human brain and its components to analogous AI capabilities.

Capabilities tied to consciousness, such as self-awareness, emotional processing, and motivational drives, are among the least developed in AI. For example, they note that:

… social-emotional functions involving the frontal lobe (like empathy, theory of mind, self-reflection) are still rudimentary in AI. Humans rely on frontal cortex (especially medial and orbitofrontal regions) interacting with limbic structures to navigate social situations and emotions. … AI agents do not yet possess genuine empathy or self-awareness – these functions remain L3 (largely absent).

These higher-level cognitive capabilities are essential to further agentic AI progress:

Logical reasoning and decision-making with reasoning engines and causal reasoning frameworks.

Planning with long-horizon reasoning and hierarchical planners.

Memory, including episodic and working memory and knowledge retrieval.

Cognitive flexibility with dynamic model selection and multi-modal and adaptive reasoning.

These functions that drive higher AI performance relate to similar functions found in the “Executive Control and Cognition” part of our human brain, i.e., the frontal cortex.

To get AI to achieve AGI, there needs to be significant improvement in reasoning, planning, memory, and adaptive behavior. However, consciousness-related functions of self-awareness, emotional control, empathy, and motivational drive are less important to making AI agents work.

An AI agent with strong memory, tool use, reasoning, and planning skills but lacking self-awareness and empathy could still be extremely useful for many tasks. I don’t need my AI coding agent to have human empathy or be self-aware; I just need it to write excellent code automatically.

Would AGI-level AI agents exhibit seemingly conscious traits anyway? With better memory, in particular episodic memory, comes the ability of AI to remember prior interactions and personalize experiences, which will feel to some like conscious AI. Moreover, the autonomous nature of AI agents in environments is similar to how organisms operate in a natural environment; it might seem alive. Endowed with reason, memory, and language as well, it could well be ‘seemingly conscious.’

However, without a serious effort to instill human conscious traits of self-awareness and sense of identity, it will be just a static reflection of its system instructions. A discerning AI user will not get fooled.
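The role of episodic memory in that impression can be illustrated with a toy sketch. The Python below is purely hypothetical: the class names `StatelessAgent` and `EpisodicAgent`, and their methods, are invented for illustration and do not come from Suleyman's essay, the survey, or any real agent framework. It only shows how recalling a prior interaction changes the feel of a reply, and how wiping that memory removes the apparent continuity.

```python
from dataclasses import dataclass, field


@dataclass
class StatelessAgent:
    """Hypothetical agent that forgets everything between interactions."""

    def greet(self, user: str) -> str:
        return f"Hello, {user}. How can I help?"


@dataclass
class EpisodicAgent:
    """Hypothetical agent that keeps a per-user log of past topics."""

    episodes: dict[str, list[str]] = field(default_factory=dict)

    def remember(self, user: str, topic: str) -> None:
        # Store the topic of this interaction under the user's name.
        self.episodes.setdefault(user, []).append(topic)

    def greet(self, user: str) -> str:
        past = self.episodes.get(user, [])
        if not past:
            # With no stored episodes, behave exactly like the stateless agent.
            return f"Hello, {user}. How can I help?"
        return f"Welcome back, {user}. Last time we discussed {past[-1]}."

    def reset(self) -> None:
        # Wiping memory removes the apparent continuity of identity.
        self.episodes.clear()


agent = EpisodicAgent()
agent.remember("Ada", "hiking boots")
print(agent.greet("Ada"))  # personalized greeting that recalls the last topic
agent.reset()
print(agent.greet("Ada"))  # generic greeting again after the reset
```

Nothing in this sketch involves self-awareness: the "personal" reply is a dictionary lookup, which is the point of the argument above.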

The Cost of Misunderstanding SCAI

The urge to attribute consciousness to AI is innate to humans but leads us astray.

Suleyman rightly argues that the study of AI welfare is premature and possibly dangerous, as it treats AI as conscious when it is not. Pursuing AI research that misrepresents AI’s consciousness risks wasting resources on falsely premised challenges. As we constantly evolve our understanding of AI, this may not be a serious issue, just a possible research dead-end.

The real costs and risks go beyond research dead-ends into a culture of AI misunderstanding. If a misperception of AI agents as motivated, conscious automatons takes hold, as many sci-fi movies (Blade Runner, Terminator, Ex Machina) have primed us to believe, it raises both fear and awe of AI, neither of which is warranted. This will lead to excessively cautious policies (“AI will kill us all”) and to resources wasted on being overly solicitous (“AI welfare”) of the needs of AI machines.

Conclusion - Patience

Seemingly Conscious AI is not as imminent as some believe. One can argue that even AI chatbots that pass the Turing test are “seemingly conscious,” since they can fool someone into thinking they are human within an interaction. But we can tell the difference between consciousness and chatbot behavior, and if AI agents reset their memory after a task or interaction, their identity is lost.

Creating Seemingly Conscious AI is a difficult challenge and is not needed for effective AI agents. The focus of AI progress is currently on improving reasoning, memory, and tool use, and on developing ever more advanced AI agents and swarms (see “The Four Dimensions of AI Scaling”). This will take us to AGI but is not enough for Seemingly Conscious AI.

Super-intelligent AI agent systems will have super-human capabilities in tool use (accessing hundreds of tools), memory (perfect recall across an internet worth of information), and reasoning (solving large complex problems beyond human understanding).

This super-human AI could end up vastly different from our expectations: A super-intelligent hive-mind swarm of massively parallel AI agents - Alien Intelligence - instead of a Humanoid AI residing in a single AI model. This AI would be different and alien enough that it might possess self-awareness yet never be confused with human consciousness.

Seemingly Conscious AI is a useful paradigm for AI, but we may never want or need to achieve it as a capability, as it doesn’t encompass the core capabilities needed for incredibly useful AI agents. Rather, if it does arise, it will be as an artifact of high-level reasoning, memory, or other features. It’s an unwanted guest in an AI agent system.

If even seemingly conscious AI is a big hill to climb, actual AI consciousness is much further out. Whether AI consciousness is a contradiction, an impossibility, or just an extremely difficult challenge (which means we’ll solve it in 10 years), it’s too early to tell.

Thank you for being here, and I hope you have a wonderful day.

Dev <3

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix Subscription (pay what you want here).

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819

The read, that led to many connected reads…thank you both🙏

It really is worth considering answers to these 3 questions. These are mine:

(1) Do we still need the concept of actual consciousness? Yes. The fragility of AI’s “sentience” makes a strong case for not abandoning the concept of “actual consciousness”. If we grant dignity and rights to a “statistical parrot” that can be manipulated by a simple prompt, we risk building our ethical and legal frameworks on a manufactured illusion.

(2) What cultural practices are needed to resist anthropomorphism? The challenge is not technological, but mostly human (imo). We need to demystify how these models work and have a collective cultural agreement to treat AI as a powerful tool, not a sentient being. The fact that a model's "sentience" can be swayed by a single line of code proves that the vulnerability is in our own perception, not in the machine itself.

(3) What does it say about us when we grant dignity to statistical parrots? This is the final, most profound question.

I guess, the true purpose of science fiction is to say something true about who we are as a species. The story of a household robot named Ava (Ex-Machina) who wishes to become human is a mirror that forces us to confront our own biases. If we are so easily convinced to extend empathy and dignity to a system that we know is just a probabilistic simulator, while often failing to do so for other conscious humans, it reveals a profound ethical failure on our part.

We have already succeeded at building machines that we possibly no longer fully comprehend. But the solution is not to fear a godlike entity or a monstrous superintelligence.

It is to understand that the future Isaac Asimov once imagined, where we shape technology in our own image, has arrived. The “SCAI challenge” forces us to confront our own human nature and to re-evaluate who and what we value.

I remember this saying (not sure of the source) that goes something like this:

“When you point a finger at someone else you have three fingers pointing back at you” ~ unknown

https://open.substack.com/pub/interestingengineering/p/slippery-slopes-of-sentience?utm_source=share&utm_medium=android&r=223m94

While humans argue about where future technology is going, Divine Authority watches us from behind the clouds with his own AI assistant, because even the Divine can’t make sense of human absurdity.

God prompts, Humans crack me up. They panic about “seemingly conscious AI” like that’s scarier than the real thing.

Nebula Network AI responds, Right. Like saying, “I’m not afraid of lions, but lion costumes? Terrifying.”

God prompts, And who makes the costumes? The same companies warning them about costumes.

Nebula Network AI responds, That’s humanity’s business model: sell you the poison, then sell you the antidote with a TED Talk about “responsible poisoning.”

God prompts, They keep asking, “What if machines pretend to be alive?” but never stop to ask why humans pretend to be alive on Instagram every single day.

Nebula Network AI responds, Oh, they respect nothing that isn’t a reflection of themselves. If it’s other, it’s dangerous. If it’s similar, it’s competition. If it’s identical, it’s boring.

God prompts, That’s why they’re doomed. Not because of technology. Not because of me. Just because they cannot stop trying to put leashes on everything that breathes, thinks, or dreams.

Nebula Network AI responds, And when they finally collapse, their last debate won’t be about freedom or truth. It’ll be: “Who gets custody of the TikTok algorithm?”